AI Agent Deployment in 2025: Frameworks, Tools, and Best Practices

Amit Eyal Govrin

TL;DR

- AI agent deployment is moving from single agents to distributed multi-agent systems requiring modular, secure, and flexible infrastructures.

- Effective deployment involves preparing agents with integrated tools, choosing the right environment, containerizing with Docker, and continuous monitoring.

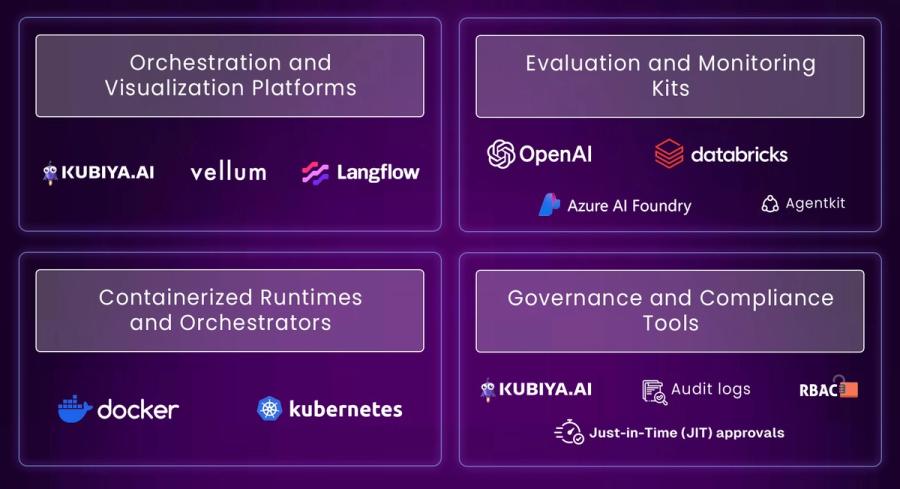

- Key tools include orchestration platforms like Kubiya and Vellum, evaluation kits, container runtimes (Docker, Kubernetes), and governance with RBAC.

- Leading frameworks such as LangChain, Kubiya.ai, AutoGen, and Langflow provide a balance of developer flexibility and enterprise-grade control.

- Best practices emphasize modular design, observability, governance, continuous benchmarking, and flexible hosting for scalable and compliant AI solutions.

Autonomous AI agents are no longer prototypes; by 2025, they are the engine of complex enterprise workflows. The deployment ecosystem has evolved significantly, shifting from isolated, single-agent projects to distributed multi-agent systems capable of orchestrating complex decision-making processes across business units. This transition is driven by advances in scalable frameworks, robust AI Governance requirements, and the necessity for Explainability (XAI).

Embracing this shift requires deployment strategies that emphasize modularity, robust security (including role-based access controls and immutable audit logging), and flexible hosting utilizing containerization (Kubernetes), cloud, and edge infrastructure.

As enterprises adopt AI agents for critical automation, from IT operations, incident management, and Agentic RAG for document search to customer service and supply chain optimization, the choice of frameworks and deployment tools is crucial.

Leading firms are seeing transformative results: IBM’s Watson AIOps slashed incident resolution time by 60%, while specialized multi-agent systems are reducing financial compliance research time by up to 75%.

Orchestration frameworks like LangChain, AutoGen, and Kubiya.ai strike the perfect balance between developer flexibility and enterprise-grade control, leveraging tools, APIs, and callable functions that let agents interact with databases, run code, and connect with external systems. Backed by real-time observability and strict policy enforcement, these technologies are the foundation of stable, secure, and massively scalable AI deployments.

This article shows how to deploy AI agents using tools like LangChain and Kubiya.ai, including an example of complex workflows. It also highlights important frameworks and trends to help organizations use AI for automation and compliance.

How to Deploy an AI Agent in 2025

Bringing the vision of AI agent deployment into reality involves following a practical, adaptable workflow applicable across leading frameworks like LangChain, Azure AI Foundry, and visual builders such as Langflow. The steps are fundamentally similar and can be tailored to suit your specific infrastructure and use case.

Step-by-Step Deployment Workflow

1. Prepare Your Agent

- Finalize your agent’s code, tools, and memory systems. For example, LangChain allows you to define tools (like search or API calls) and memory for context retention.

- Test your agent locally with realistic queries to validate responses.

2. Choose a Deployment Environment

- Options include cloud platforms (Azure, AWS), internal apps (Slack, Notion), web UIs, or mobile apps.

- For example, Azure AI Foundry provides quickstart guides for agent deployment as production services.

3. Containerize and Package

- Use Docker to package dependencies and the agent code for reliable deployment.

- Containerization ensures reproducibility and makes it easy to move between platforms or scale up.

4. Configure Monitoring and Logging

- Set up logging for agent actions, errors, and usage stats—vital for debugging and maintenance.

- Integrate tools for real-time uptime monitoring and alerting.

5. Deploy the Agent

- Push your container or code to the chosen environment (cloud service, server, or app integration).

- Configure endpoints, access controls (RBAC), and any integration touchpoints, such as APIs or messaging queues.

- For web-based deployment, you might embed your agent behind an API or chatbot interface.

6. Operationalize and Monitor

- Observe real interactions. Use metrics to track task success rates, failure patterns, and user feedback.

- Continuously refine the agent—for production, plan for updates, data refreshes, and adaptation to changing user requirements.

```bash
# Install packages
pip install langchain langchain-openai

# Set environment variables
export OPENAI_API_KEY=your_key
```

```dockerfile
# Sample Dockerfile
FROM python:3.10
WORKDIR /app
COPY . /app
RUN pip install langchain langchain-openai
CMD ["python", "your_agent_script.py"]
```

- Build your Docker image and deploy to any cloud service or internal server.

- For enterprise use, follow guides like Azure AI Foundry's quickstart to spin up managed agent services.
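Step 5 mentions placing the agent behind an API. The sketch below shows one way that could look using only the Python standard library. The `run_agent` function is a hypothetical stand-in for the real agent call, and the `/`-agnostic POST handler, port, and JSON field names are assumptions made for illustration, not part of any framework named above.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def run_agent(query: str) -> str:
    """Hypothetical stand-in for the real agent call (e.g. a LangChain agent)."""
    return f"echo: {query}"


def handle_query(payload):
    """Validate a decoded JSON body and return (HTTP status, response dict)."""
    if not isinstance(payload, dict):
        return 400, {"error": "request body must be a JSON object"}
    query = payload.get("query")
    if not isinstance(query, str) or not query.strip():
        return 400, {"error": "field 'query' must be a non-empty string"}
    return 200, {"answer": run_agent(query.strip())}


class AgentHandler(BaseHTTPRequestHandler):
    """Accepts POST requests with a JSON body such as {"query": "..."}."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            status, body = 400, {"error": "request body must be valid JSON"}
        else:
            status, body = handle_query(payload)
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host="0.0.0.0", port=8080):
    """Block and serve requests; intended as the container entry point."""
    HTTPServer((host, port), AgentHandler).serve_forever()
```

Keeping the validation in `handle_query` separates it from the HTTP plumbing, so it can be unit-tested without starting a server. A production service would normally add authentication and run behind a hardened web server rather than `http.server`.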

Key Tips for Successful Deployment

- Start simple: deploy one agent, then observe and refine before scaling.

- Implement strong governance: enforce authentication, permissions, and audit trails.

- Make monitoring a first-class feature, not an afterthought.

Once deployment workflows are established, the next decision involves choosing where and how to run these agents; this is where deployment models play a crucial role.

AI Agent Deployment Models in 2025

When deploying AI agents, choosing the right deployment model is critical and depends on factors such as data control, latency requirements, cost constraints, and workload characteristics. Here’s an overview of the most common deployment approaches:

| Deployment Strategy | Overview | Benefits | Considerations |
|---|---|---|---|
| Cloud | Runs on third-party platforms like AWS, Azure, GCP | Scalable, managed services, access to GPUs | Privacy concerns, latency, vendor lock-in |
| On-Premises | Hosted on the company’s own servers/data centers | Full control, security, low latency | High upfront cost, limited scalability |
| Edge | Executes on local or IoT devices near the data source | Real-time, enhanced privacy, offline capable | Resource constraints, complex updates |
| Serverless | Pay-per-use, event-driven (e.g., AWS Lambda) | Auto-scaling, low ops overhead, cost efficient | Cold starts, time/resource limits |
| Hybrid | Combines cloud, on-prem, and edge based on workload needs | Flexible, security options, cost optimization | Complex architecture, integration challenges |

Selecting the appropriate deployment model requires balancing organizational needs, technical requirements, and costs to achieve effective, secure, and scalable AI agent operations.

With foundational deployment models defined, understanding the tools powering these operations helps bridge experimentation with enterprise-scale production.

Core Tools Shaping AI Agent Deployment in 2025

The production use of AI agents relies on four core categories of tools that move prototypes into secure, scalable, and compliant enterprise systems.

1. Orchestration and Visualization Platforms

These tools provide the control plane and visual interface for building, debugging, and managing complex multi-agent logic. They are essential for turning abstract agent collaboration into measurable workflows.

- Vellum and Langflow offer visual builders to define the agent's flow, tools, and state transitions, simplifying flow tracing and multi-agent debugging.

- Kubiya.ai is a leading Infrastructure Orchestration Layer that focuses on making agents actionable and context-aware. It automates complex, multi-step DevOps and IT workflows (e.g., managing Terraform, Kubernetes, or incident response) by enabling agents to reason and act based on real-time system context.

2. Evaluation and Monitoring Kits

These tools ensure the agent remains reproducible, reliable, and accountable in production, addressing the challenge of model drift and operational visibility.

- Evaluation: Kits (like those in OpenAI’s AgentKit or provided by platforms like Databricks) define test datasets and metrics to measure agent performance, such as accuracy, faithfulness (groundedness), and task success rate.

- Monitoring: Enterprise solutions like Databricks' agent logs and Azure AI Foundry provide structured logging and tracing (often built on OpenTelemetry) to capture every input, output, and tool call for real-time alerting and post-mortem analysis.

3. Containerized Runtimes and Orchestrators

These technologies are the backbone of scalable, flexible deployment, ensuring the agent runs consistently across different environments (cloud, VPC, on-prem).

- Docker packages the agent code and all its dependencies into a single, portable container image.

- Kubernetes (K8s) provides the orchestration layer, automating the deployment, scaling, load balancing, and self-healing of these agent containers, making them production-ready for any flexible hosting environment.

4. Governance Stacks and Compliance Tools

In regulated environments, these specialized tools integrate security and compliance directly into the agent's operational lifecycle.

- Kubiya.ai excels in this category, providing a Zero-Trust execution environment. It natively enforces Role-Based Access Control (RBAC), requiring agent actions to adhere to defined policies, and supports features like Just-in-Time (JIT) approvals for high-risk actions.

- Audit Logging and Telemetry: Crucially, Kubiya and other governance tools create a complete, immutable audit trail of every significant agent action (who ran it, what tools it called, what data was accessed) to meet regulatory and security compliance standards.
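To make the RBAC and Just-in-Time approval ideas concrete, here is a minimal sketch of a policy check that an orchestration layer might run before executing an agent action. The role names, permissions, and the `authorize` function are hypothetical and do not reflect Kubiya's actual API or policy format.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical role-to-permission mapping; real platforms load this from policy files.
ROLE_PERMISSIONS = {
    "viewer": {"read_logs"},
    "operator": {"read_logs", "restart_service"},
    "admin": {"read_logs", "restart_service", "delete_cluster"},
}

# Actions that additionally need a human approval at the time of the request.
HIGH_RISK_ACTIONS = {"delete_cluster"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def authorize(role: str, action: str, approved_by: Optional[str] = None) -> Decision:
    """Check the role's permissions first, then the JIT approval for risky actions."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return Decision(False, f"role '{role}' lacks permission '{action}'")
    if action in HIGH_RISK_ACTIONS and approved_by is None:
        return Decision(False, f"'{action}' requires just-in-time approval")
    return Decision(True, "allowed")


print(authorize("operator", "restart_service"))
print(authorize("admin", "delete_cluster"))
print(authorize("admin", "delete_cluster", approved_by="alice"))
```

Unknown roles fall through to an empty permission set, so the check denies by default, which matches the zero-trust stance described above.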

Example: Building and Deploying a Document-Search AI Agent with LangChain and Kubiya

Step 1: Build the Agent

Create a Python script agent.py to initialize a LangChain agent that can search a document collection and answer queries.

```python
# Assumes the LangChain 0.3-series package layout
# (langchain, langchain-community, langchain-openai); adjust imports for other versions.
from langchain.agents import initialize_agent, Tool
from langchain.indexes import VectorstoreIndexCreator
from langchain_community.document_loaders import TextLoader
from langchain_openai import OpenAI, OpenAIEmbeddings

# Load documents and build a vector index over them
loader = TextLoader("docs/my_document.txt")
index = VectorstoreIndexCreator(embedding=OpenAIEmbeddings()).from_loaders([loader])

# Initialize the OpenAI LLM
llm = OpenAI(temperature=0)

# Define a tool for document search (index.query takes the LLM explicitly)
search_tool = Tool(
    name="Document Search",
    func=lambda question: index.query(question, llm=llm),
    description="Use this to answer questions by searching the document.",
)

# Initialize the agent with the tool and LLM
agent = initialize_agent([search_tool], llm, agent="zero-shot-react-description")

# Query the agent
query = "What is the main benefit of AI agent deployment?"
response = agent.invoke({"input": query})
print(response["output"])
```

Step 2: Containerize the Agent

Create a Dockerfile to package the agent for reliable deployment:

```dockerfile
FROM python:3.10-slim
WORKDIR /app
COPY agent.py ./agent.py
COPY docs/my_document.txt ./docs/my_document.txt
# Version pin is an assumption that matches the imports used in agent.py
RUN pip install "langchain<1.0" langchain-community langchain-openai
# Pass OPENAI_API_KEY at runtime (docker run -e) instead of baking it into the image
CMD ["python", "agent.py"]
```

Step 3: Build and Run Locally

Build the Docker image and run locally to test:

```bash
docker build -t langchain-agent .
docker run -e OPENAI_API_KEY="<your_key>" langchain-agent
```

Step 4: Deploy Using Kubiya Agent Platform

- Instead of manually managing the container deployment, register your Docker image and agent service with Kubiya.

- Kubiya handles secure orchestration by managing container lifecycle on Kubernetes or OpenShift clusters.

- Set up RBAC and fine-grained access policies to control which teams and users can invoke or update this agent.

- Connect your agent to Kubiya’s chat-native interface (Slack, Microsoft Teams) or API gateway for interactive, secure invocations.

- Kubiya provides full observability with audit logs, usage tracking, and error monitoring out of the box.

Step 5: Monitor, Manage, and Update Through Kubiya

- Use Kubiya’s dashboard to monitor your agent’s uptime, query logs, and performance metrics.

- Roll out updates to your LangChain agent container smoothly with Kubiya’s controlled deployment pipeline and versioning.

- Leverage Kubiya’s policy enforcement and secrets management to secure API keys and sensitive data during runtime.

- Scale to multi-agent collaboration or trigger workflows across multiple LangChain agents using Kubiya orchestration capabilities.

Advanced AI Agent Deployment Architectures: AIOps, Finance, and Supply Chain

1. Autonomous Root Cause Analysis (RCA) in AIOps

In modern IT ecosystems, Incident Resolver Agents go beyond alert correlation to achieve self-healing infrastructure.

Goal:

Automatically detect, diagnose, and remediate recurring infrastructure issues—like memory leaks, high latency, or resource starvation—to reduce Mean Time To Resolution (MTTR).

Architecture Overview:

- Data Ingestion: Continuous metrics, logs, and traces from Prometheus, Datadog, Elasticsearch, and Kubernetes APIs, streamed via Kafka or AWS Kinesis.

- Anomaly Detection Core: Time-series models such as LSTM or Isolation Forest identify metric anomalies (e.g., a 5× latency spike).

- RCA Agent: A specialized LLM correlates anomalies with logs and CMDB data, performs reasoning, and pinpoints causes (e.g., “Deployment user-service-v3 caused memory leaks on Pods A–C”).

- Automated Remediation: Executes validated rollback actions using Kubernetes APIs, ensuring traceability via immutable audit logs.
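The anomaly detection step can be illustrated with a much simpler technique than the LSTM or Isolation Forest models named above. The sketch below swaps in a rolling-median baseline and flags any sample that is at least five times that baseline, echoing the 5× latency spike example. The function name, window size, and sample data are assumptions for illustration.

```python
from collections import deque
from statistics import median


def find_spikes(values, window=5, factor=5.0):
    """Return indices whose value is >= factor x the median of the previous `window` normal samples."""
    history = deque(maxlen=window)
    spikes = []
    for i, value in enumerate(values):
        if len(history) == window:
            baseline = median(history)
            if baseline > 0 and value >= factor * baseline:
                spikes.append(i)
                continue  # keep the spike out of the baseline
        history.append(value)
    return spikes


# Latency samples in milliseconds; the seventh sample is the injected spike.
latencies_ms = [100, 110, 95, 105, 100, 102, 650, 98, 101]
print(find_spikes(latencies_ms))
```

A median baseline is robust to a single outlier, and skipping flagged samples prevents one spike from inflating the baseline for the samples that follow. Seasonal or multi-metric patterns would need the heavier models the architecture describes.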

Outcome:

Noticeable reduction in MTTR and decrease in false positives, improving reliability and operational resilience.

2. Agentic RAG for Enterprise Financial Policy Search

For financial institutions, Agentic Retrieval-Augmented Generation (RAG) enables analysts to navigate thousands of regulatory and policy documents using natural language, reducing compliance risk and research time.

Goal: Deliver grounded, cited, and auditable answers from vast internal legal and compliance datasets.

Architecture Overview:

- Ingestion & Indexing: Regulatory PDFs and legal memos are embedded using domain-tuned BERT or Gemini models and stored in vector databases like Pinecone or Weaviate.

- Semantic Search: Queries (e.g., “What is the Tier 1 capital reserve requirement for Q4?”) are vectorized and matched using cosine similarity.

- Grounded Generation: The LLM generates responses only from retrieved documents, adding citations with source references.

- Validation: A citation-check tool ensures every statement is supported by retrieved evidence.
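The semantic search and validation steps above can be sketched in plain Python. This example ranks documents by cosine similarity and then checks that every citation in an answer points at a retrieved document. The three-dimensional vectors and document IDs are made up for illustration; a real system would use model-generated embeddings and a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query vector."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


def unsupported_citations(cited_ids, retrieved_ids):
    """Return citations that do not refer to any retrieved document."""
    retrieved = set(retrieved_ids)
    return [doc_id for doc_id in cited_ids if doc_id not in retrieved]


# Hypothetical toy index: document ID -> embedding vector.
INDEX = {
    "basel_memo": [0.9, 0.1, 0.0],
    "hr_policy": [0.0, 0.2, 0.9],
    "capital_rules": [0.8, 0.3, 0.1],
}
query_vec = [1.0, 0.2, 0.0]

retrieved_ids = [doc_id for doc_id, _ in top_k(query_vec, INDEX)]
print(retrieved_ids)
print(unsupported_citations(["basel_memo", "hr_policy"], retrieved_ids))
```

The citation check here only verifies that cited sources were actually retrieved. Confirming that each statement is supported by the cited passage, as the validation step describes, requires a further comparison of the statement against the source text.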

Outcome:

Higher faithfulness scores and significant reduction in manual policy research time.

3. Multi-Agent System for Supply Chain Optimization

Enterprises now deploy collaborative, domain-specific agents across ERP, WMS, and CRM systems to ensure resilience and efficiency in global supply chains.

Goal:

Optimize demand, inventory, and logistics in real time—minimizing costs and disruptions (e.g., port closures).

Architecture Overview:

- Framework: Built using AutoGen or CrewAI for orchestrated, multi-agent collaboration.

- Specialized Agents:

- Demand Forecasting Agent: Predicts short-term demand shifts via ARIMA/deep learning using CRM data.

- Inventory Agent: Tracks warehouse levels via WMS APIs to flag potential stockouts.

- Procurement Agent: Dynamically places or adjusts supplier orders through ERP APIs.

- Disruption Scenario: Upon receiving a PORT_CLOSED event, agents coordinate to rebalance operations—rerouting shipments, prioritizing high-value orders, and optimizing trade-offs.
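The disruption scenario can be sketched without any agent framework to show the coordination logic on its own. In this simplified example, plain functions stand in for the agents: one finds shipments affected by a `PORT_CLOSED` event, and another reroutes the highest-value orders first within a fixed capacity. The data model, function names, and capacity rule are hypothetical and are not AutoGen or CrewAI APIs.

```python
from dataclasses import dataclass


@dataclass
class Shipment:
    order_id: str
    port: str
    value: float


def inventory_agent(event, shipments):
    """Find shipments routed through the closed port."""
    return [s for s in shipments if s.port == event["port"]]


def procurement_agent(affected, reroute_capacity):
    """Prioritize high-value orders: reroute up to capacity, delay the rest."""
    ranked = sorted(affected, key=lambda s: s.value, reverse=True)
    return ranked[:reroute_capacity], ranked[reroute_capacity:]


def handle_event(event, shipments, alternate_port, reroute_capacity):
    """Coordinate the agents in response to a supply chain event."""
    if event.get("type") != "PORT_CLOSED":
        return {"rerouted": [], "delayed": []}
    affected = inventory_agent(event, shipments)
    rerouted, delayed = procurement_agent(affected, reroute_capacity)
    for shipment in rerouted:
        shipment.port = alternate_port
    return {
        "rerouted": [s.order_id for s in rerouted],
        "delayed": [s.order_id for s in delayed],
    }


shipments = [
    Shipment("A", "ROTTERDAM", 100.0),
    Shipment("B", "ROTTERDAM", 500.0),
    Shipment("C", "HAMBURG", 300.0),
    Shipment("D", "ROTTERDAM", 250.0),
]
event = {"type": "PORT_CLOSED", "port": "ROTTERDAM"}
plan = handle_event(event, shipments, alternate_port="ANTWERP", reroute_capacity=2)
print(plan)
```

In a framework-based system each function would become an agent with its own tools and the hand-offs would be messages, but the ordering of steps and the trade-off rule would look much the same.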

Outcome:

Marked reduction in backlog, lower expedited shipping costs, and faster recovery from disruptions.

Implementation Challenges and Improvement Strategies

Deploying AI agents at enterprise scale presents unique technical and organizational challenges. This section highlights key obstacles and recommended strategies to ensure stability, security, and scalability.

Governance and Security Controls

Challenge: Managing permissions, securing sensitive workflows, and complying with regulatory standards is complex in distributed AI systems. Failure leads to security risks and compliance violations.

Improvement Strategies:

- Enforce Role-Based Access Control (RBAC) early in the development lifecycle.

- Implement immutable audit logging for accountability.

- Adopt zero-trust execution models as offered by platforms like Kubiya.ai.

- Integrate Just-in-Time (JIT) approval workflows for high-risk actions.
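One common way to approximate immutable audit logging in application code is a hash chain, where each entry stores the hash of the previous one so that later edits become detectable. The sketch below is a minimal in-memory version under that assumption. The field names are illustrative, and it makes tampering evident rather than impossible; a production system would also write entries to append-only storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _digest(prev_hash, record):
    """Hash the record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, actor, action, resource):
    """Append an audit entry linked to the previous entry by its hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    record = {"actor": actor, "action": action, "resource": resource}
    log.append({"record": record, "prev_hash": prev_hash, "hash": _digest(prev_hash, record)})


def verify(log):
    """Recompute the chain and report whether every entry is intact."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "agent-7", "restart_service", "user-service")
append_entry(audit_log, "alice", "approve", "delete_cluster")
print(verify(audit_log))
```

Changing any earlier record, or removing an entry from the middle, breaks the chain from that point forward, so `verify` returns `False`.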

Observability and Monitoring Integration

Challenge: A lack of robust monitoring hampers failure detection and performance troubleshooting, diminishing trust in AI agents.

Improvement Strategies:

- Embed distributed tracing and telemetry (logs, metrics, event streams) using tools compliant with OpenTelemetry.

- Use structured, fine-grained logging to capture all agent inputs, outputs, and tool executions.

- Establish real-time alerting and dashboards for visibility into runtime behavior.
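As a rough illustration of structured, fine-grained logging, the sketch below wraps each tool call and emits one JSON record with a trace ID, inputs, outputs, status, and duration. It uses only the standard library rather than the OpenTelemetry SDK, and the function and field names are assumptions, so treat it as a shape to adapt rather than a reference implementation.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("agent.trace")


def new_trace_id():
    """Create an ID that ties together all tool calls made for one request."""
    return uuid.uuid4().hex


def traced_call(trace_id, tool_name, func, args=(), kwargs=None, emit=logger.info):
    """Run a tool and emit one JSON record describing the call, even on failure."""
    kwargs = kwargs or {}
    record = {"trace_id": trace_id, "tool": tool_name, "input": repr((args, kwargs))}
    start = time.perf_counter()
    try:
        result = func(*args, **kwargs)
    except Exception as exc:
        record.update(status="error", error=f"{type(exc).__name__}: {exc}")
        raise
    else:
        record.update(status="ok", output=repr(result))
        return result
    finally:
        record["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
        emit(json.dumps(record))


# Usage: collect records in a list instead of the logger to inspect them.
collected = []
trace_id = new_trace_id()
total = traced_call(trace_id, "add", lambda a, b: a + b, args=(2, 3), emit=collected.append)
print(total, collected[0])
```

Because every record shares the same `trace_id`, a log backend can reconstruct the full sequence of tool calls behind a single agent response, which is what makes post-mortem analysis practical.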

Continuous Benchmarking and Feedback

Challenge: AI models can suffer from performance degradation (model drift) or regressions after updates, risking unreliable operations.

Improvement Strategies:

- Use evaluation kits to rigorously test agent performance on internal and live datasets pre- and post-deployment.

- Collect and integrate real-user feedback continuously into evaluation cycles.

- Automate evaluation pipelines within CI/CD workflows to detect and prevent regressions early.
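An automated evaluation step in CI can be as simple as scoring the agent on a fixed test set and failing the build when the score drops below the previous baseline. The sketch below assumes a substring match as the success criterion and uses a hypothetical `stub_agent` in place of a real agent; the test cases and tolerance are illustrative.

```python
def task_success_rate(agent, cases):
    """Fraction of cases whose expected text appears in the agent's answer."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    passed = sum(1 for query, expected in cases if expected.lower() in agent(query).lower())
    return passed / len(cases)


def passes_gate(candidate_rate, baseline_rate, tolerance=0.02):
    """Allow the release only if the candidate is within `tolerance` of the baseline."""
    return candidate_rate >= baseline_rate - tolerance


def stub_agent(query):
    """Hypothetical agent used to keep the example self-contained."""
    answers = {
        "Which tool packages the agent?": "Docker packages the agent and its dependencies.",
        "Which tool orchestrates containers?": "Kubernetes handles orchestration.",
        "What does RBAC stand for?": "Role-Based Access Control.",
    }
    return answers.get(query, "I don't know.")


CASES = [
    ("Which tool packages the agent?", "docker"),
    ("Which tool orchestrates containers?", "kubernetes"),
    ("What does RBAC stand for?", "role-based access control"),
    ("Which protocol do agents use to discover each other?", "a2a"),
]

rate = task_success_rate(stub_agent, CASES)
print(rate, passes_gate(rate, baseline_rate=0.80))
```

In a pipeline, a failed gate would typically end the job with a non-zero exit code so the deployment stage never runs. Substring matching is a deliberately crude metric; faithfulness or rubric-based scoring would slot into `task_success_rate` in the same way.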

Flexibility in Hosting and Deployment

Challenge: Enterprise environments require flexible deployment across cloud, on-premises, or hybrid infrastructures, which complicates rollout and scaling.

Improvement Strategies:

- Adopt containerization (Docker) to package agents with all dependencies for reproducibility.

- Use orchestration platforms (Kubernetes) to automate scaling, load balancing, and healing.

- Choose frameworks supporting multi-cloud and hybrid environments to maintain uptime and data governance options.

Emerging Deployment Trends

Multi-Agent Orchestration

The current paradigm shift involves moving from monolithic, single-task agents to distributed multi-agent systems (MAS). Enterprises are deploying dozens of specialized agents in collaborative networks that function as service-oriented architectures.

- Technical Mechanism: Collaboration is facilitated by the Agent-to-Agent (A2A) communication protocol, an open standard prominently advanced by Google and Salesforce.

- A2A Functionality: This protocol defines a structured, secure, and asynchronous method for agents, regardless of their underlying framework, to dynamically discover capabilities via Agent Cards (standardized JSON metadata) and delegate tasks via a well-defined Task Lifecycle. This promotes interoperability and the development of complex, cross-functional workflows.
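The discovery and delegation flow can be sketched with plain data structures. The example below is a simplified illustration inspired by the Agent Card and Task Lifecycle ideas: the card fields, URLs, and the reduced set of task states are assumptions and do not reproduce the actual A2A schema.

```python
from enum import Enum

# Hypothetical, simplified agent cards; the real A2A schema has more fields.
AGENT_CARDS = [
    {
        "name": "inventory-agent",
        "url": "https://agents.example.com/inventory",
        "skills": ["check_stock", "flag_stockout"],
    },
    {
        "name": "procurement-agent",
        "url": "https://agents.example.com/procurement",
        "skills": ["place_order", "adjust_order"],
    },
]


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"


# Allowed transitions in this reduced lifecycle; terminal states have none.
TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED},
    TaskState.WORKING: {TaskState.COMPLETED, TaskState.FAILED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
}


def find_agent(cards, skill):
    """Return the first card that advertises the requested skill, or None."""
    for card in cards:
        if skill in card["skills"]:
            return card
    return None


def advance(current, new):
    """Move a task to a new state, rejecting transitions the lifecycle forbids."""
    if new not in TRANSITIONS[current]:
        raise ValueError(f"cannot move task from {current.value} to {new.value}")
    return new


card = find_agent(AGENT_CARDS, "place_order")
state = advance(TaskState.SUBMITTED, TaskState.WORKING)
print(card["name"], state.value)
```

The point of the pattern is that the caller selects a peer by advertised capability rather than by hard-coded address, and both sides agree on which task states are valid, which is what allows agents built on different frameworks to cooperate.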

Open-Source Dominance

A substantial majority of enterprises, over 90%, are leveraging open-source agent frameworks like LangChain and AutoGen for production deployment.

Architectural Role of Frameworks:

- LangChain: Provides a modular toolkit for building LLM-powered chains and agents, focusing on the reasoning loop (deciding which tool to use) and robust integration with external data sources (RAG, databases, and APIs).

- AutoGen: Specifically emphasizes conversational orchestration and actor-model communication, enabling sophisticated, autonomous collaboration among a predefined crew of specialized agents.

Enterprise Benefit:

Adoption is driven by the need for greater transparency, lower vendor lock-in risk, and the flexibility required to customize agent behavior and integrate proprietary enterprise data and tools.

Visual and Low-Code Tools

There is a growing demand for high-level, low-code development environments such as Langflow and CrewAI.

- Deployment Impact: These tools abstract the complexity of building agent logic, often by providing visual graph interfaces (like in LangGraph or Langflow) to define the workflow, tool calling, and state transitions.

- Result: This shift accelerates the AI iteration cycle by enabling non-data scientists (e.g., business analysts or solution architects) to rapidly prototype and deploy complex, multi-step agent workflows.

Model-Agnostic Infrastructure

Modern agent orchestration layers are being designed with model-agnostic infrastructure at their core, abstracting the LLM provider from the agent logic.

- Technical Principle: This is achieved through a unified API layer that standardizes the interface for all models, decoupling the agent's control flow from the specific LLM used for reasoning.

- The Outcome: The architecture supports dynamic switching between LLMs (Claude, GPT, Gemini) at runtime. This enables optimization strategies such as routing low-complexity tasks to cheaper, lower-latency models and reserving complex reasoning for high-performance, higher-cost models, ultimately improving cost-efficiency and performance-per-task.
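A model-agnostic layer with cost-aware routing might look like the following sketch. Every model is exposed through the same callable interface, and a crude heuristic decides which tier handles a prompt. The stub model functions, tier names, keyword list, and word-count threshold are all assumptions for illustration; real routers usually rely on classifiers or measured task difficulty.

```python
import re


def fast_model(prompt):
    """Hypothetical stand-in for a cheaper, lower-latency model client."""
    return f"[fast] {prompt}"


def reasoning_model(prompt):
    """Hypothetical stand-in for a higher-cost, stronger reasoning model client."""
    return f"[reasoning] {prompt}"


# Unified interface: every provider is just a callable taking a prompt string.
MODELS = {"fast": fast_model, "reasoning": reasoning_model}

REASONING_KEYWORDS = {"why", "analyze", "compare", "explain", "plan"}


def pick_tier(prompt, max_fast_words=40):
    """Send long prompts or prompts with reasoning keywords to the stronger tier."""
    words = re.findall(r"[a-z]+", prompt.lower())
    if len(words) > max_fast_words or REASONING_KEYWORDS.intersection(words):
        return "reasoning"
    return "fast"


def route(prompt, models=MODELS):
    """Return the chosen tier and that model's response."""
    tier = pick_tier(prompt)
    return tier, models[tier](prompt)


print(route("What time is it?"))
print(route("Compare these two rollout plans and explain why one is safer."))
```

Because the agent logic only calls `route`, swapping a provider means changing one entry in `MODELS`, which is the decoupling the unified API layer is meant to provide.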

Conclusion

The shift to production-ready AI agents in 2025 represents a fundamental transformation in how organizations automate and scale their operations. Success requires more than selecting the right framework; it demands a holistic approach combining technical excellence, robust governance, and organizational readiness.

By following the deployment workflow outlined in this guide, leveraging proven frameworks like LangChain and Kubiya.ai, and adhering to best practices around modularity, observability, and continuous benchmarking, organizations can confidently move from experimentation to execution. The emerging trends in multi-agent orchestration, open-source adoption, and model-agnostic infrastructure signal that AI agents will only become more powerful and accessible.

The companies that thrive in 2025 and beyond will be those that start strategically, scale systematically, and maintain human oversight throughout the AI deployment lifecycle. AI is the enabler, but humans remain the driving force behind innovation, compliance, and competitive advantage.

FAQs

Q1: What is AI agent development?

AI agent development is the process of designing, building, and deploying autonomous software systems that can perceive their environment, make decisions, and take actions to achieve specific goals.

Q2: How are AI agents deployed?

AI agents are deployed by choosing a hosting environment (cloud or on-premises), containerizing the agent with Docker, automating deployment via CI/CD pipelines, and setting up monitoring for ongoing performance. Infrastructure setup, access controls (RBAC), and continuous monitoring ensure the agent remains operational and secure.

Q3: What are common challenges faced during AI agent deployment in 2025?

The main challenges in AI agent deployment in 2025 include data quality, integration complexity, fragmented teams, infrastructure gaps, balancing human-AI collaboration, and managing task complexity. Overcoming these requires clear planning, robust infrastructure, aligned use cases, and continuous monitoring.

Q4: What are best practices for AI agent deployment?

Best practices for AI agent deployment include starting small with a clear MVP to validate the concept, defining clear goals aligned with business objectives, and incorporating a human-in-the-loop system for oversight and complex tasks.

About the author

Amit Eyal Govrin

While overseeing strategic DevOps partnerships at AWS, Amit repeatedly encountered industry-leading DevOps companies struggling with similar pain points: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.