Top AI Agent Orchestration Frameworks for Developers in 2025

Amit Eyal Govrin

TL;DR

- Multi-agent teamwork: These frameworks enable multiple AI agents to work together smoothly on intricate workflows.

- Wide range of options: Frameworks such as LangChain, CrewAI, Ray, and AutoGen offer diverse capabilities, from NLP to enterprise automation.

- Built to scale: They help build scalable systems that recover from errors and reuse components, which is useful in customer service, finance, healthcare, and supply chains.

- Fits different users: Some target low-code/no-code users (Langflow), while others provide full developer control (Microsoft Agent Framework, Ray).

- Built for deployment: Features like monitoring, safety, and extensibility help ensure reliability in real-world AI projects.

As artificial intelligence continues to evolve in 2025, developers are no longer building single-task bots; they're designing AI ecosystems where multiple agents collaborate intelligently to perform complex workflows. From automating customer support to managing enterprise data pipelines, AI agent orchestration frameworks have become the backbone of scalable, autonomous systems.

These frameworks allow developers to connect, organize, and control multiple AI agents that think, communicate, and execute tasks together, much like a digital team. Whether you’re building a multi-agent assistant, a knowledge retrieval system, or an adaptive enterprise solution, choosing the right framework is key to performance, flexibility, and scalability.

The Role of AI Orchestration in Modern Enterprise AI

Consider a multinational financial institution that uses AI orchestration to streamline its risk assessment processes. Multiple AI agents analyze diverse data sources like transaction records, market trends, and regulatory updates. Orchestration ensures these agents collaborate in real time, sharing insights and updating risk models dynamically. This coordinated system automates complex decision-making workflows, reduces manual errors, accelerates compliance reporting, and allows the institution to respond swiftly to emerging risks, all while maintaining governance and scalability across global operations.

In this article, we explore the Top 10 AI Agent Orchestration Frameworks for Developers in 2025, from industry leaders like LangChain and CrewAI to emerging tools like Phidata and Langflow, highlighting their key features, ideal use cases, and what sets them apart in the rapidly evolving AI landscape.

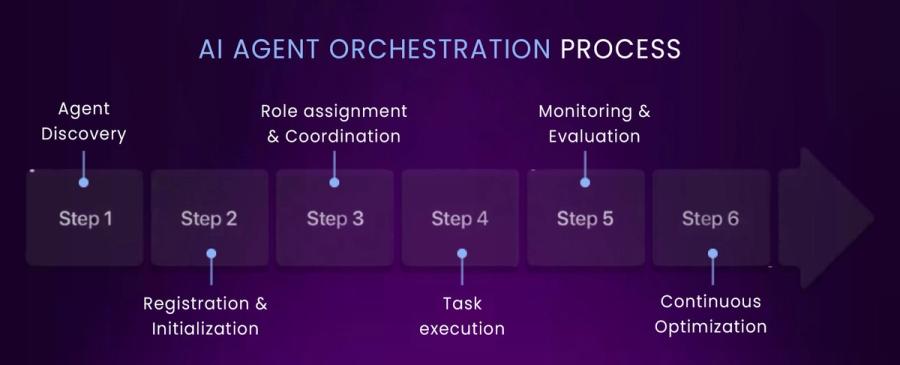

What Is AI Orchestration?

AI orchestration is the process of coordinating and managing different AI models, tools, agents, and systems so they work together effectively as a unified solution. Like a conductor leading an orchestra, the orchestrator connects components such as large language models, memory, planning modules, observability tools, and safety guardrails. In doing so, it automates workflows, synchronizes decision-making, and streamlines operations across an enterprise. This enables organizations to solve complex problems end-to-end, maximize efficiency, and keep their AI systems scalable, reliable, and responsive to evolving needs.

Why does this matter? Because isolated agents can only solve one piece of a problem. But most real-world problems span multiple steps and decisions. Orchestration turns a set of single-task agents into a system that thinks and acts holistically.

Orchestration enables more intelligent, multi-step workflows by improving coordination, adaptability, and sequencing. When the strengths of individual agents are combined under one orchestrated plan, the system can analyse and act as a whole. The result is more capable and reliable than any single agent on its own.

The Purpose of an Orchestrator Agent

The orchestrator agent is the strategic hub of a multi-agent system. It doesn’t simply monitor tasks but makes judgment calls, assigns responsibilities, resolves conflicts, and ensures agents work synergistically.

Rather than acting as a glorified scheduler, the orchestrator agent understands system-level objectives and context. It determines the order in which agents act, decides which agent is best suited to handle a particular input, and keeps the flow of information consistent across the system.

Suppose two agents propose conflicting actions: one wants to discount a product to boost sales, while another wants to raise prices because of inventory shortages. The orchestrator resolves the contradiction in light of company goals.
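One simple way an orchestrator could arbitrate such a conflict is to score each proposal against weighted company goals. The sketch below assumes that each agent attaches estimated impacts to its proposal. The `Proposal` class, goal names, weights, and impact numbers are all hypothetical. Real orchestrators, which are often LLM-based supervisors, may reason very differently.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    agent: str
    action: str
    # Estimated effect on each company goal, on a -1..1 scale (hypothetical numbers)
    impact: dict[str, float]


def resolve(proposals: list[Proposal], goal_weights: dict[str, float]) -> Proposal:
    """Pick the proposal whose weighted impact on company goals is highest."""
    def score(p: Proposal) -> float:
        return sum(goal_weights.get(goal, 0.0) * effect for goal, effect in p.impact.items())
    return max(proposals, key=score)


goals = {"revenue": 0.3, "margin": 0.5, "stock_availability": 0.2}
discount = Proposal("sales_agent", "discount product 10%",
                    {"revenue": 0.6, "margin": -0.4, "stock_availability": -0.5})
raise_price = Proposal("inventory_agent", "raise price 5%",
                       {"revenue": -0.1, "margin": 0.4, "stock_availability": 0.3})

winner = resolve([discount, raise_price], goals)
print(winner.agent, "->", winner.action)  # inventory_agent -> raise price 5%
```

With these weights, the discount scores -0.12 and the price increase scores 0.23. A revenue-only weighting would flip the decision, which is why the goal weights should come from explicit company policy.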

Its core responsibilities include:

- Distributing tasks dynamically based on current system state and priorities

- Sequencing agent actions to respect dependencies and shared goals

- Handling fallbacks and escalations when agents encounter errors or ambiguities

- Ensuring that all agents operate in a synchronised, up-to-date context

- Modifying workflows in real-time as new data and user inputs emerge
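The responsibilities above can be sketched in plain Python. This is a toy, framework-agnostic model, and all of its names are hypothetical:

- Agents are plain functions registered under a capability.
- `graphlib.TopologicalSorter` sequences tasks by their dependencies.
- Every agent reads and writes one shared context.
- A failing agent falls back to the next agent registered for the same capability, and the task escalates when none succeeds.

```python
from graphlib import TopologicalSorter
from typing import Callable

# An agent reads the shared context and returns its result (hypothetical interface)
Agent = Callable[[dict], str]


class Orchestrator:
    def __init__(self) -> None:
        # capability -> agents in order of preference (first is primary, rest are fallbacks)
        self.agents: dict[str, list[Agent]] = {}

    def register(self, capability: str, agent: Agent) -> None:
        self.agents.setdefault(capability, []).append(agent)

    def run(self, tasks: dict[str, str], deps: dict[str, set[str]]) -> dict:
        """tasks: task name -> required capability; deps: task name -> prerequisite tasks."""
        context: dict = {"errors": []}  # shared, up-to-date context every agent sees
        graph = {task: deps.get(task, set()) for task in tasks}
        for task in TopologicalSorter(graph).static_order():  # respects dependencies
            for agent in self.agents.get(tasks[task], []):
                try:
                    context[task] = agent(context)
                    break  # first agent that succeeds wins
                except Exception as exc:
                    context["errors"].append(f"{task}: {exc}")  # fall back to the next agent
            else:
                context[task] = "ESCALATED_TO_HUMAN"  # no agent could handle the task
        return context


def flaky_pricing(ctx: dict) -> str:
    raise TimeoutError("pricing API timed out")


orch = Orchestrator()
orch.register("fetch", lambda ctx: "Q3 sales data")
orch.register("price", flaky_pricing)  # primary agent fails...
orch.register("price", lambda ctx: f"cached price for {ctx['fetch']}")  # ...fallback succeeds
orch.register("report", lambda ctx: f"report on {ctx['price']}")

result = orch.run(
    tasks={"fetch": "fetch", "price": "price", "report": "report", "legal": "legal_review"},
    deps={"price": {"fetch"}, "report": {"price"}},
)
print(result["report"])  # report on cached price for Q3 sales data
print(result["legal"])   # ESCALATED_TO_HUMAN (no agent registered for legal_review)
print(result["errors"])  # ['price: pricing API timed out']
```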

Ultimately, the orchestrator transforms a collection of intelligent parts into an intelligent whole.

Best AI Agent Orchestration Frameworks:

As AI evolves, orchestrating multiple intelligent agents into cohesive ecosystems has become essential for handling complex workflows.

1. Kubiya

Kubiya is an enterprise-grade AI agent orchestration platform. It is designed primarily for engineering and DevOps teams, who use natural language commands and intelligent agents to automate workflows across cloud, CI/CD, observability, and infrastructure systems.

Key Features

- Enterprise-grade AI orchestration tailored for engineering and DevOps

- Natural language command interface (Slack, Teams, Web)

- Deep integration with cloud, CI/CD, observability, infrastructure tools

- Context-aware, deterministic AI workflows with full audit and governance

- Visual workflow builder and modular agent design

- Security features: RBAC, SSO, just-in-time access, audit logging.

Pros:

- Enterprise-grade security with RBAC, SSO, and audit logging.

- Natural language command interface integrated with Slack and Teams.

- Deep integration with cloud infrastructure, CI/CD, and observability tools.

Cons:

- Primarily focused on engineering and DevOps use cases.

- Requires setup of complex workflows for maximum effectiveness.

- Enterprise pricing and deployment may be costly for smaller teams.

Getting Started with Kubiya

- Sign up or request enterprise deployment from Kubiya’s official site.

- Integrate Kubiya with Slack, Teams, or web console for natural language interaction.

- Connect your cloud (AWS, GCP, Azure), CI/CD, and observability tools.

- Use the visual workflow builder to create and configure pipelines.

- Set up security (RBAC, SSO, audit logging).

- Test workflows in development environments before production deployment.

Kubiya is primarily used through its enterprise console and API integrations, so code typically involves API calls or configuration.
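The following is a hedged sketch of such an API call, which triggers a Terraform-based workflow over HTTP. Everything Kubiya-specific here is hypothetical: the base URL, endpoint path, payload fields, and workflow name are placeholders, not Kubiya's documented API. Check Kubiya's API reference before adapting it. The request is built with the standard library but not sent, so you can inspect it without credentials.

```python
import json
import os
import urllib.request

# Hypothetical endpoint; consult Kubiya's API reference for the real URL and schema.
KUBIYA_API_URL = "https://api.kubiya.example/v1/workflows/execute"


def build_terraform_request(project: str, environment: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking Kubiya to run a Terraform workflow."""
    payload = {
        "workflow": "provision-and-deploy",  # hypothetical workflow name
        "params": {"project": project, "environment": environment},
    }
    return urllib.request.Request(
        KUBIYA_API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_terraform_request("ProjectX", "staging", os.environ.get("KUBIYA_API_KEY", "dummy-key"))
print(req.get_method(), req.full_url)
# Sending it requires a real endpoint and key: urllib.request.urlopen(req)
```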

How This Works in Practice Inside an Enterprise Deployment: Kubiya

A global fintech company uses Kubiya to automate cloud infrastructure and CI/CD pipelines. Engineers issue natural language commands in Slack like “Provision staging environment for Project X with Terraform.” Kubiya agents orchestrate provisioning, enforce compliance policies, monitor deployments through Prometheus, and automatically open JIRA tickets for failed builds. This reduces manual toil, accelerates deployment times, and maintains audit compliance.

Below is a concise workflow definition that models Kubiya's automation for cloud provisioning and CI/CD, as described in the enterprise scenario. It is illustrative: the module name and builder methods (tool, command, depends, condition) may not match the current Kubiya SDK exactly, so verify them against Kubiya's SDK documentation.

```python
# Illustrative sketch: verify module and method names against Kubiya's SDK docs.
from kubiya_workflow_sdk import Workflow, Step

# Workflow: provision and deploy with compliance checks, monitoring, and auto-ticketing
workflow = Workflow(
    name="provision-and-deploy",
    description="Provision environment, enforce compliance, monitor, and auto-ticket failures",
)

# Step 1: Provision the staging environment with Terraform
workflow.add_step(
    Step("provision")
    .tool("terraform")
    .command("terraform apply -auto-approve -var project=ProjectX")
)

# Step 2: Enforce compliance policy (check_compliance.py stands in for your own policy check)
workflow.add_step(
    Step("compliance")
    .tool("python")
    .command("python check_compliance.py --env staging")
    .depends(["provision"])
)

# Step 3: Validate the Prometheus alerting rules that monitor the deployment
# (promtool only checks rule files; live monitoring happens in Prometheus itself)
workflow.add_step(
    Step("monitor")
    .tool("prometheus")
    .command("promtool check rules /etc/prometheus/alerts.yaml")
    .depends(["compliance"])
)

# Step 4: Open a JIRA ticket only if the preceding step failed
# (the CLI syntax depends on the Jira client you use)
workflow.add_step(
    Step("auto-ticket")
    .tool("jira")
    .command("jira create issue --project=DEVOPS --summary='Deployment failed in ProjectX staging'")
    .condition("previous_step_failed")
    .depends(["monitor"])
)
```

Engineers can then trigger the workflow from Slack with a command such as `/k provision staging for Project X`, and Kubiya handles provisioning, compliance checks, monitoring, and JIRA ticketing automatically.

2. LangChain

LangChain is a modular Python framework that helps developers build applications powered by large language models (LLMs) through abstraction layers for models, prompts, memory, and workflow orchestration.

Key Features

- Modular Python framework for LLM-powered applications

- Abstractions for models, prompts, memory, and chains

- Supports complex multi-step workflows and multi-agent orchestration

- Seamless integration with GPT, Claude, Gemini, and open-source LLMs

- Strong community and extensible ecosystem

- Ideal for document processing, knowledge workflows, and chatbots.

Pros:

- Highly modular and flexible for custom LLM-based workflows.

- Strong open-source community and ecosystem support.

- Supports complex, multi-agent orchestration across diverse applications.

Cons:

- Requires Python programming expertise.

- Can have a steep learning curve for newcomers.

- Reliability depends on external LLM providers' performance.

Getting Started with LangChain

- Install LangChain with pip: pip install langchain, plus a provider package such as langchain-openai.

- Study LangChain abstractions: LLMs, prompts, memory, chains, and agents.

- Obtain API keys from LLM providers like OpenAI or Anthropic.

- Build simple prompt chains, then evolve to complex multi-agent workflows.

- Utilize community resources and example projects for guidance.

How This Works in Practice Inside an Enterprise Deployment: LangChain

A multinational law firm processes thousands of contracts monthly. Using LangChain, they automate contract classification, summarization, and key clause extraction. Multi-agent LangChain systems enable customer support agents to query contract statuses and terms in real-time, enhancing service quality and reducing legal costs while ensuring consistent information retrieval.

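The following is a minimal LangChain sketch that covers contract classification, summarization, and clause extraction. It uses three prompt chains, so it is a simple pipeline rather than a set of autonomous agents. It assumes the langchain-openai package is installed and OPENAI_API_KEY is set, and contract_text is a placeholder for your own document.

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-4")  # reads OPENAI_API_KEY from the environment
parser = StrOutputParser()       # converts chat messages into plain strings

contract_text = "..."  # placeholder: load the contract text here

# Classify contract
classify = PromptTemplate.from_template("Classify this contract: {text}") | llm | parser
contract_type = classify.invoke({"text": contract_text})

# Summarize contract
summarize = PromptTemplate.from_template("Summarize this contract: {text}") | llm | parser
summary = summarize.invoke({"text": contract_text})

# Extract a specific clause
extract = PromptTemplate.from_template("Extract the {clause} clause from: {text}") | llm | parser
termination = extract.invoke({"text": contract_text, "clause": "termination"})
```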

3. CrewAI

CrewAI is an enterprise-grade multi-agent framework that organizes modular agent teams, called “Crews”, which operate through coordinated workflows known as “Flows”.

Key Features

- Enterprise-grade multi-agent orchestration using “Crews” and “Flows”

- Modular, reusable agent teams executing coordinated workflows

- Secure, encrypted inter-agent communication.

- Centralized dashboard for orchestration visibility.

- Supports synchronous and asynchronous task execution.

Pros:

- Modular agent teams (“Crews”) enable reusable workflows.

- A centralized dashboard provides excellent monitoring and control.

- Secure, encrypted inter-agent communications.

Cons:

- Less mature than some other frameworks; smaller community.

- Enterprise deployment and onboarding can be complex.

- Limited public documentation compared to open-source rivals.

Getting Started with CrewAI

- Access CrewAI via GitHub or enterprise onboarding.

- Define “Crews” (agent teams) and “Flows” (workflows) using SDK or UI.

- Enable encrypted communication between agents.

- Use CrewAI’s centralized dashboard for monitoring.

- Test synchronous and asynchronous multi-agent workflows.

- Integrate with organizational data systems and triggers.

How This Works in Practice Inside an Enterprise Deployment: CrewAI

A retail conglomerate deploys CrewAI to automate coordination between marketing, finance, and logistics departments. Agent “Crews” analyze sales data, optimize inventory, and predict demand in “Flows” executed asynchronously. Secure telemetry data sharing and monitoring dashboards improve transparency and efficiency, enabling rapid response to market changes.

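Here’s a minimal CrewAI sketch of a multi-agent “crew” for interdepartmental decision-making, mirroring the enterprise deployment described above. It assumes a default LLM is configured (for example, via OPENAI_API_KEY). The backstories and expected outputs are illustrative.

```python
from crewai import Agent, Crew, Process, Task

# One agent per department (CrewAI requires role, goal, and backstory)
marketing = Agent(role="Marketing", goal="Analyze sales trends",
                  backstory="Retail marketing analyst focused on demand signals")
finance = Agent(role="Finance", goal="Optimize budget",
                backstory="Corporate finance lead balancing spend and margin")
logistics = Agent(role="Logistics", goal="Predict inventory demand",
                  backstory="Supply-chain planner who forecasts stock levels")

# Independent tasks run asynchronously; {period} is filled in from kickoff inputs
analyze_sales = Task(description="Analyze sales data for {period}",
                     expected_output="Key sales trends", agent=marketing,
                     async_execution=True)
predict = Task(description="Forecast inventory needs for {period}",
               expected_output="Inventory forecast by category", agent=logistics,
               async_execution=True)
# The budget task waits for both asynchronous results via context
budgeting = Task(description="Recommend budget allocation for {period}",
                 expected_output="Budget allocation by department", agent=finance,
                 context=[analyze_sales, predict])

crew = Crew(
    agents=[marketing, finance, logistics],
    tasks=[analyze_sales, predict, budgeting],
    process=Process.sequential,  # asynchrony is configured per task, not per process
)

results = crew.kickoff(inputs={"period": "Q4-2025"})
print(results)
```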
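To make the asynchronous part concrete without any framework, here is a plain asyncio analogy. It is not CrewAI code. The two independent department tasks run concurrently, and the dependent budget step starts only after both finish. The department functions and their sleeps are hypothetical stand-ins for LLM calls.

```python
import asyncio


async def analyze_sales(period: str) -> str:
    await asyncio.sleep(0.2)  # stand-in for an LLM call
    return f"sales trends for {period}"


async def forecast_inventory(period: str) -> str:
    await asyncio.sleep(0.2)  # stand-in for an LLM call
    return f"inventory forecast for {period}"


async def recommend_budget(sales: str, inventory: str) -> str:
    return f"budget based on {sales} and {inventory}"


async def run_crew(period: str) -> str:
    # Independent tasks run concurrently, like tasks marked async_execution=True
    sales, inventory = await asyncio.gather(analyze_sales(period), forecast_inventory(period))
    # The dependent task runs only once both results exist, like context=[...]
    return await recommend_budget(sales, inventory)


print(asyncio.run(run_crew("Q4-2025")))
```

Because the two sleeps overlap, the whole run takes roughly 0.2 seconds instead of 0.4. This is the same benefit that asynchronous task execution offers when the department analyses are independent.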

4. Ray

Ray is an open-source distributed computing framework designed for large-scale AI workloads, enabling efficient parallelism across ML, simulation, and training systems.

Key Features

- Open-source distributed computing for large-scale AI workloads

- Fault-tolerant and highly scalable parallelism

- Ideal for ML training, reinforcement learning, simulations

- Integrates with Python ML libraries and cloud platforms

- Enables scaling from laptops to large clusters seamlessly.

Pros:

- Highly scalable distributed computing for heavy AI workloads.

- Strong support for RL, simulations, and large-scale ML training.

- Integrates well with Python ML ecosystem and cloud providers.

Cons:

- Complex setup and management for non-experts.

- Focused primarily on ML workloads, less on chat or NLP workflows.

- Resource intensive, requiring significant infrastructure investment.

Getting Started with Ray

- Install Ray: pip install ray, or pip install "ray[rllib]" for reinforcement learning.

- Follow tutorials for distributed computing and ML training.

- Set up local or cloud environments (AWS, GCP).

- Build scalable distributed workflows and reinforcement learning models.

- Use Ray’s libraries for hyperparameter tuning and model serving.

- Monitor workloads with Ray dashboard.

How This Works in Practice Inside an Enterprise Deployment: Ray

An autonomous vehicle company leverages Ray for reinforcement learning across distributed robot fleets. Training simulations scale across cloud GPU clusters. Ray manages fault recovery and hyperparameter tuning, enabling rapid iteration of driving policies. Integration with Python ML libraries accelerates model updates and deployment.

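Below is a minimal Ray RLlib training loop for the scenario above. It uses the toy CartPole-v1 environment as a stand-in for an autonomous-driving simulator and assumes RLlib is installed (pip install "ray[rllib]").

```python
import ray
from ray.rllib.algorithms.ppo import PPOConfig

ray.init()

# CartPole is a stand-in; a real project would register its own driving simulator env
config = PPOConfig().environment("CartPole-v1")
algo = config.build()  # newer Ray releases prefer config.build_algo()

for i in range(3):
    algo.train()  # one PPO training iteration
    print(f"iteration {i} done")

algo.stop()
ray.shutdown()
```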

5. AutoGen

AutoGen is a Microsoft Research project that orchestrates multiple intelligent agents collaborating dynamically to solve complex tasks.

Key Features

- Microsoft Research’s multi-agent framework for complex tasks

- Extensible, adaptive AI agents with plug-and-play roles

- Peer-to-peer communication and dynamic workflow orchestration

- Suitable for interactive, evolving, context-aware systems

- Supports human-in-the-loop collaboration.

Pros:

- Adaptive, peer-to-peer multi-agent orchestration.

- Supports human-in-the-loop workflows for sensitive tasks.

- Lightweight and extensible for interactive AI applications.

Cons:

- Still evolving with limited industry adoption.

- Requires integration effort for enterprise workflows.

- Documentation and examples are less comprehensive.

Getting Started with AutoGen

- Clone AutoGen from Microsoft Research’s GitHub repo.

- Define agent roles and enable peer-to-peer communication.

- Integrate human-in-the-loop interaction using SDK features.

- Build and refine adaptive workflows responsive to dynamic contexts.

- Test interactive agent collaborations locally.

How This Works in Practice Inside an Enterprise Deployment: AutoGen

A healthcare provider employs AutoGen to build AI-assisted diagnostic tools. Multi-agent systems dynamically adjust workflows based on patient data changes, with human doctors reviewing AI analyses. Peer-to-peer agent communication coordinates lab results, medical history, and treatment suggestions ensuring personalized and compliant care.

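The following AutoGen sketch models the healthcare scenario, in which agents coordinate diagnostics, lab results, and treatment, with human-in-the-loop review. It is written against the classic AutoGen 0.2-style GroupChat API (the pyautogen/ag2 packages); AutoGen 0.4+ uses a different autogen_agentchat API. It assumes an OpenAI key in OPENAI_API_KEY, and the agents' system messages are illustrative.

```python
import os

from autogen import AssistantAgent, GroupChat, GroupChatManager, UserProxyAgent

llm_config = {"config_list": [{"model": "gpt-4", "api_key": os.environ["OPENAI_API_KEY"]}]}

# Doctor: a human proxy who reviews and approves every AI suggestion
doctor = UserProxyAgent(
    "doctor",
    human_input_mode="ALWAYS",    # prompt the human on each turn
    code_execution_config=False,  # no code execution in this workflow
)

# AI agents for diagnostics, labs, and treatment
diagnostic_agent = AssistantAgent("diagnostics", llm_config=llm_config,
                                  system_message="Suggest differential diagnoses.")
lab_agent = AssistantAgent("lab_results", llm_config=llm_config,
                           system_message="Interpret lab results and flag abnormalities.")
treatment_agent = AssistantAgent("treatment", llm_config=llm_config,
                                 system_message="Recommend treatments for the doctor to review.")

# GroupChat coordinates turn-taking across agents; the doctor acts as admin
groupchat = GroupChat(
    agents=[doctor, diagnostic_agent, lab_agent, treatment_agent],
    messages=[],
    max_round=8,
    admin_name="doctor",
)
manager = GroupChatManager(groupchat=groupchat, llm_config=llm_config)

# The physician starts the workflow for a new patient case
result = doctor.initiate_chat(
    manager,
    message="Patient A: abnormal labs. Suggest a diagnosis, interpret labs, "
            "and recommend treatment for doctor review.",
)
print(result.summary)
```

In this setup, AutoGen agents take turns processing data, suggesting actions, and sharing insights, while a human doctor provides oversight. This mirrors real-world, compliant diagnostic automation.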
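The human-in-the-loop idea does not depend on any particular AutoGen version. Below is a framework-agnostic sketch in which all names are hypothetical. AI suggestions pass through a review gate, and only suggestions that a reviewer callback approves move forward.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Suggestion:
    agent: str
    text: str


def review_gate(suggestions: list[Suggestion],
                approve: Callable[[Suggestion], bool]) -> tuple[list[Suggestion], list[Suggestion]]:
    """Split AI suggestions into (approved, rejected) using a human review callback."""
    approved, rejected = [], []
    for s in suggestions:
        (approved if approve(s) else rejected).append(s)
    return approved, rejected


suggestions = [
    Suggestion("diagnostics", "Possible iron-deficiency anemia"),
    Suggestion("treatment", "Start oral iron supplements"),
    Suggestion("treatment", "Schedule immediate surgery"),
]
# Stand-in for the doctor: in practice this callback would prompt a human, e.g. via input()
approved, rejected = review_gate(suggestions, lambda s: "surgery" not in s.text.lower())
print([s.text for s in approved])
```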

6. Microsoft Agent Framework

Microsoft’s Agent Framework merges Semantic Kernel and AutoGen, offering an open-source SDK for building, deploying, and orchestrating enterprise multi-agent systems on Azure AI Foundry.

Key Features

- Combines Semantic Kernel and AutoGen for enterprise multi-agent systems

- Open-source SDK built on Azure AI Foundry

- Enterprise scalability, security, and governance

- Deep Azure cloud integration

- Pre-built modules and APIs for rapid development

Pros:

- Deep integration with Azure ecosystem and enterprise tools.

- Pre-built AI modules speed up development.

- Robust security and governance features for regulated industries.

Cons:

- Best suited for organizations committed to Microsoft Azure.

- Higher complexity due to enterprise-grade features.

- Smaller open-source community outside Microsoft ecosystem.

Getting Started with Microsoft Agent Framework

- Prepare Azure AI Foundry environment per Microsoft documentation.

- Install open-source SDK combining Semantic Kernel and AutoGen.

- Deploy using pre-built modules for enterprise workflows.

- Configure security and governance aligned with company policies.

- Manage lifecycle with Azure DevOps pipelines.

How This Works in Practice Inside an Enterprise Deployment: Microsoft Agent Framework

A bank uses the Microsoft Agent Framework to automate compliance reporting and risk assessments. Agents ingest regulatory documents, perform intelligent contract reviews, and generate audit reports. Integration with Azure AI ensures scalability and adheres to strict security and governance mandates, supporting hybrid cloud environments.

The following sketch shows the core logic of a compliance agent for the bank scenario, using Azure AI integration for compliance reporting and risk assessment. It is written as a plain Python class that calls Azure AI Text Analytics (the azure-ai-textanalytics and azure-identity packages). Registering the agent with the Microsoft Agent Framework's agent and orchestration APIs is not shown; consult the framework's documentation for that step. The endpoint is a placeholder, and the risk assessment itself is mocked.

```python
from azure.ai.textanalytics import TextAnalyticsClient
from azure.identity import DefaultAzureCredential

# Initialize the Azure Text Analytics client (replace the placeholder endpoint)
credential = DefaultAzureCredential()
text_analytics_client = TextAnalyticsClient(
    endpoint="https://<your-endpoint>.cognitiveservices.azure.com/",
    credential=credential,
)


class ComplianceAgent:
    """Ingests regulatory documents, reviews them, and produces audit reports."""

    def __init__(self, client: TextAnalyticsClient) -> None:
        self.client = client

    def analyze_document(self, document_text: str) -> str:
        # analyze_sentiment takes a list of documents and returns one result per document
        result = self.client.analyze_sentiment([document_text])[0]
        if result.is_error:
            return f"Analysis failed: {result.error}"
        # Intelligent contract review and risk scoring are mocked here
        return f"Tone: {result.sentiment}; Risk: Low; Compliance: Satisfactory"

    def generate_report(self, compliance_data: str) -> str:
        return f"Audit Report: {compliance_data}"


# Example usage
document = "Regulatory document text about banking compliance..."
compliance_agent = ComplianceAgent(text_analytics_client)
assessment = compliance_agent.analyze_document(document)
report = compliance_agent.generate_report(assessment)
print(report)
```

This example shows an agent that uses Azure AI services to analyze documents, assess compliance risks, and generate audit reports. In a full deployment, the Microsoft Agent Framework would orchestrate such agents to support secure, scalable enterprise workflows in hybrid cloud environments.

7. LlamaIndex

LlamaIndex bridges LLMs and external data sources like PDFs, APIs, or databases providing semantic indexing and retrieval for enterprise data.

Key Features

- Bridges LLMs and external structured/unstructured data sources

- Semantic indexing and retrieval for enterprise knowledge bases

- Supports PDFs, APIs, databases, and vector search

- Enables contextual querying and document understanding

- Enhances enterprise search and data exploration systems.

Pros:

- Bridges LLMs with diverse structured and unstructured data.

- Enhances semantic search and document understanding.

- Supports multiple data types and large-scale knowledge bases.

Cons:

- Limited real-time data updating; manual refresh needed.

- Requires LLM provider integration knowledge.

- May need fine-tuning for highly specialized enterprise data.

Getting Started with LlamaIndex

- Install via Python: pip install llama-index.

- Connect documents, APIs, or databases for ingestion.

- Create semantic indexes for knowledge retrieval.

- Experiment with example applications for Q&A and search.

- Integrate with your preferred large language models.

How This Works in Practice Inside an Enterprise Deployment: LlamaIndex

An R&D organization utilizes LlamaIndex to index terabytes of internal research papers, experimental data, and patents. Custom semantic queries enable researchers to discover relevant work quickly, supporting innovation cycles. Integrated with enterprise LLMs, the system automates summarization and Q&A workflows over vast data.

The following sketch shows how LlamaIndex can be used in an enterprise deployment to index large research datasets and enable semantic search, summarization, and Q&A over internal documents. It uses the current llama_index.core API (pip install llama-index) and assumes an OPENAI_API_KEY for both the LLM and the default embedding model. The directory path is a placeholder.

```python
from llama_index.core import Settings, SimpleDirectoryReader, VectorStoreIndex
from llama_index.llms.openai import OpenAI

# Load documents from the local enterprise knowledge base
documents = SimpleDirectoryReader("path/to/research-papers").load_data()

# Use GPT-4 for answer synthesis (embeddings default to an OpenAI model)
Settings.llm = OpenAI(model="gpt-4")

# Build a vector store index over the documents
# (the default in-memory store suits a demo; large corpora need an external vector store)
index = VectorStoreIndex.from_documents(documents)

# Query interface for semantic search and summarization
query_engine = index.as_query_engine()

# Example query: summarize the latest findings on a topic
response = query_engine.query("Summarize recent advances in quantum computing hardware")
print(response.response)
```

This example illustrates how to ingest enterprise research content, create a vector index with LlamaIndex, and run semantic queries with LLM-powered summarization. That combination suits R&D teams that need fast knowledge discovery.
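Under the hood, a vector index maps text to vectors and retrieves the chunks closest to a query. The toy sketch below shows that idea using only the standard library. It substitutes simple bag-of-words counts for the learned embeddings that LlamaIndex actually uses, so it captures word overlap rather than true semantic similarity.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (real systems use neural embeddings)
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    def __init__(self, docs: list[str]) -> None:
        self.docs = docs
        self.vectors = [embed(d) for d in docs]  # "indexing" = embedding every chunk once

    def query(self, question: str, top_k: int = 1) -> list[str]:
        q = embed(question)
        ranked = sorted(zip(self.docs, self.vectors),
                        key=lambda dv: cosine(q, dv[1]), reverse=True)
        return [doc for doc, _ in ranked[:top_k]]


index = ToyVectorIndex([
    "Superconducting qubits improved coherence times in new quantum hardware.",
    "Patent filing on lithium battery electrolyte additives.",
    "Lab notes on protein folding experiments.",
])
print(index.query("quantum hardware advances"))
```

In a real retrieval-augmented pipeline, the retrieved chunks are then inserted into the LLM prompt for summarization or Q&A.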

8. Smolagents

Smolagents, from Hugging Face, is a simple, lightweight library for quickly building LLM-driven agents that call external tools. Its CodeAgent writes its actions as Python code.

Key Features

- Lightweight, developer-friendly agent toolkit from Hugging Face

- Built-in tools, plus custom tools defined with a simple @tool decorator

- Easy integration with Hugging Face models and APIs

- Quick deployment of functional LLM-driven agents

- Suited for text classification, summarization, and assistants.

Pros:

- Lightweight and quick to deploy for NLP tasks.

- Access to thousands of pre-trained NLP models via Hugging Face.

- Simple integration and prototyping process.

Cons:

- Limited to NLP tasks, less suitable for complex orchestration.

- Computational cost can be high for large-scale models.

- Lacks enterprise-grade features like governance and security.

Getting Started with Smolagents

- Install the library: pip install smolagents.

- Choose a model backend and the tools your agents need.

- Integrate with Hugging Face models and APIs.

- Prototype quick, lightweight NLP agents.

- Customize agents for classification or summarization tasks.

How This Works in Practice Inside an Enterprise Deployment: Smolagents

A software SaaS company deploys Smolagents-powered assistants to automatically classify and route customer tickets. Text summarization agents create condensed case notes for support staff. The lightweight toolkit enables quick adaptation to evolving support themes, improving team responsiveness and customer satisfaction.

The following sketch shows how Smolagents could be used in an enterprise SaaS support deployment to classify and summarize customer tickets automatically. The ticket logic is mocked with simple rules and wrapped as Smolagents tools using the @tool decorator. Handing those tools to a CodeAgent lets an LLM decide when to call them. InferenceClientModel (called HfApiModel in older releases) assumes a Hugging Face token is configured.

```python
from smolagents import CodeAgent, InferenceClientModel, tool


@tool
def classify_ticket(text: str) -> str:
    """Classify a support ticket by intent (mocked with keyword rules).

    Args:
        text: The raw ticket text.
    """
    lowered = text.lower()
    if "refund" in lowered:
        return "Refund"
    if "bug" in lowered:
        return "Bug Report"
    return "General Inquiry"


@tool
def summarize_ticket(text: str) -> str:
    """Produce a short summary of a support ticket (mocked by truncation).

    Args:
        text: The raw ticket text.
    """
    return text[:100] + "..."


ticket_text = "Customer reports a bug in the payment system where the transaction fails intermittently."

# Tools can be called directly for deterministic routing...
print(f"Classification: {classify_ticket(ticket_text)}")
print(f"Summary: {summarize_ticket(ticket_text)}")

# ...or handed to an LLM-driven agent that decides when to call them
agent = CodeAgent(tools=[classify_ticket, summarize_ticket], model=InferenceClientModel())
print(agent.run(f"Classify and summarize this ticket: {ticket_text}"))
```

This example shows how lightweight Smolagents agents can classify ticket types and generate condensed summaries, enabling automated routing and improved support efficiency in SaaS environments.

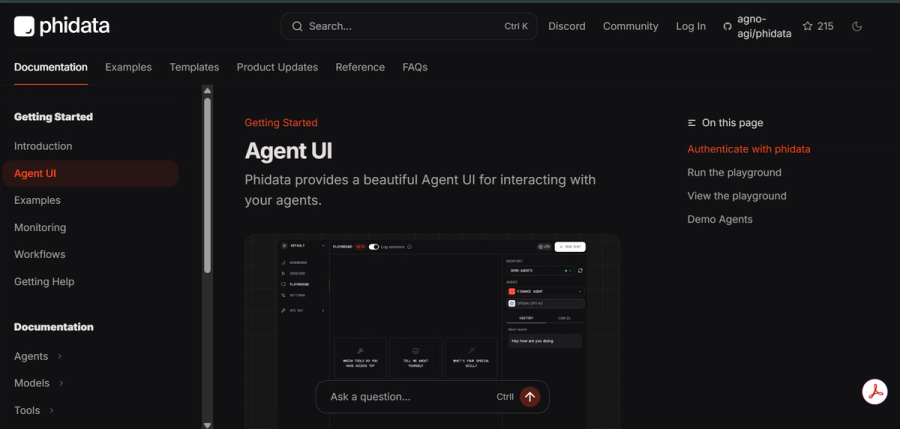

9. Phidata

Phidata is an open-source framework for building multimodal AI agents that handle text, image, and video data with built-in support for memory and tool integration.

Key Features

- Open-source multimodal AI agent framework supporting text, image, and video

- Built-in memory and tool integration for retrieval-augmented generation (RAG)

- Modular agent components for data crawling and content analysis

- Cloud-agnostic with fast prototyping support

- Ideal for multimodal chatbots and intelligent search assistants.

Pros:

- Supports multimodal AI workflows (text, image, video).

- Built-in memory and knowledge retrieval (RAG) capabilities.

- Cloud-agnostic and modular for flexible deployments.

Cons:

- Not designed for complex multi-agent orchestration.

- Requires multimodal data expertise to utilize fully.

- Smaller community and ecosystem support.

Getting Started with Phidata

- Clone or install Phidata repository.

- Configure agents to handle text, images, and video inputs.

- Use built-in memory and tool integration for advanced knowledge retrieval.

- Develop modular agents for crawling and analysis.

- Deploy agents on cloud or local infrastructure.

How This Works in Practice Inside an Enterprise Deployment: Phidata

A media company leverages Phidata for managing and searching across video archives, images, and transcripts. Multimodal AI agents enable content discovery by combining textual and visual cues, improving editorial workflows and rights management. Built-in memory and tool integration support dynamic content analysis.

Here’s a concise conceptual snippet illustrating this. It is pseudocode: MultimodalAgent, MediaIndexer, and ContentSearchEngine are hypothetical names used for illustration, not classes exported by the Phidata package. See the Phidata documentation for its actual agent API.

```python
# Conceptual pseudocode: MultimodalAgent, MediaIndexer, and ContentSearchEngine
# are hypothetical names, not part of the Phidata package's API.
from phidata import MultimodalAgent, MediaIndexer, ContentSearchEngine

# Initialize a media indexer over videos, images, and transcripts
indexer = MediaIndexer(data_sources=["/media/videos", "/media/images", "/media/transcripts"])

# Create a multimodal AI agent combining text and visual analysis
agent = MultimodalAgent(
    indexer=indexer,
    memory=True,  # enable built-in memory for context retention across queries
    tool_integrations=["metadata_extractor", "face_recognition_tool"],
)

# Initialize a search engine backed by the multimodal agent
search_engine = ContentSearchEngine(agent=agent)

# Query combining textual and visual context
query = "Find all interviews with CEO discussing quarterly results where company logo appears"

# Run the multimodal search
results = search_engine.search(query)
print(results)
```

This example highlights how Phidata agents can enable integrated multimodal content discovery. They index heterogeneous media and support complex queries, which streamlines editorial decisions and rights management at scale.

10. Langflow

Langflow is a visual, low-code/no-code platform for creating AI workflows built on LangChain’s architecture, enabling drag-and-drop orchestration of LLM chains.

Key Features

- Visual, low-code/no-code AI workflow design platform

- Drag-and-drop orchestration built on LangChain’s architecture

- Supports integration of multiple LLMs and open-source models

- Enables non-technical users to build and prototype AI workflows

- Good for rapid prototyping of chatbots, document parsers, and pipelines.

Pros:

- Visual, low-code/no-code AI workflow builder accessible to non-technical users.

- Integrates multiple LLMs and open-source models.

- Enables rapid prototyping and collaboration.

Cons:

- Limited advanced control compared to code-based frameworks.

- Can be complex for absolute beginners.

- Not ideal for large-scale production orchestration.

Getting Started with Langflow

- Install Langflow: pip install langflow.

- Use its drag-and-drop interface for building AI workflows.

- Integrate with multiple LLMs and open-source models.

- Create workflows visually for chatbots, document analysis, and pipelines.

- Export and deploy workflows to production or use for rapid prototypes.

How This Works in Practice Inside an Enterprise Deployment: Langflow

A marketing agency uses Langflow’s drag-and-drop interface to prototype chatbots and document analysis pipelines without deep coding. Campaign managers design multi-LLM workflows for content creation and lead qualification, iterating rapidly to optimize customer engagement. The tool bridges collaboration between technical and non-technical teams.

The following conceptual snippet expresses the marketing agency's multi-LLM chatbot and document analysis workflow in code. Langflow workflows are normally built in its visual editor, so Flow, LLM, and Chain here are hypothetical, illustrative names rather than Langflow's Python API.

```python
# Conceptual pseudocode: Flow, LLM, and Chain are hypothetical names that mirror
# the components you would wire together in Langflow's visual editor.
from langflow import Flow, LLM, Chain

# LLMs for content creation and lead qualification
content_llm = LLM(name="content-llm", model="gpt-4")
lead_qualifier_llm = LLM(name="lead-qualifier", model="gpt-4")

# Content creation chain
content_chain = Chain(
    llm=content_llm,
    prompt_template="Generate marketing content for product launch: {product_details}",
)

# Lead qualification chain
lead_chain = Chain(
    llm=lead_qualifier_llm,
    prompt_template="Qualify lead based on: {lead_info}",
)

# Compose both chains into one flow; the content output feeds lead qualification
flow = Flow(name="marketing_workflow")
flow.add_chain(content_chain, output_key="content")
flow.add_chain(lead_chain, input_key="content", output_key="lead_score")

# Example run with input data
results = flow.run(product_details="New SaaS platform", lead_info="Contact: vip@example.com")
print(results)
```

This sketch mirrors the multi-step LLM workflow that a team would assemble visually in Langflow. It lets marketing teams iterate on customer engagement pipelines with low-code, drag-and-drop ease.

When to Select Each AI Agent Orchestration Platform

Choosing the right AI agent orchestration platform depends on your specific project requirements, team expertise, and operational goals. Below is a detailed guide outlining when to select each popular framework:

| Platform | Best Fit For | Core Capabilities | Enterprise Applications |
|---|---|---|---|
| Kubiya | Engineering and DevOps teams | Enterprise-grade automation, natural language commands, strong security, visual workflow builder, Slack/Teams integration | Infrastructure automation, CI/CD |
| LangChain | Developers building multi-step LLM workflows | Flexible Python SDK, multi-LLM integration, strong community, extensibility | Document processing, chatbots |
| CrewAI | Enterprises with modular multi-agent teams | Secure encrypted inter-agent comms, centralized orchestration visibility | Cross-department workflow automation |
| Ray | Large-scale AI workloads and distributed execution | Scalability, fault-tolerance, cluster/cloud orchestration | ML training, simulation, model serving |
| AutoGen | Adaptive multi-agent systems with human-in-the-loop | Dynamic peer-to-peer communication, real-time evolving AI systems | Diagnostics, interactive analytics |
| Microsoft Agent Framework | Regulated enterprises using Azure AI Foundry | Cloud-native, pre-built AI modules, high governance and security | Finance, healthcare |
| LlamaIndex | Semantic search and knowledge management | LLM-augmented indexing, contextual querying | Enterprise knowledge bases, compliance |
| Smolagents | Lightweight, rapid prototyping | Fast NLP agent deployment, pre-trained models | Text classification, summarization |
| Phidata | Multimodal data integration | Cloud-agnostic, modular, supports text, images, video in AI systems | Media management, content discovery |
| Langflow | Low-code/no-code visual AI workflow builder | Drag-and-drop design, bridging business and technical teams | Chatbots, document parsing |

Fundamental Issues in AI Agent Orchestration

The challenges of running a system where many AI agents work together boil down to three essential points: making sure the team works reliably, ensuring the team follows the rules and can be trusted, and making sure the system is fast and affordable to run.

1. Getting the Team to Work Right (Reliability)

These are the core technical difficulties in making the agents function smoothly and correctly.

- Juggling the Team's Tasks (Coordination): It's extremely hard to organize many different AI workers so they talk to each other correctly, know whose turn it is, and stay in sync. If one worker is confused or late, the entire project can stall or produce the wrong result.

- Keeping a Good Memory (Context): It is hard to guarantee that every AI worker has an up-to-date and complete picture of the project's history, the current situation, and the relevant outside facts. If a worker forgets something important or works from stale information, it will make bad decisions.

- Fixing Mistakes Automatically (Error Recovery): The system must detect when a worker fails, a network connection drops, or an outside tool breaks, and then recover and get back on track without needing a human to step in every time.
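To make coordination, shared context, and error recovery concrete, here is a minimal sketch of a sequential coordinator. It assumes each agent is a plain Python function that reads a shared context dict and returns keys to merge into it; `run_pipeline`, the fallback-per-step design, and the sample agents are all hypothetical illustrations, not the API of any framework discussed above.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical agent signature: reads the shared context, returns keys to merge into it.
Agent = Callable[[Dict[str, object]], Dict[str, object]]
Step = Tuple[str, Agent, Optional[Agent]]  # (step name, primary agent, fallback agent or None)


def run_pipeline(steps: List[Step], context: Dict[str, object]) -> Dict[str, object]:
    """Run agents in a fixed order over one shared context, falling back on failure."""
    context = dict(context)  # shallow copy so the caller's dict is not mutated
    history: List[str] = []
    context["history"] = history
    for name, primary, fallback in steps:
        for candidate in (primary, fallback):
            if candidate is None:
                continue
            try:
                updates = candidate(context)
            except Exception as exc:  # a real system would catch narrower error types
                history.append(f"{name}: {candidate.__name__} failed ({exc})")
                continue
            context.update(updates)  # every later agent sees this step's output
            history.append(f"{name}: {candidate.__name__} ok")
            break
        else:
            # No agent succeeded: stop rather than hand incomplete context downstream.
            context["failed_step"] = name
            return context
    return context


# Hypothetical agents for a small risk-assessment flow.
def fetch_transactions(ctx):
    raise TimeoutError("upstream API timed out")  # simulate a broken outside tool


def fetch_cached_transactions(ctx):
    return {"transactions": [120.0, 75.5]}


def score_risk(ctx):
    return {"risk": "high" if sum(ctx["transactions"]) > 150 else "low"}


result = run_pipeline(
    [("fetch", fetch_transactions, fetch_cached_transactions), ("score", score_risk, None)],
    {"customer_id": "c-42"},
)
print(result["risk"], result["history"])
```

The `history` list doubles as a lightweight record of what happened at each step, and the early return on a failed step is a deliberate choice: a downstream agent never receives context that an upstream step failed to fill in.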

2. Earning Trust and Following the Rules (Governance)

These problems determine whether businesses can legally and safely use the AI team for important tasks.

- Security and Accountability (Governance): In a company, you need strict controls over who (which AI worker) can access what data. You also need a complete, tamper-evident record (audit trail) of every action they take, so you can prove they followed all company and legal rules.

- Understanding "Why" (Explainability): When a team of AI workers makes a big decision, people need to know the reasons behind it. If the decision-making process is a "black box," people won't trust it, and it can't be used for serious work (like in banks or hospitals).

- Working with Humans (Human Oversight): Designing the system so that the AI workers can smoothly pause, hand a task to a human for approval, and then take it back without delays is a complex design challenge.
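One common way to handle human oversight is an approval gate: low-risk actions run immediately, while high-risk ones are parked until a person decides. The sketch below assumes each action arrives with a numeric risk score; the `ApprovalGate` class, the 0.7 threshold, and the example actions are hypothetical and only illustrate the pause, hand-off, and resume pattern.

```python
import itertools
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class PendingAction:
    action_id: int
    description: str
    risk: float
    execute: Callable[[], str]


@dataclass
class ApprovalGate:
    """Runs low-risk actions at once and parks high-risk ones for human review.

    The default threshold is an arbitrary illustrative value, not a recommendation.
    """

    threshold: float = 0.7
    pending: Dict[int, PendingAction] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count)

    def submit(self, description: str, risk: float, execute: Callable[[], str]) -> Optional[str]:
        if risk < self.threshold:
            self.log.append(f"auto-approved: {description}")
            return execute()
        action_id = next(self._ids)
        self.pending[action_id] = PendingAction(action_id, description, risk, execute)
        self.log.append(f"awaiting human review #{action_id}: {description}")
        return None  # the agent moves on; the action resumes when a human decides

    def decide(self, action_id: int, approved: bool, reviewer: str) -> Optional[str]:
        action = self.pending.pop(action_id)  # KeyError if unknown or already decided
        if not approved:
            self.log.append(f"rejected by {reviewer}: {action.description}")
            return None
        self.log.append(f"approved by {reviewer}: {action.description}")
        return action.execute()


gate = ApprovalGate()
first = gate.submit("send weekly summary email", 0.2, lambda: "email sent")
held = gate.submit("freeze customer account", 0.9, lambda: "account frozen")
outcome = gate.decide(0, approved=True, reviewer="risk-analyst")
print(first, held, outcome)
```

Returning `None` from `submit` instead of blocking keeps the agent free to continue other work while a human reviews the held action; the `log` entries also record who approved what.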

3. Being Fast and Affordable (Scalability and Viability)

These issues are about whether the system can handle a massive workload without getting too slow or too expensive.

- Handling Big Crowds and Costs (Scalability): The system has to grow quickly to manage thousands of AI workers at once. Crucially, it must be smart about using expensive computer resources (like the powerful AI models) to keep performance high while keeping the operating cost low.

- Plugging Everything In (Interoperability): The system has to connect with all the different software, databases, and old computer tools a company already uses. Building and maintaining all these different connections is a huge, ongoing task.

- Needing to be Instant (Latency): For some jobs, the AI workers need to talk to each other and respond immediately. It is difficult to make sure this happens reliably when all the computers are spread out across a network.
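The cost and latency concerns above can be illustrated with Python's standard `asyncio`: a semaphore caps how many expensive model calls are in flight at once, and a per-call timeout keeps one slow call from stalling the whole workflow. This is a minimal sketch under the assumption that model calls are async; `call_model` is a hypothetical stand-in that just sleeps, and the limits are arbitrary.

```python
import asyncio
from typing import List, Tuple


async def call_model(prompt: str) -> str:
    """Hypothetical stand-in for an expensive LLM call; a real agent would await a client here."""
    await asyncio.sleep(0.01)
    return f"answer to {prompt!r}"


async def run_agents(
    prompts: List[str], max_concurrent: int, timeout_s: float
) -> Tuple[List[str], int]:
    """Fan out many agent requests while capping concurrent model calls."""
    semaphore = asyncio.Semaphore(max_concurrent)  # at most N calls in flight (cost / rate limits)
    in_flight = 0
    peak = 0  # tracked only to show the cap holds

    async def one(prompt: str) -> str:
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                # Bound each call's latency so one slow call cannot block the rest.
                return await asyncio.wait_for(call_model(prompt), timeout=timeout_s)
            except asyncio.TimeoutError:
                return "timeout"  # degrade gracefully instead of stalling
            finally:
                in_flight -= 1

    results = await asyncio.gather(*(one(p) for p in prompts))  # preserves input order
    return list(results), peak


results, peak = asyncio.run(
    run_agents([f"task {i}" for i in range(20)], max_concurrent=4, timeout_s=1.0)
)
print(len(results), peak)
```

Within a single process this is enough to trade throughput against spend; across a cluster the same idea is usually enforced by a shared rate limiter or queue rather than an in-memory semaphore.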

Building Reliable AI Orchestration: Engineering Priorities

1. Code Structure and Design

The focus here is on making the system easy to build, test, and maintain over time.

- Modular Architecture: You must design the system with clear boundaries. Separate your code so that the part handling communication, the part managing the workflow steps, and the part executing the agent logic are distinct. This makes debugging easier and lets teams work on parts independently.

- Standardized Integration Layers: Treat all external tools, databases, and APIs the same way. Expose every connection point through a consistent, versioned API (for example, gRPC or REST). Use adapter patterns to isolate the messy integration code, so that a change in an external system is absorbed by its adapter instead of breaking the core agent engine.
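The adapter pattern mentioned above can be sketched in a few lines: the agent engine only knows one `ToolAdapter` interface and a registry keyed by tool name and version, while each adapter translates a vendor's quirks into the engine's schema. All class names, the CRM field names, and `FakeCrmClient` are hypothetical, chosen only to show the shape of the pattern.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Tuple


class ToolAdapter(ABC):
    """Uniform interface the agent engine depends on; one subclass per external system."""

    name: str = ""
    version: str = "v1"

    @abstractmethod
    def call(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Take a normalized request and return a normalized response."""


class LegacyCrmAdapter(ToolAdapter):
    """Wraps a hypothetical legacy CRM client whose field names differ from ours."""

    name = "crm"
    version = "v1"

    def __init__(self, client: Any) -> None:
        self._client = client

    def call(self, request: Dict[str, Any]) -> Dict[str, Any]:
        raw = self._client.lookup_customer(cust_no=request["customer_id"])
        # Translate vendor-specific fields into the engine's schema.
        return {"customer_id": raw["CUST_NO"], "tier": raw["SEG"].lower()}


class ToolRegistry:
    """Looks up adapters by (name, version) so versions can be swapped independently."""

    def __init__(self) -> None:
        self._tools: Dict[Tuple[str, str], ToolAdapter] = {}

    def register(self, adapter: ToolAdapter) -> None:
        self._tools[(adapter.name, adapter.version)] = adapter

    def call(self, name: str, request: Dict[str, Any], version: str = "v1") -> Dict[str, Any]:
        return self._tools[(name, version)].call(request)


class FakeCrmClient:
    """Test double standing in for a vendor SDK."""

    def lookup_customer(self, cust_no: str) -> Dict[str, str]:
        return {"CUST_NO": cust_no, "SEG": "GOLD"}


registry = ToolRegistry()
registry.register(LegacyCrmAdapter(FakeCrmClient()))
customer = registry.call("crm", {"customer_id": "c-42"})
print(customer)  # {'customer_id': 'c-42', 'tier': 'gold'}
```

If the CRM vendor renames `SEG`, only `LegacyCrmAdapter.call` changes; agents keep consuming `{"customer_id", "tier"}`.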

2. Data Integrity and Performance

This is about managing the information flow and ensuring the system is fast and consistent.

- Robust State Management: Agents need a reliable shared memory. Use an architecture (often based on event sourcing or transactional storage) that keeps a consistent, up-to-date view of state across all agents. If the system fails, you must be able to audit and, if needed, roll back that state.

- Asynchronous Communication: To achieve speed, agents should avoid blocking while they wait on each other. Use message brokers (like Kafka or RabbitMQ) and event-driven patterns so agents can publish information and immediately move on to their next task.

- Data Efficiency: Optimize the data itself. Use lightweight, fast serialization formats (like Protobuf instead of JSON) for messages passed between agents to reduce network load and latency.
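Event sourcing, named above for state management, stores every change as an immutable event and derives current state by replaying the log, which gives both an audit trail and point-in-time rollback. The in-memory `EventStore` below is a minimal sketch of that idea; a production system would persist events in a durable log (for example, a Kafka topic or a database table), and the class, method names, and sample keys are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Tuple

_DELETED = object()  # sentinel value marking a key as removed


@dataclass(frozen=True)
class Event:
    seq: int
    agent: str
    key: str
    value: Any


class EventStore:
    """Append-only log of state changes; current state is derived by replaying it."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, agent: str, key: str, value: Any) -> int:
        event = Event(len(self._events), agent, key, value)
        self._events.append(event)  # events are never edited or deleted
        return event.seq

    def state(self, upto: Optional[int] = None) -> Dict[str, Any]:
        """Replay events (optionally only those with seq <= upto) into a snapshot."""
        snapshot: Dict[str, Any] = {}
        for event in self._events:
            if upto is not None and event.seq > upto:
                break
            if event.value is _DELETED:
                snapshot.pop(event.key, None)
            else:
                snapshot[event.key] = event.value
        return snapshot

    def rollback_to(self, seq: int, agent: str) -> None:
        """Restore the state as of `seq` by appending compensating events."""
        target, current = self.state(upto=seq), self.state()
        for key in sorted(set(current) | set(target)):
            if key not in target:
                self.append(agent, key, _DELETED)
            elif key not in current or current[key] != target[key]:
                self.append(agent, key, target[key])

    def history(self, key: str) -> List[Tuple[int, str, Any]]:
        return [(e.seq, e.agent, e.value) for e in self._events if e.key == key]


store = EventStore()
store.append("ingest-agent", "exposure", 1_000_000)  # seq 0
store.append("risk-agent", "rating", "A")            # seq 1
store.append("risk-agent", "rating", "C")            # seq 2: a bad update
store.append("risk-agent", "alert", True)            # seq 3
store.rollback_to(1, agent="operator")
print(store.state())
```

Rollback is itself recorded as new events rather than by deleting old ones, so auditors can still see the bad update and who reverted it.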

3. Reliability and Operations (DevOps)

These practices ensure the system can handle failures and high loads without human intervention.

- Cloud-Native and Scalable: Write code that is stateless and can run easily inside containers (like Docker). Use container orchestrators (like Kubernetes) and Infrastructure-as-Code (Terraform) to automatically scale the entire system up or down based on the actual workload.

- Comprehensive Error Handling: Build in defensive failure handling. This means using circuit breakers to prevent cascading failures and implementing exponential backoff retry logic so agents don't bombard a failing service.

- Tracing and Logging: Instrument every single agent and service with distributed tracing tools (like OpenTelemetry). This is crucial for tracking a single request from start to finish across dozens of microservices, making production debugging possible.
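The circuit breaker and exponential backoff described above combine naturally: retries with growing, randomized delays absorb brief outages, while the breaker stops all calls to a service that keeps failing and periodically lets one trial call through. This is a minimal, single-threaded sketch; the thresholds are arbitrary, and the injectable `clock` and `sleep` parameters exist only so the behavior can be tested without real waiting.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures, rejects calls for
    `reset_after` seconds, then allows one trial call (the "half-open" state)."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None and self._clock() - self._opened_at < self.reset_after:
            raise CircuitOpenError("circuit open; skipping call")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # A failed trial call re-opens the circuit; otherwise open at the threshold.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures, self._opened_at = 0, None  # success closes the circuit
        return result


def retry_with_backoff(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                       max_delay: float = 8.0, sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `fn` with exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # the breaker already marked the service as down; don't keep trying
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # random wait spreads out retries from many agents
    raise AssertionError("unreachable")


calls = {"n": 0}


def flaky_tool() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("tool unavailable")
    return "ok"


breaker = CircuitBreaker(failure_threshold=5)
result = retry_with_backoff(lambda: breaker.call(flaky_tool), sleep=lambda s: None)
print(result, calls["n"])
```

Putting the breaker inside the retry loop means a burst of transient errors is retried, but once the breaker opens every agent fails fast instead of adding load to the struggling service.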

4. Trust, Security, and Auditability

For enterprise deployment, these features are non-negotiable from a compliance and security standpoint.

- Security by Design (RBAC): Implement Role-Based Access Control from the ground up. An agent's execution environment must strictly enforce permissions, ensuring agents only access the data and tools they are explicitly authorized for (the principle of least privilege).

- Transparency and Audit Logs: For every critical decision, write a detailed, unchangeable (immutable) log entry that captures the raw input, the LLM prompt, the final output, and the decision path. This is the proof needed for both internal debugging and external regulation.

- Human-in-the-Loop Integration: Build clear, secure APIs for human intervention. These interfaces should seamlessly inject human decisions (approvals, overrides) back into the workflow queue without causing bottlenecks or data corruption.
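Least-privilege RBAC and audit logging can be enforced at the single point where agents invoke tools. In the sketch below, unknown roles get no permissions (deny by default), and every attempt, allowed or not, is written to a hash-chained log in which each entry stores the SHA-256 hash of the previous one. Note that hash chaining makes tampering detectable rather than impossible; genuinely immutable storage would be a separate infrastructure choice. The role names, permission strings, and `invoke_tool` helper are hypothetical.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List, Set


class PermissionDenied(Exception):
    pass


def _digest(body: Dict[str, Any]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log; each entry includes the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def record(self, **fields: Any) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"prev_hash": prev_hash, **fields}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or removed entry breaks it."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "report-agent": {"read:transactions"},
    "payments-agent": {"read:transactions", "write:payments"},
}


def invoke_tool(role: str, permission: str, tool: Callable[[Any], Any],
                request: Any, log: AuditLog) -> Any:
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    log.record(role=role, permission=permission, allowed=allowed, request=request)
    if not allowed:
        raise PermissionDenied(f"{role} lacks {permission}")
    output = tool(request)
    log.record(role=role, permission=permission, output=output)
    return output


log = AuditLog()
summary = invoke_tool("report-agent", "read:transactions",
                      lambda req: {"count": 2}, {"account": "acc-1"}, log)
try:
    invoke_tool("report-agent", "write:payments", lambda req: "paid", {"amount": 500}, log)
    denied = False
except PermissionDenied:
    denied = True
print(summary, denied, log.verify())
```

Checking permissions and writing the log in the same function means no agent can reach a tool without leaving a record, which is the property auditors and regulators typically ask for.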

Conclusion

In 2025, AI agent orchestration frameworks define the next wave of intelligent, collaborative, and scalable systems.

Frameworks like LangChain, CrewAI, and Ray power enterprise and technical workflows, while LlamaIndex, Langflow, and Botpress enable developers to build practical, domain-specific applications quickly.

For developers aiming to stay ahead, mastering these frameworks will be critical to designing the next generation of AI-driven systems that automate, analyze, and scale intelligently.

FAQs

What is an AI agent orchestration framework, and why is it important?

It’s a platform that manages multiple AI agents working together, essential for building complex, scalable AI systems that solve real-world problems.

How do AI agent orchestration frameworks improve multi-agent collaboration?

They enable smooth communication, task coordination, and error handling among agents to ensure efficient teamwork and adaptive workflows.

Which AI agent orchestration frameworks are best for enterprise applications?

LangChain, CrewAI, Ray, and Microsoft Agent Framework are top choices for their scalability, security, and robust enterprise features.

Can non-developers use AI agent orchestration tools, or are they only for programmers?

Some frameworks like Langflow offer low-code/no-code interfaces for non-developers, while others like Ray and Microsoft Agent Framework are geared towards programmers needing advanced customization.

About the author

Amit Eyal Govrin

Amit oversaw strategic DevOps partnerships at AWS, where he repeatedly encountered industry-leading DevOps companies struggling with the same pain point: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.