Deterministic AI vs Generative AI: A Developer’s Perspective

Amit Eyal Govrin

As AI agents become more common in developer tooling, the excitement around "AI-driven automation" is growing. But for platform teams, SREs, and DevOps engineers, not all AI is created equal, especially when it comes to reliability, control, and auditability.

This is where terms like deterministic AI and generative AI come into play, and Kubiya, an AI copilot for DevOps and internal developer workflows, is a clear example of how the two differ in practice.

Kubiya is a deterministic AI assistant, a term that stands in contrast to the more popular LLM-driven "copilots" like ChatGPT or GitHub Copilot. So, what exactly does that mean in practice?

This article breaks it down with technical clarity and real-world developer implications.

What is Deterministic AI?

Deterministic AI operates on fixed logic paths and well-defined inputs. The idea is simple: for a given input, you always get the same output. No surprises, no fuzzy guesses, no room for interpretation. For example, a Kubernetes health check that restarts a container after three consecutive failed liveness probes is deterministic: the logic and thresholds are hard-coded and produce predictable actions.

Think of it as programmatic intelligence. There’s no “learning” or “creativity” involved, only structured workflows, conditionals, and rule-based systems.
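To make the liveness-probe example concrete, here is a minimal Python sketch of that rule. It is not the kubelet's actual implementation; the function name `should_restart` is hypothetical, and the default threshold of 3 mirrors the default `failureThreshold` for Kubernetes probes. Given the same probe history, it always returns the same answer:

```python
from typing import Sequence


def should_restart(probe_results: Sequence[bool], failure_threshold: int = 3) -> bool:
    """Return True if the last `failure_threshold` probes all failed.

    `probe_results` is ordered oldest to newest; True means the probe passed.
    """
    if failure_threshold <= 0:
        raise ValueError("failure_threshold must be positive")
    recent = probe_results[-failure_threshold:]
    # Restart only on an unbroken run of failures at the end of the history.
    return len(recent) == failure_threshold and not any(recent)


print(should_restart([True, True, False, False, False]))  # True
print(should_restart([False, True, False, False]))        # False: streak is only 2
```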

How it looks in practice:

Let’s say you want to restart a Kubernetes deployment. A deterministic system like Kubiya would:

- Check if the user has permission to perform this action

- Validate the namespace and deployment name

- Run `kubectl rollout restart deployment <name> -n <namespace>`

- Log the output and track it for auditing

Every time this flow is triggered, the logic is exactly the same.
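The four steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not Kubiya's implementation: the `PERMISSIONS` table, the simplified name check, and the in-memory `AUDIT_LOG` are hypothetical stand-ins for a real RBAC system, Kubernetes' full naming rules, and a durable audit store. The function records the request and returns the `kubectl` argument list instead of running it, so it can be inspected or handed to `subprocess.run`:

```python
import re
import shlex
from datetime import datetime, timezone

# Hypothetical RBAC table: user -> namespaces where they may restart deployments.
PERMISSIONS = {"alice": {"dev", "staging"}, "bob": {"dev"}}

# Simplified DNS-1123 label rule: lowercase alphanumerics and '-', 1-63 chars.
NAME_RE = re.compile(r"[a-z0-9]([-a-z0-9]{0,61}[a-z0-9])?")

# Hypothetical in-memory audit trail; a real system would use durable storage.
AUDIT_LOG: list[dict] = []


def restart_deployment(user: str, name: str, namespace: str) -> list[str]:
    """Check permissions, validate inputs, record an audit entry, return argv."""
    # 1. Permission check
    if namespace not in PERMISSIONS.get(user, set()):
        raise PermissionError(f"{user} may not restart deployments in {namespace}")
    # 2. Input validation
    for value in (name, namespace):
        if not NAME_RE.fullmatch(value):
            raise ValueError(f"invalid Kubernetes name: {value!r}")
    # 3. The command is built from a fixed template, never from free-form text
    argv = ["kubectl", "rollout", "restart", f"deployment/{name}", "-n", namespace]
    # 4. Audit entry: who requested what, and when
    AUDIT_LOG.append({
        "user": user,
        "command": shlex.join(argv),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return argv  # e.g. subprocess.run(argv, check=True) to actually execute
```

Because the command is a fixed template with validated slots, the same request always produces the same `kubectl` invocation.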

Why it is important for engineers:

In production environments, you don’t want creative AI. You want reliable automation that follows your org’s policies and respects access controls. Deterministic AI shines in:

- Infrastructure automation (Terraform, Helm, K8s)

- CI/CD execution

- Access management and secrets rotation

- Policy-enforced workflows

Limitations:

The main limitation is flexibility. You need to predefine every action and path. If a user asks for something slightly outside the defined flow, the system won’t know how to handle it. That’s where generative AI can complement it.

What is Generative AI?

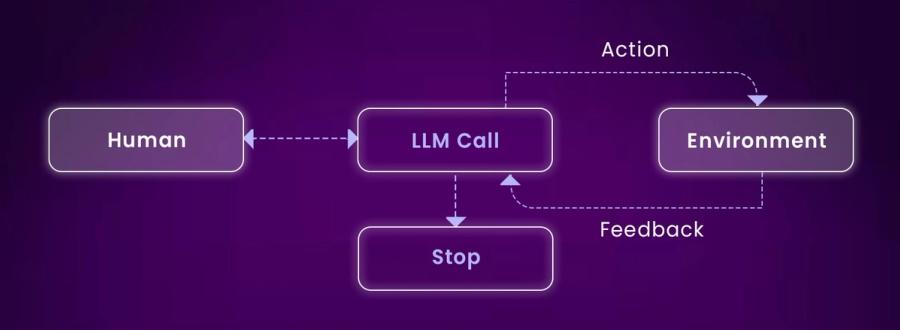

An illustration of agentic AI.

Generative AI, often powered by large language models (LLMs), doesn’t follow strict logic. Instead, it’s probabilistic: given your prompt, it generates a response one token at a time, choosing each token from a probability distribution learned during training, and produces what it predicts you want.

It’s great at filling in gaps, understanding ambiguous input, and generating responses that sound natural. But that comes at a cost: you lose consistency and control.
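A toy illustration of the difference: greedy decoding always picks the highest-scoring token, while temperature sampling draws tokens in proportion to their probability, so the same prompt can produce different outputs. The scores below are made up, and real models sample over an entire vocabulary, often with additional strategies such as top-p; this sketch only shows the contrast:

```python
import math
import random

# Made-up next-token scores (logits) for a prompt like "What should I do with the pod?"
LOGITS = {"restart": 2.0, "rollback": 1.5, "delete": 0.5}


def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert scores to probabilities; higher temperature flattens them."""
    scaled = {tok: s / temperature for tok, s in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy(logits: dict[str, float]) -> str:
    """Deterministic decoding: always the highest-scoring token."""
    return max(logits, key=logits.__getitem__)


def sample(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Probabilistic decoding: draw a token weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


rng = random.Random()
print({greedy(LOGITS) for _ in range(5)})             # {'restart'}
print({sample(LOGITS, 1.0, rng) for _ in range(50)})  # usually several different tokens
```

Note that "delete" keeps a nonzero probability under sampling, which is exactly why sampled output should not drive infrastructure directly.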

Common uses in dev workflows:

- Summarizing build logs after a CI failure

- Suggesting fixes for failed Terraform plans

- Generating boilerplate code for a new microservice

- Answering vague developer questions like “why is prod down?”

Why engineers like it:

Generative AI feels human. It can help reduce time spent debugging, writing boilerplate, or navigating documentation. It’s especially useful for junior developers or in high-pressure incident response situations.

The catch:

Generative AI can hallucinate: it might invent APIs, suggest unsafe commands, or respond with incomplete answers. That’s tolerable in an IDE, where a developer reviews the suggestion before it runs, but dangerous in production infrastructure.

The 5 Levels of Agentic AI

Adaline Labs describes a progression of AI agency, from simple text responders to fully autonomous agents, and this framework helps position where tools like Kubiya fit within the intelligence spectrum.

Basic Responder

A straightforward text-response system. These AI models don’t interact with external systems; they simply respond to prompts and stop.

Router Pattern

Adds decision-making: the AI can choose between predefined “functions” or workflows, like selecting to run a script or fetch documentation.

Tool Calling

The AI can actually call external tools/APIs when necessary, e.g., fetch logs, query Kubernetes, or run Terraform commands, based on context it infers.
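A minimal Python sketch of the tool-calling pattern: the model emits a structured request, and the host application looks the tool up in a registry and executes it. The JSON shape, the tool names, and their stub results are assumptions for illustration; real LLM APIs each define their own tool-call format:

```python
import json
from typing import Any, Callable


# Hypothetical read-only tools the host exposes to the model (stubbed here).
def list_pods(namespace: str) -> list[str]:
    return [f"{namespace}-api-0", f"{namespace}-worker-0"]


def get_pod_logs(pod: str, namespace: str) -> str:
    return f"(logs for {namespace}/{pod} would be fetched here)"


TOOLS: dict[str, Callable[..., Any]] = {
    "list_pods": list_pods,
    "get_pod_logs": get_pod_logs,
}


def dispatch(model_output: str) -> Any:
    """Execute a tool call the model emitted as {"tool": ..., "args": {...}}."""
    call = json.loads(model_output)
    name, args = call.get("tool"), call.get("args", {})
    if name not in TOOLS:
        raise ValueError(f"model requested unknown tool: {name!r}")
    return TOOLS[name](**args)


# In a real system this string would come from the LLM; here it is hard-coded.
print(dispatch('{"tool": "list_pods", "args": {"namespace": "staging"}}'))
# ['staging-api-0', 'staging-worker-0']
```

The model chooses the tool and arguments, but only functions present in `TOOLS` can ever run.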

Multi-Agent Pattern

Complex tasks are delegated to specialized sub-agents. For instance, one part handles log parsing, another proposes fixes, and another executes cleanups.

Autonomous Pattern

Fully independent agents that plan, code, and execute new logic on the fly, similar to an AI developer. (Still mostly theoretical in infrastructure contexts.)

Kubiya’s Hybrid Approach

Kubiya sits in a middle ground that’s both pragmatic and powerful. It uses generative AI only where it helps, but strictly limits its authority when it comes to action execution.

Here’s what that looks like:

1. User sends a natural-language prompt: “Restart the checkout service in staging”

2. LLM parses the prompt: Extracts entities like checkout-service and staging

3. Maps to a validated deterministic workflow: Matches the request to a predefined action:

`kubectl rollout restart deployment/checkout-service -n staging`

4. Executes with guardrails: Kubiya checks:

- User permissions

- Environment constraints

- Available context

5. Logs and audits: Every execution is logged with metadata: who, what, where, when

This is deterministic execution wrapped in a conversational UX, a solid tradeoff that balances productivity with control.
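The five steps can be sketched end to end in Python. This is a hypothetical illustration, not Kubiya's code: a regular expression stands in for the LLM extraction step so the example runs offline, and `WORKFLOWS`, `ALLOWED_USERS`, and `ALLOWED_ENVIRONMENTS` are made-up policy tables. The key property is that the model's output only selects and fills a pre-approved template; it never composes the command itself:

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Intent:
    action: str
    service: str
    environment: str


def parse_prompt(prompt: str) -> Intent:
    """Stand-in for the LLM step (2): extract entities from the request.

    A regex is used only so this sketch runs offline; it is not how an LLM works.
    """
    m = re.search(r"restart the ([a-z0-9-]+) service in ([a-z]+)", prompt, re.IGNORECASE)
    if not m:
        raise ValueError("could not extract an intent")
    return Intent("restart", f"{m.group(1).lower()}-service", m.group(2).lower())


# Hypothetical policy tables owned by the platform team.
WORKFLOWS = {"restart": "kubectl rollout restart deployment/{service} -n {environment}"}
ALLOWED_USERS = {"alice", "bob"}
ALLOWED_ENVIRONMENTS = {"dev", "staging"}


def handle(prompt: str, user: str, audit: list[dict]) -> str:
    intent = parse_prompt(prompt)
    # Step 3: map to a pre-approved workflow template
    template = WORKFLOWS.get(intent.action)
    if template is None:
        raise PermissionError(f"no approved workflow for {intent.action!r}")
    # Step 4: guardrails (user permissions, environment constraints)
    if user not in ALLOWED_USERS:
        raise PermissionError(f"{user} is not allowed to run {intent.action!r}")
    if intent.environment not in ALLOWED_ENVIRONMENTS:
        raise PermissionError(f"{intent.environment!r} requires a separate approval flow")
    command = template.format(service=intent.service, environment=intent.environment)
    # Step 5: audit record with who, what, where, when
    audit.append({"who": user, "what": command, "where": intent.environment,
                  "when": datetime.now(timezone.utc).isoformat()})
    return command  # a real system would now execute this command
```

For "Restart the checkout service in staging", `handle` returns `kubectl rollout restart deployment/checkout-service -n staging` and appends one audit record.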

When Should You Use Deterministic AI?

1. Infrastructure Provisioning

Think Terraform or Pulumi. When you're creating a VPC, deploying a GKE cluster, or modifying IAM roles, you need repeatable and verifiable results. You don’t want AI guessing resource names or skipping a dry-run.
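One deterministic pattern for this is a fixed plan-then-apply sequence. The Python sketch below uses real Terraform CLI options (`-chdir`, `-input=false`, `-var`, `-out`, and applying a saved plan file), but the `environment` variable name and the `approved` flag are assumptions about how your configuration and review process are set up:

```python
import subprocess


def terraform_commands(env: str, workdir: str = ".") -> list[list[str]]:
    """Fixed init -> plan -> apply sequence for one environment.

    Names come from the `environment` variable (a hypothetical variable your
    configuration would declare), and apply only consumes the saved plan file.
    """
    plan_file = f"{env}.tfplan"
    base = ["terraform", f"-chdir={workdir}"]
    return [
        base + ["init", "-input=false"],
        base + ["plan", "-input=false", f"-var=environment={env}", f"-out={plan_file}"],
        base + ["apply", "-input=false", plan_file],
    ]


def provision(env: str, workdir: str = ".", approved: bool = False) -> None:
    """Always plan; apply only after an explicit approval."""
    init, plan, apply = terraform_commands(env, workdir)
    subprocess.run(init, check=True)
    subprocess.run(plan, check=True)
    if not approved:
        print(f"Plan saved to {env}.tfplan; apply skipped until it is approved.")
        return
    subprocess.run(apply, check=True)
```

Applying the saved plan file, rather than re-planning at apply time, means what gets applied is exactly what was reviewed.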

2. Access Control & Secrets Rotation

Resetting secrets, rotating keys, or modifying roles must go through audited, secure workflows. Deterministic AI enforces approval policies, reduces human error, and prevents overreach.
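An approval policy is a good example of logic that should be deterministic. This Python sketch assumes a hypothetical approver roster and a two-approval rule; the point is that the decision depends only on who approved, never on a model's judgment:

```python
from dataclasses import dataclass, field

APPROVERS = {"carol", "dave", "erin"}  # hypothetical security-team roster
REQUIRED_APPROVALS = 2                  # hypothetical policy


@dataclass
class RotationRequest:
    requester: str
    secret_name: str
    approvals: set[str] = field(default_factory=set)


def approve(req: RotationRequest, approver: str) -> None:
    if approver not in APPROVERS:
        raise PermissionError(f"{approver} is not an approver")
    if approver == req.requester:
        raise PermissionError("requesters cannot approve their own rotation")
    req.approvals.add(approver)  # a set, so repeat approvals do not count twice


def can_rotate(req: RotationRequest) -> bool:
    """Same approvals in, same decision out."""
    return len(req.approvals) >= REQUIRED_APPROVALS
```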

When Should You Use Generative AI?

1. Developer Onboarding

Developer onboarding often suffers from information sprawl. A new engineer might need access to:

- Setup instructions across several tools and platforms

- Internal documentation stored in Confluence, Notion, or GitHub

- Tribal knowledge hidden in Slack threads or Jira tickets

- On-call practices, naming conventions, or service responsibilities

Now imagine a junior engineer asking Kubiya in Slack: “How do I spin up the dev environment for the payments service?” Instead of pointing them to a 5,000-word wiki page with outdated steps, Kubiya, powered by generative AI, does the heavy lifting:

- It parses the question and determines intent

- It searches across structured and unstructured content (docs, READMEs, Slack messages)

- It extracts only the relevant setup steps for the payments service

- It surfaces them in a clean, readable format

Something like:

“To set up the payments service in your local dev environment:

- Clone the monorepo and check out the dev branch.

- Run pnpm bootstrap && pnpm dev:payments.

- Set your .env file based on the shared template in /configs/dev.env.

- The service runs on port 4002. Hit localhost:4002/healthz to verify.

For DB access, connect to the payments-local instance through the Cloud SQL Proxy.”

No one had to update a doc, and the engineer got what they needed in seconds, without creating ticket noise in internal support channels.

Kubiya enables this by blending LLMs with internal context. Unlike static bots or search, it understands nuance and intent, even when the question isn’t phrased perfectly. Over time, it can learn from usage patterns, common follow-ups, and org-specific terminology to become more helpful.
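The retrieval step behind an answer like this can be sketched in a few lines. This is a deliberately naive stand-in, not how Kubiya works: it ranks hypothetical document snippets by word overlap with the question, whereas production systems typically use embeddings or a search index, and then pass the top results to an LLM to compose the answer (that call is omitted here):

```python
import re

# Hypothetical snippets collected from docs, READMEs, and Slack.
DOCS = {
    "payments/README.md": "Run pnpm bootstrap && pnpm dev:payments to start the payments service.",
    "wiki/dev-env.md": "Copy /configs/dev.env to .env before starting any service.",
    "slack/#oncall": "Paging policy: escalate after 15 minutes without an ack.",
}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k sources that share the most words with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda src: len(q & words(docs[src])), reverse=True)
    return [src for src in ranked[:k] if q & words(docs[src])]


print(retrieve("How do I spin up the dev environment for the payments service?", DOCS))
# ['payments/README.md', 'wiki/dev-env.md']
```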

2. Incident Debugging

The second area where generative AI shines is incident triage, especially when things break and time is of the essence.

During an outage or failed deployment, engineers often deal with massive log files, cryptic stack traces, and unclear failure modes. This isn’t where you want to write jq filters or grep 1,200 lines of JSON logs.

Instead, with Kubiya plugged into your CI/CD stack (e.g., GitHub Actions, ArgoCD, or Jenkins), an engineer can ask:

“Why did my production deployment fail last night?”

Kubiya, using its generative layer, fetches the most recent pipeline runs, parses the logs, and generates a structured summary:

“The deploy failed during the Install Dependencies step. Error:

ERROR: pnpm install failed - missing lockfile. Run `pnpm install --lockfile-only`.

This started after commit d7c1a23, which removed pnpm-lock.yaml from the repo. The failure affected only the staging-deploy job. Try restoring the lockfile or updating the pipeline to allow missing lockfiles.”

This is context that would take a human 10+ minutes to piece together, especially in a high-pressure scenario.
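A large part of that effort is usually finding the few relevant lines in a long log. A simple pre-processing step, sketched here in Python as an assumption rather than Kubiya's actual pipeline, keeps only the first error and its surrounding context before anything is sent to an LLM. The sample log is made up to match the example above:

```python
import re

ERROR_RE = re.compile(r"\berror\b", re.IGNORECASE)


def error_context(log: str, before: int = 2, after: int = 1) -> list[str]:
    """Return the first line mentioning an error, plus nearby lines."""
    lines = log.splitlines()
    for i, line in enumerate(lines):
        if ERROR_RE.search(line):
            return lines[max(0, i - before): i + after + 1]
    return []  # no error found: nothing worth sending to the model


# Made-up log that mirrors the example above.
sample_log = """Step: Checkout
Step: Setup Node
Step: Install Dependencies
ERROR: pnpm install failed - missing lockfile
Step: Cleanup
Job finished with 0 warnings"""

print("\n".join(error_context(sample_log)))
```

The word-boundary regex avoids matching summary lines such as "0 errors"; a real pipeline would likely need patterns tuned to its own tools.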

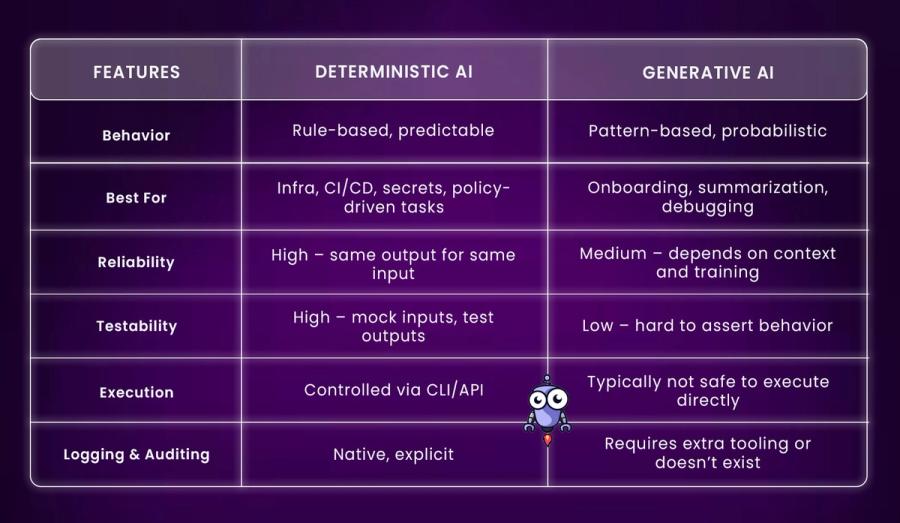

Deterministic AI vs Generative AI: A Comparison Table

Example Workflows Using Kubiya

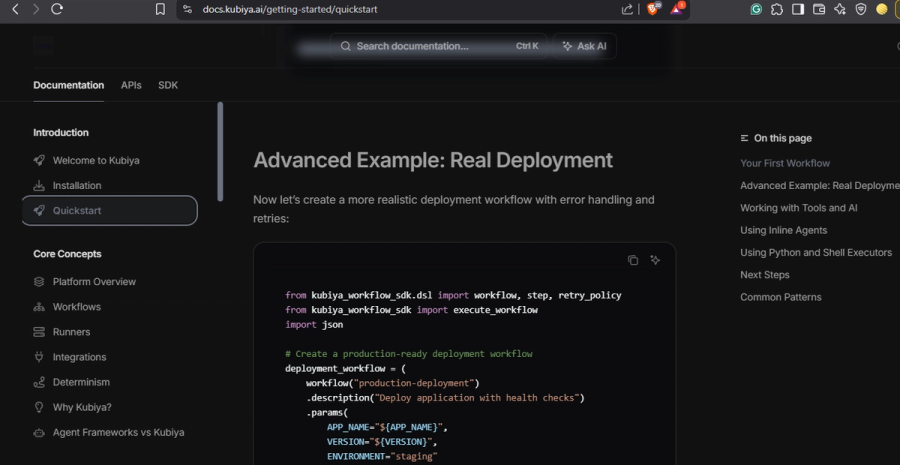

1. Controlled Deployment (Deterministic)

Kubiya’s deterministic workflow for a deployment:

```python
from kubiya_workflow_sdk.dsl import workflow
from kubiya_workflow_sdk import execute_workflow
import json

# Create a production-ready deployment workflow
deployment_workflow = (
    workflow("production-deployment")
    .description("Deploy application with health checks")
    .params(
        APP_NAME="${APP_NAME}",
        VERSION="${VERSION}",
        ENVIRONMENT="staging",
    )
    # Step 1: Validate deployment parameters
    .step("validate", """
if [ -z "$APP_NAME" ] || [ -z "$VERSION" ]; then
  echo "Error: APP_NAME and VERSION are required"
  exit 1
fi
echo "Deploying $APP_NAME version $VERSION to $ENVIRONMENT"
""")
    # Step 2: Deploy application
    .step("deploy", """
echo "Deploying ${APP_NAME}:${VERSION}..."
# In real scenario: kubectl set image deployment/${APP_NAME} app=${APP_NAME}:${VERSION}
sleep 2
echo "Deployment initiated"
""")
    # Step 3: Health check (placeholder; no retries are configured here)
    .step("health_check", """
echo "Checking health..."
# In real scenario: curl -f http://localhost:8080/health
echo "Health check passed"
""")
    # Step 4: Notify success
    .step("notify", """
echo "🎉 Deployment Complete!"
echo "Application: ${APP_NAME}"
echo "Version: ${VERSION}"
echo "Environment: ${ENVIRONMENT}"
echo "Status: Healthy"
""")
)

# Execute the workflow
params = {
    "APP_NAME": "my-awesome-app",
    "VERSION": "2.1.0",
}

print("🚀 Starting production deployment workflow...\n")

for event_str in execute_workflow(
    deployment_workflow.to_dict(),
    api_key="YOUR_API_KEY",
    parameters=params,
    stream=True,
):
    try:
        event = json.loads(event_str)
        if event.get("type") == "step_started":
            print(f"▶️ {event.get('step_name', 'Unknown')}: Starting...")
        elif event.get("type") == "step_completed":
            print(f"✅ {event.get('step_name', 'Unknown')}: Completed")
        elif event.get("type") == "log":
            print(f"   {event.get('message', '')}")
    except json.JSONDecodeError:
        # Handle non-JSON events (e.g. SSE "data:" lines)
        if "data:" in event_str:
            print(event_str.replace("data:", "").strip())
```

Prompt:

“Start the payment-api in dev”

Result:

The execute_workflow function with stream=True returns a generator of string events (JSON or SSE format) that you iterate over to get real-time workflow execution updates, while stream=False returns an ExecutionResult object with the final results.

2. Debugging Pipeline Failures (Generative)

Prompt:

“Why did my staging deploy fail last night?”

Kubiya:

- Fetches the last GitHub Actions run

- Parses log context via LLM

- Responds: “The build failed during the ‘Install deps’ step due to a missing pnpm-lock.yaml. Try re-generating it and committing the lockfile.”

It won’t fix it, but it’ll reduce your context switching and triage time.
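The "fetch the last run" step maps onto GitHub's REST API endpoint for listing workflow runs (`GET /repos/{owner}/{repo}/actions/runs`), which accepts `status`, `branch`, and `per_page` query parameters. The Python sketch below builds that request and condenses the first result; the owner and repository names are placeholders, and it assumes the API's default newest-first ordering and a token with read access to Actions:

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

API = "https://api.github.com"


def failed_runs_url(owner: str, repo: str, branch: Optional[str] = None) -> str:
    """URL for GitHub's "List workflow runs for a repository" endpoint."""
    params = {"status": "failure", "per_page": "1"}
    if branch:
        params["branch"] = branch
    return f"{API}/repos/{owner}/{repo}/actions/runs?{urllib.parse.urlencode(params)}"


def fetch_json(url: str, token: str) -> dict:
    """GET a GitHub API URL with a bearer token (performs a network call)."""
    req = urllib.request.Request(url, headers={
        "Accept": "application/vnd.github+json",
        "Authorization": f"Bearer {token}",
    })
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def summarize_run(payload: dict) -> Optional[str]:
    """One-line summary of the first run in the response, or None if empty."""
    runs = payload.get("workflow_runs", [])
    if not runs:
        return None
    run = runs[0]
    return (f"{run['name']} #{run['run_number']} ({run['conclusion']}) "
            f"at {run['head_sha'][:7]}: {run['html_url']}")
```

Downloading and parsing the run's job logs is a separate API call not shown here; that text is what the LLM would then summarize.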

Best Practices for Teams Using AI Workflows

1. Use Generative AI for Intent Parsing, Not Execution

Generative AI, such as GPT-based models, is excellent at understanding human language; it can take vague, unstructured prompts like “Can you restart payments in staging?” and parse them into structured parameters like service name and environment. This makes it ideal for handling the intent recognition layer of any AI system. However, it should never be responsible for deciding or executing infrastructure logic.

The outputs of an LLM are inherently probabilistic and unpredictable, which is fine for generating text or summaries but risky for running production commands. Instead, teams should treat generative AI as a natural-language frontend that feeds into deterministic backends: rule-based, pre-approved workflows that handle real execution.
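In code, "frontend, not executor" usually means validating the model's structured output before anything acts on it. This Python sketch assumes the LLM was asked to reply with a JSON object containing `action`, `service`, and `environment`; the allowed values are hypothetical policy, and anything outside them is refused rather than guessed at:

```python
import json
from dataclasses import dataclass

ALLOWED_ACTIONS = {"restart", "scale"}      # hypothetical policy
ALLOWED_ENVIRONMENTS = {"dev", "staging"}   # hypothetical policy
FIELDS = {"action", "service", "environment"}


@dataclass(frozen=True)
class ParsedIntent:
    action: str
    service: str
    environment: str


def validate_llm_output(raw: str) -> ParsedIntent:
    """Accept the model's JSON only if it matches the expected shape and policy."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on non-JSON text
    if not isinstance(data, dict) or set(data) != FIELDS:
        raise ValueError(f"unexpected structure: {raw!r}")
    if data["action"] not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {data['action']!r}")
    if data["environment"] not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"unsupported environment: {data['environment']!r}")
    return ParsedIntent(**data)
```

Only a validated `ParsedIntent` is handed to the deterministic backend; free-form model text never reaches it.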

2. Map Prompts to Pre-Defined, Whitelisted Workflows

Every prompt passed into an AI system should be mapped to a known, approved internal workflow. These workflows should be written and owned by the platform or SRE team and parameterized in a way that guards against unintended consequences. For instance, if a user asks to restart a service, the assistant should only succeed if there is a registered workflow for that exact operation, with clear input validation rules.

If the user types a prompt that doesn’t match anything explicitly allowed (say, “terminate all prod instances”), the system should immediately reject or escalate it. This mapping approach is essential for enforcing operational boundaries and ensuring no LLM prompt leads directly to unsafe or unauthorized execution paths.
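A minimal Python sketch of that mapping, with hypothetical workflow names: requests that match a registered workflow run, destructive verbs are escalated to a human, and everything else is rejected. Keying the registry on both action and environment means a workflow approved for staging does not silently apply to production:

```python
from enum import Enum
from typing import Optional, Tuple


class Decision(Enum):
    RUN = "run"
    REJECT = "reject"
    ESCALATE = "escalate"


# Hypothetical registry: (action, environment) -> workflow owned by the platform team.
REGISTERED = {
    ("restart", "dev"): "restart-service-dev",
    ("restart", "staging"): "restart-service-staging",
}
# Hypothetical list of verbs that should reach a human rather than be dropped.
ESCALATE_VERBS = {"terminate", "delete", "drop"}


def route(action: str, environment: str) -> Tuple[Decision, Optional[str]]:
    workflow = REGISTERED.get((action, environment))
    if workflow is not None:
        return Decision.RUN, workflow
    if action in ESCALATE_VERBS:
        return Decision.ESCALATE, None
    return Decision.REJECT, None
```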

Conclusion

The hype around AI copilots is real, but so are the risks. For developers managing production systems, the line between automation and autonomy must be drawn carefully.

Kubiya offers a pragmatic path forward:

- Natural prompts for humans

- Deterministic execution for infra

- Guardrails, auditing, and RBAC by default

It’s not about choosing deterministic vs generative; it’s about using each where it fits best. And for platform teams looking to empower developers without compromising control, Kubiya is a tool worth serious consideration.

About the author

Amit Eyal Govrin

At AWS, Amit oversaw strategic DevOps partnerships, where he repeatedly encountered industry-leading DevOps companies struggling with the same pain point: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.