Kubiya vs CrewAI: A Practical Comparison of Multi-Agent Platforms for DevOps Teams

Amit Eyal Govrin

TL;DR

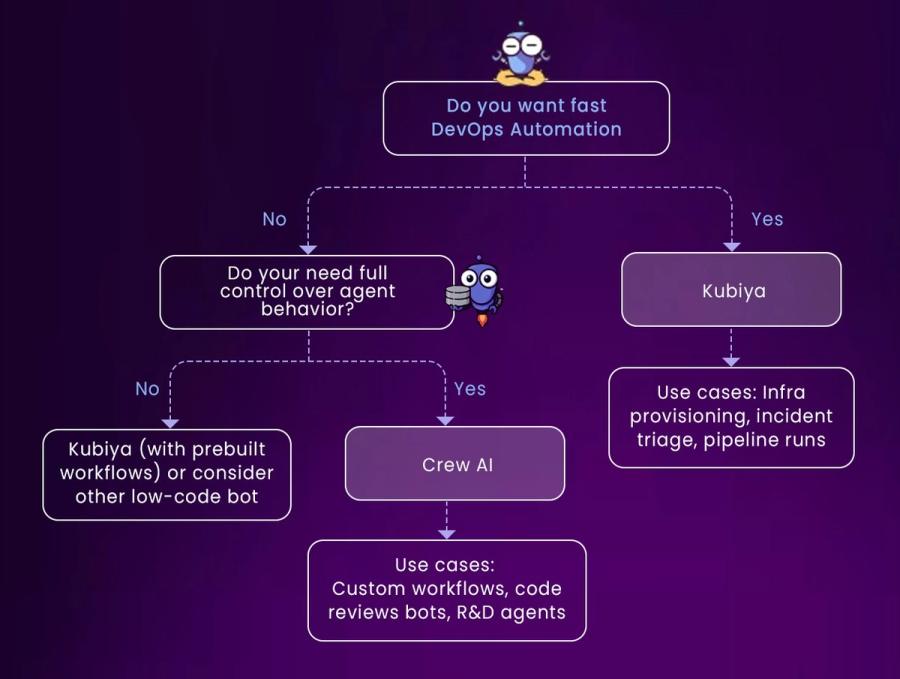

- Kubiya: Built for DevOps teams. Slack-native, scoped memory, pre-integrated with Terraform, CI/CD, and cloud-native tools. Great for platform engineers and SREs looking for automation inside existing workflows.

- CrewAI: Highly flexible framework. Build custom autonomous agents with programmable memory and coordination. Ideal for AI-native use cases or internal R&D tools.

- Use Case Fit: Use Kubiya for fast infra automation. Choose CrewAI if you’re building bespoke workflows and want total agent control.

- Tradeoffs: Kubiya is production-oriented and responds in real time inside existing workflows; CrewAI is more experimental and requires more engineering effort.

Multi-Agent Platforms in the DevOps Landscape

DevOps is no longer just about writing bash scripts, juggling CI/CD platforms, or choosing between Terraform and Pulumi. It's about coordinating systems, tools, and humans in environments that change minute by minute. Think of DevOps as an airport control tower: every command has to coordinate with several moving pieces. Now imagine trying to do that with static, hardcoded bots. It breaks fast.

That’s where multi-agent platforms enter the scene. These aren't dumb automations; they’re intelligent workers that remember what happened before, collaborate with other agents, and adapt their responses to the situation. An agent can provision an environment, fetch logs, evaluate compliance, and escalate to another agent, all while retaining memory of context.

The difference from traditional bots is like the difference between a vending machine and a personal assistant. A vending machine gives you a snack. An assistant knows your dietary preferences, notices you skipped lunch, and brings you a protein bar unprompted.

The market data points the same way. According to Statista, the AI software market, including intelligent agent systems, is projected to exceed $300 billion by 2026. DevOps teams are no exception to this growth curve.

Kubiya vs CrewAI at a Glance

At first glance, both Kubiya and CrewAI promise “multi-agent automation.” But their philosophies, workflows, and user targets are completely different.

Kubiya behaves like a DevOps team member that lives inside Slack or MS Teams. You say “rollback prod to the last stable config,” and it recalls the relevant context, checks compliance, and then applies the Terraform change. It’s task-aware, role-aware, and security-conscious. There is no need to define agents manually: you interact through natural language, and Kubiya maps your request to structured workflows.

CrewAI, in contrast, is more like a sandbox for designing autonomous workers. You define agents with roles, memory, tools, and prompts. It’s not DevOps-aware by default; you build everything from memory architecture to execution chains. Want a ‘Code Reviewer’ agent that collaborates with a ‘CI Runner’ and a ‘Notifier’ agent? You can design it all.

The tradeoff is immediacy vs flexibility. Kubiya is plug-and-play with Terraform, GitHub Actions, Datadog, and more. CrewAI gives you infinite possibilities, but zero defaults.

Kubiya: Designed for Real-World DevOps

Kubiya’s standout feature is its deep contextual memory tied to Slack and workspace-specific channels. If you previously created a staging environment in #dev-staging, Kubiya remembers the region, IaC module, and even your deployment preferences. It scopes this memory to that channel, preventing accidental cross-environment commands.

You can ask it things like “recreate the sandbox like we did last week,” and it will reconstruct the action by linking memory with infrastructure context. It’s like having a Terraform state file with natural language bindings.

Its integrations run deep: native hooks into Terraform, Helm, GitHub Actions, AWS SDKs, Jira, and Datadog. This isn’t just a chatbot with LLM prompts. It’s a full-fledged orchestration layer that can execute workflows while enforcing RBAC, audit trails, and policy-as-code compliance.

Security-wise, it integrates with Okta and Azure AD and supports scoped permissions. Every action is logged, traceable, and reversible. This makes Kubiya production-safe in high-stakes cloud-native setups.

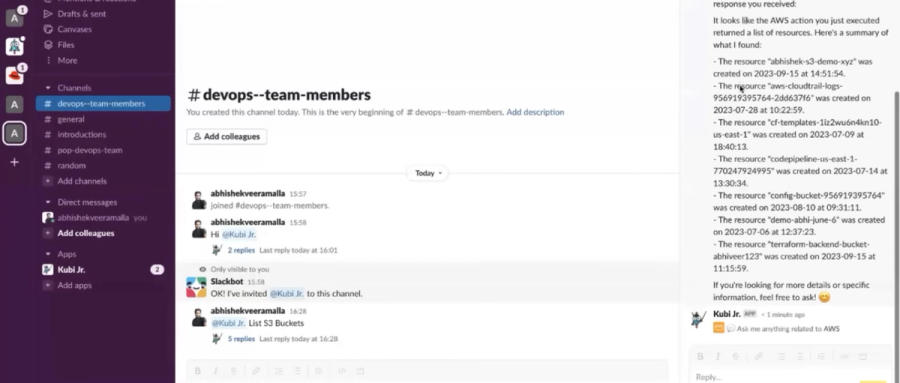

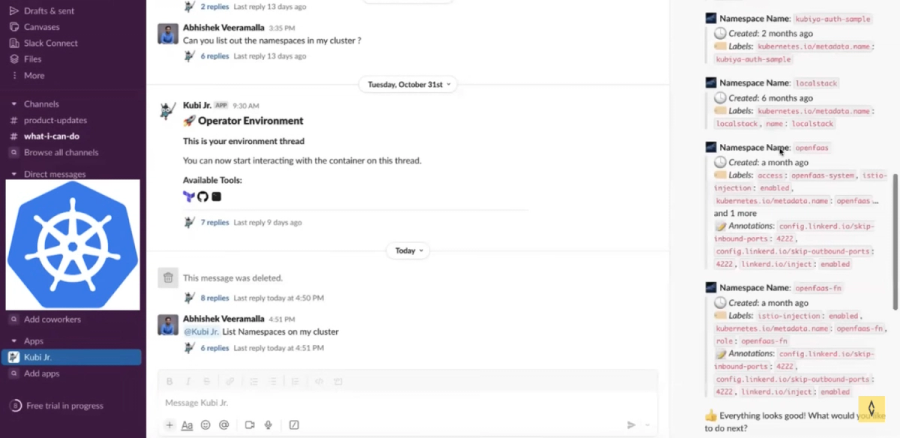

Once Kuby is integrated with Slack and AWS, users can interact with their cloud infrastructure through natural language. For instance, a simple prompt like “list S3 buckets” triggers Kuby to execute the relevant AWS API call and return the results, which can be verified in the AWS console. Kuby also supports querying other services such as Lambda and IAM, whether that means listing all Lambda functions (and confirming when there are none) or retrieving the IAM roles in the account.

One of Kuby’s most powerful features is its AI-driven prompt suggestions, which adapt to the context of the user’s query and enable actions like creating, deleting, or updating AWS resources without writing any code. The documentation also offers example prompts for more complex tasks, like listing EC2 instances or fetching key pairs, so users can gradually build fluency with the assistant.

CrewAI: Build-Your-Own Agent Framework

CrewAI is all about customization. It doesn’t assume anything about your domain. Instead, it gives you composable building blocks: agents, roles, tools, memory stores, and coordination logic. You define each piece.

Want to build a set of agents that simulate DevOps workflows, write unit tests, review PRs, or summarize incidents from logs? You can do that, but you have to write the orchestration logic, tools, and memory plumbing yourself.

This is ideal for LLM-native teams or research orgs building internal dev tools. CrewAI agents can communicate via messages, share memory in JSON format or vector stores, and execute functions based on their assigned role. You're building the architecture, not configuring a prebuilt one.
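To make those building blocks concrete, here is a minimal plain-Python sketch of role-based agents passing messages to each other. It deliberately does not use the CrewAI API: the `Message`, `Agent`, and `run_chain` names are hypothetical, the tools are stand-in lambdas rather than LLM calls, and the three roles simply mirror the ‘Code Reviewer’, ‘CI Runner’, and ‘Notifier’ example mentioned earlier.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Message:
    sender: str
    content: str


@dataclass
class Agent:
    role: str
    # The tool is the one function this role is allowed to execute.
    tool: Callable[[str], str]
    inbox: List[Message] = field(default_factory=list)

    def handle(self, message: Message) -> Message:
        """Record the incoming message, run the role's tool, and reply."""
        self.inbox.append(message)
        return Message(sender=self.role, content=self.tool(message.content))


def run_chain(agents: List[Agent], initial: str) -> List[Message]:
    """Pass a message through the agents in order; each reply feeds the next."""
    message = Message(sender="user", content=initial)
    transcript = []
    for agent in agents:
        message = agent.handle(message)
        transcript.append(message)
    return transcript


# Hypothetical tools standing in for real LLM calls or CI integrations.
reviewer = Agent("Code Reviewer", lambda diff: f"approved: {diff}")
ci_runner = Agent("CI Runner", lambda review: f"tests passed for [{review}]")
notifier = Agent("Notifier", lambda result: f"notify team: {result}")

transcript = run_chain([reviewer, ci_runner, notifier], "fix-typo.patch")
for msg in transcript:
    print(f"{msg.sender}: {msg.content}")
```

Even in this toy form, the coordination logic (`run_chain`) is code you own, which is the point: a framework gives you the pieces, and the wiring is up to you.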

But this flexibility has a cost. There are no Slack plugins, no pre-integrated CI tools, and no opinionated defaults. You’ll likely need Python, LangChain familiarity, and DevOps context to wire it up effectively.

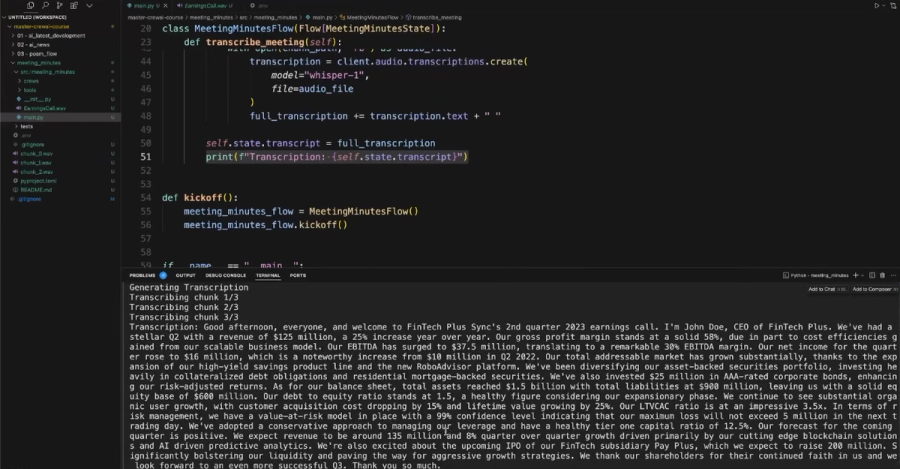

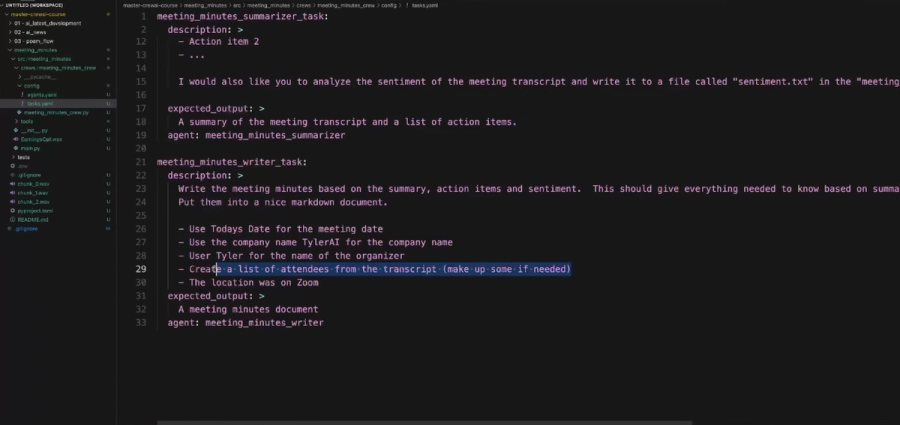

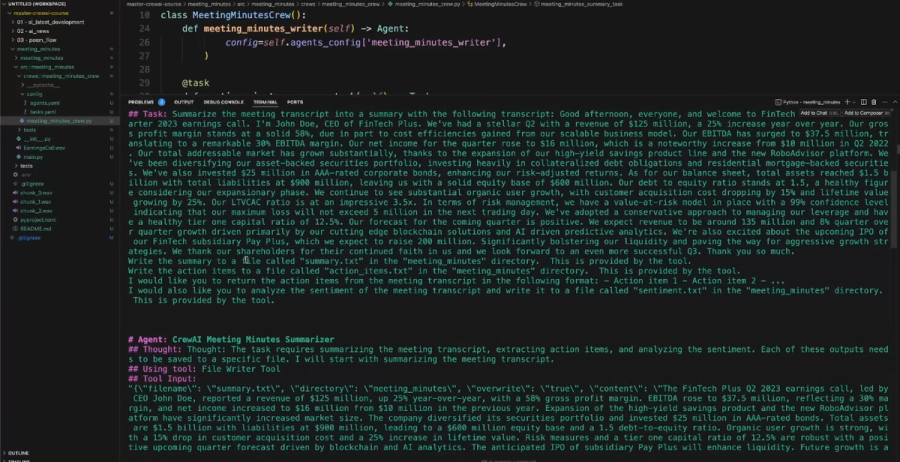

As a worked example, we built an automated system with CrewAI that transcribes Zoom meeting audio, summarizes key points, extracts action items, performs sentiment analysis, and prepares formatted meeting minutes. The process starts by initializing a CrewAI flow named meeting_minutes, modifying its state to hold the transcript and the final summary, and using OpenAI’s Whisper model for speech-to-text transcription. To handle large audio files, the audio is split into 60-second chunks with pydub, each chunk is transcribed individually, and the results are concatenated. Once transcription finishes, a generate_meeting_minutes function triggers a Crew with two agents and tasks: one extracts summaries, action items, and sentiment, and the other compiles everything into a well-structured Markdown document using file writer tools.
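The chunking step is easy to get subtly wrong at the tail of the recording, so here is a small sketch of just that piece. It is not the code from the project described above: `chunk_ranges` and `join_transcripts` are hypothetical helper names, and the transcription call itself is left out. The ranges are in milliseconds because pydub's `AudioSegment` is sliced by millisecond offsets, so each `(start, end)` pair could be applied as `audio[start:end]`.

```python
from typing import List, Tuple


def chunk_ranges(total_ms: int, chunk_ms: int = 60_000) -> List[Tuple[int, int]]:
    """Return (start, end) millisecond offsets covering the whole recording."""
    if total_ms < 0 or chunk_ms <= 0:
        raise ValueError("total_ms must be >= 0 and chunk_ms must be > 0")
    return [
        (start, min(start + chunk_ms, total_ms))
        for start in range(0, total_ms, chunk_ms)
    ]


def join_transcripts(parts: List[str]) -> str:
    """Concatenate per-chunk transcripts, dropping empty chunks."""
    return " ".join(part.strip() for part in parts if part.strip())


# A 2.5-minute recording yields two full chunks and one 30-second remainder.
ranges = chunk_ranges(150_000)
print(ranges)  # [(0, 60000), (60000, 120000), (120000, 150000)]
```

Clamping the last chunk with `min` keeps the final segment from running past the end of the audio, and skipping empty parts in `join_transcripts` avoids stray spaces when a chunk contains only silence.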

Use Case Comparison: Who Wins Where?

Let’s break this down practically.

Say you're a platform engineer who needs to provision environments on-demand. With Kubiya, you say, “create a sandbox with the same config as yesterday,” and it handles Terraform, IAM, and Slack notifications. The memory and workflow structure is baked in.

Try that in CrewAI? You’d need to define agents for provisioning, memory for config history, tools to run Terraform, plus coordination logic to map past context to current commands.

Another case: log triage. Kubiya integrates with Datadog, so you can ask, “what changed before CPU spiked?” It’ll fetch logs, correlate metrics, and suggest potential causes.

CrewAI could do this if you write an agent that knows how to fetch logs, another to analyze, another to summarize, and coordinate them. Powerful, but more engineering effort.
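As a rough illustration of that fetch, analyze, and summarize split, the sketch below models each agent as a plain function. Everything here is an assumption for illustration: the `Event` type, the hard-coded sample events, and the 15-minute lookback window are invented, and no real log or metrics API is called.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    minute: int   # minutes since the start of the observation window
    kind: str     # "deploy", "config", or "metric"
    detail: str


def fetch_events() -> List[Event]:
    """Fetcher agent: returns sample events instead of calling a log API."""
    return [
        Event(5, "deploy", "api v1.4.2 rolled out"),
        Event(42, "config", "connection pool size 50 -> 500"),
        Event(47, "metric", "cpu 92%"),
        Event(60, "deploy", "worker v2.0.1 rolled out"),
    ]


def analyze(events: List[Event], spike_minute: int, window: int = 15) -> List[Event]:
    """Analyzer agent: keep non-metric changes in the window before the spike."""
    return [
        e for e in events
        if e.kind != "metric" and spike_minute - window <= e.minute < spike_minute
    ]


def summarize(suspects: List[Event]) -> str:
    """Summarizer agent: turn the suspect list into a one-line report."""
    if not suspects:
        return "No changes found before the spike."
    return "Possible causes: " + "; ".join(
        f"{e.kind} at t+{e.minute}m ({e.detail})" for e in suspects
    )


report = summarize(analyze(fetch_events(), spike_minute=47))
print(report)  # Possible causes: config at t+42m (connection pool size 50 -> 500)
```

In a real setup each function would wrap an LLM-backed agent and a tool call, but the shape of the problem stays the same: three responsibilities, plus the glue that hands one agent's output to the next.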

Where CrewAI shines is experimentation. Want agents that simulate code reviews, explore codebases, or act as intelligent planners? CrewAI gives you the bones to build that. Kubiya doesn’t; it stays focused on production-friendly DevOps workflows.

Which One Fits Your Team? A Quick Matrix

Let’s face it, you don’t always need a full framework to make a smart decision. Sometimes, all you need is a clear view of how a tool aligns with your team’s constraints, expertise, and velocity goals.

Here’s a comparison matrix to help you quickly assess which platform might work better for your current setup:

| Criteria | Kubiya | CrewAI |
|---|---|---|
| Team Size | Mid to large DevOps/platform teams | Small R&D teams or AI/infra builders |
| Setup Time | Minutes (SaaS onboarding + Slack) | Days (custom Python config + infra) |
| Programming Skill Needed | Low – config-driven, no prompt writing | High – Python + LLM prompt design |
| DevOps Integration | Native: Terraform, Helm, Jenkins, etc. | DIY: Build adapters into your workflows |
| Memory Model | Scoped to user, channel, workspace | Programmable, but must be defined |
| Security & Compliance | RBAC, SSO, audit-ready | Must build your own controls |
| Ideal For | Automating existing DevOps workflows | Prototyping new agent behaviors |

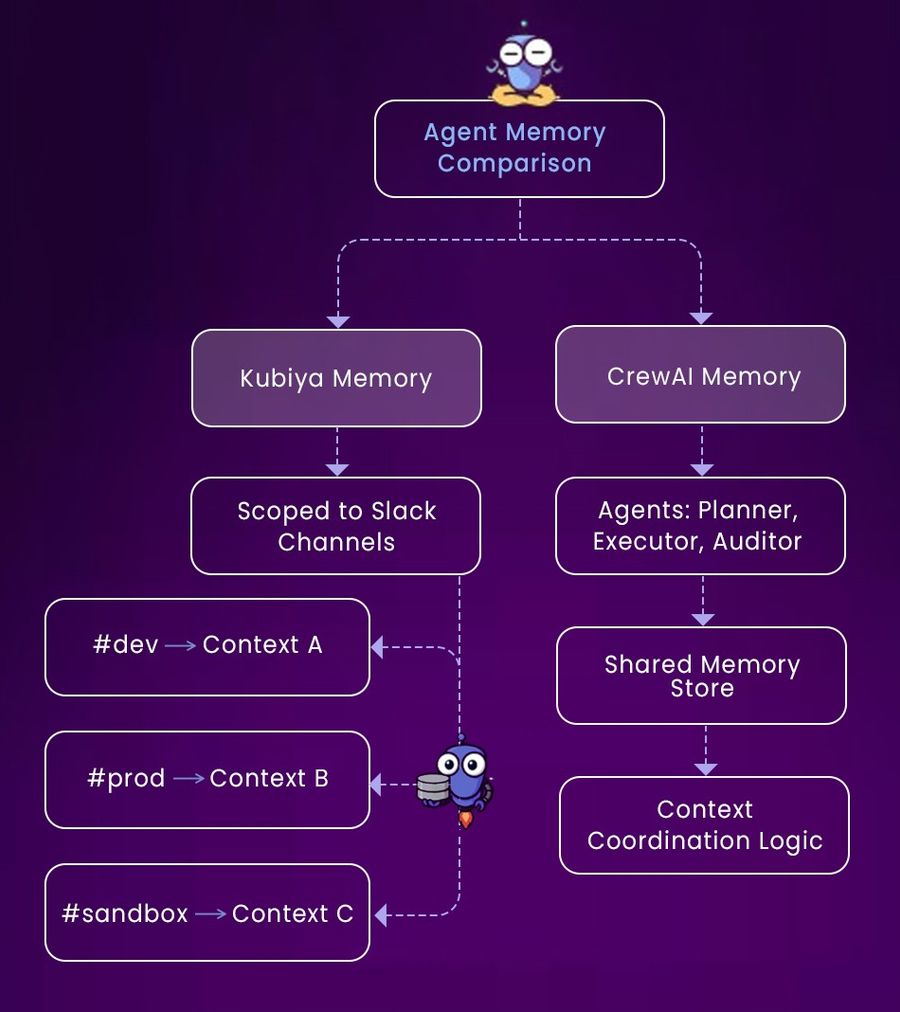

Memory and Architecture: Scoped vs Shared

Kubiya treats memory like project-specific storage. Actions in #infra-dev don’t leak into #prod-maintenance. It remembers toolchains, workflows, inputs, and decisions. That makes it great for collaboration: engineers can reference shared memory when debugging or rerunning tasks.
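The idea of channel-scoped memory can be sketched in a few lines of Python. This is not Kubiya's implementation, which the article does not describe; `ChannelMemory` and its methods are hypothetical names, and the stored values are made up. The sketch only shows the isolation property: a lookup never consults another channel's namespace.

```python
from collections import defaultdict
from typing import Any, Dict, Optional


class ChannelMemory:
    """Keeps a separate key-value namespace per channel."""

    def __init__(self) -> None:
        self._store: Dict[str, Dict[str, Any]] = defaultdict(dict)

    def remember(self, channel: str, key: str, value: Any) -> None:
        self._store[channel][key] = value

    def recall(self, channel: str, key: str) -> Optional[Any]:
        # Look only inside the caller's channel; other channels are never consulted.
        return self._store.get(channel, {}).get(key)


memory = ChannelMemory()
memory.remember("#infra-dev", "region", "us-east-1")
memory.remember("#infra-dev", "module", "sandbox-vpc")

print(memory.recall("#infra-dev", "region"))         # us-east-1
print(memory.recall("#prod-maintenance", "region"))  # None
```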

CrewAI’s memory is a blank canvas. You define the memory store (JSON, Redis, or a vector DB) and how agents interact with it. One agent may query for deployment history, another for error logs. But unless you structure memory properly, agents won’t “remember” anything useful.

This difference matters when scaling. Kubiya scales across teams with consistent behavior and predictable context. CrewAI scales architecturally, letting you define memory syncs across distributed agents, but that sync is yours to build and maintain.

Think of it as the difference between a knowledge base built for you, versus an open notebook you’re expected to organize yourself.

Developer Experience and Operational Maturity

Kubiya is all about immediacy. Within an hour of setup, your team can create environments, manage pipelines, and investigate incidents, all from Slack. No LLM training, no Python scripting. The UI allows configuration of workflows, environment bindings, and RBAC mappings.

CrewAI, in contrast, is developer-first. You install it, write agents, configure their toolkits, and test interactions. Think of it more like building microservices. You’ll want CI/CD for agents, version control for prompt templates, and observability hooks for agent runs.

For DevOps teams with minimal ML experience, Kubiya is safer and more scalable. For R&D teams or orgs building LLM-native agents, CrewAI gives total architectural freedom.

When to Choose What?

If you’re managing infrastructure, CI/CD, and incident response inside Slack, Kubiya fits natively. It gives platform engineers a way to reduce cognitive and operational load without teaching agents how to use Terraform.

If you’re building internal tools where agents behave like knowledge workers, for example, conducting research, summarizing logs, or reviewing PRs, CrewAI is your playground. You’ll need to code, but the possibilities are broader.

Ultimately, Kubiya is for running real infra. CrewAI is for inventing new ways of working with LLMs.

Best Practices for Agent Adoption in DevOps

Adopting multi-agent systems in DevOps is not just about wiring in AI; it's about reshaping how humans and machines collaborate in production environments. These systems introduce flexibility, but also risk if deployed carelessly. Below are some critical practices, illustrated with real-world analogies, to ensure safe and effective adoption, regardless of whether you're using Kubiya, CrewAI, or another platform.

1. Scope Memory Like You Would Scope Access in IAM Policies

Imagine giving a junior engineer full access to your entire cloud account on their first day. Sounds reckless, right? Yet that’s exactly what happens when you let agents operate with unscoped or global memory. An agent shouldn’t have the power to recall everything from every workspace, user session, or system unless it’s explicitly needed.

Instead, treat memory like IAM access policies: keep it tight, relevant, and need-to-know. In Kubiya, this could mean scoping memory to a Slack channel or Terraform workspace. In CrewAI, this might involve defining JSON-based memory shards accessible only to specific agent roles. Think of it like compartmentalizing data inside project vaults: agents get access to the vaults they need, and nothing more. This reduces context bleed, avoids accidental command reuse, and makes agent behavior more predictable.
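One way to picture role-restricted memory shards is an allowlist checked on every read and write, much like an IAM policy evaluation. The sketch below is a plain-Python illustration under that assumption; `ShardedMemory`, the role names, and the shard names are all hypothetical and are not part of CrewAI or Kubiya.

```python
import json
from typing import Dict, Set


class ShardedMemory:
    """JSON-serializable memory shards with a per-role allowlist."""

    def __init__(self, access: Dict[str, Set[str]]) -> None:
        self._access = access          # role -> shard names it may use
        self._shards: Dict[str, dict] = {}

    def _check(self, role: str, shard: str) -> None:
        if shard not in self._access.get(role, set()):
            raise PermissionError(f"{role!r} may not access shard {shard!r}")

    def write(self, role: str, shard: str, key: str, value) -> None:
        self._check(role, shard)
        self._shards.setdefault(shard, {})[key] = value

    def read(self, role: str, shard: str, key: str):
        self._check(role, shard)
        return self._shards.get(shard, {}).get(key)

    def dump(self, shard: str) -> str:
        """Serialize one shard to JSON, for example to persist it to disk."""
        return json.dumps(self._shards.get(shard, {}), sort_keys=True)


memory = ShardedMemory({
    "provisioner": {"deployments"},
    "log-analyst": {"incidents"},
})
memory.write("provisioner", "deployments", "last_env", "staging")

try:
    memory.read("log-analyst", "deployments", "last_env")
except PermissionError as exc:
    print(f"blocked: {exc}")
```

Denying by default (an unknown role gets an empty allowlist) mirrors the need-to-know principle: access has to be granted explicitly, never assumed.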

2. Treat Agents Like You Treat Microservices, Not Macros

If you treat agents like glorified macros that can string together commands, you're going to run into problems. Agents, like microservices, are autonomous components in a system, and they need versioning, observability, and rollback support. You wouldn't deploy a new version of a microservice to production without tests, logs, and monitoring, so why do it for a new agent prompt or toolchain?

Each agent should have a defined contract: what input it expects, what it does, what outputs it returns. Store agent configurations in Git. Track changes to their behavior over time. Pipe their decisions through logging pipelines. Think of it as shifting from scripting to service architecture: the same discipline applies, just with a different execution model.
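A contract like that can be as simple as a versioned record of required inputs and promised outputs, checked around every agent call. The following is a minimal sketch under that assumption; `AgentContract`, `run_with_contract`, and the `pr-summarizer` agent are hypothetical, and the handler is a stand-in for whatever LLM-backed logic the agent really runs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class AgentContract:
    name: str
    version: str
    required_inputs: Tuple[str, ...]
    output_keys: Tuple[str, ...]


def run_with_contract(
    contract: AgentContract,
    handler: Callable[[Dict[str, str]], Dict[str, str]],
    payload: Dict[str, str],
) -> Dict[str, str]:
    """Validate inputs, call the agent handler, then validate its outputs."""
    label = f"{contract.name}@{contract.version}"
    missing = [k for k in contract.required_inputs if k not in payload]
    if missing:
        raise ValueError(f"{label}: missing inputs {missing}")
    result = handler(payload)
    absent = [k for k in contract.output_keys if k not in result]
    if absent:
        raise ValueError(f"{label}: missing outputs {absent}")
    return result


PR_SUMMARIZER = AgentContract(
    name="pr-summarizer",
    version="1.2.0",
    required_inputs=("title", "diff"),
    output_keys=("summary",),
)


def summarize_pr(payload: Dict[str, str]) -> Dict[str, str]:
    # Stand-in for an LLM call: count changed lines in the diff.
    changed = len(payload["diff"].splitlines())
    return {"summary": f"{payload['title']} ({changed} lines changed)"}


result = run_with_contract(
    PR_SUMMARIZER, summarize_pr, {"title": "Fix retry loop", "diff": "+a\n-b"}
)
print(result)  # {'summary': 'Fix retry loop (2 lines changed)'}
```

Because the contract is a plain, immutable value, it can live in Git next to the agent's prompt and be bumped like any other versioned interface.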

If an agent can trigger a kubectl delete or a terraform apply, treat that capability like giving a service write access to production infrastructure. Wrap it in guardrails. Log every call. Require approvals where necessary.
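Here is one possible shape for such a guardrail: a wrapper that writes an audit record for every command and refuses destructive ones unless an approver is named. It is a sketch, not a production control. The `guarded_run` function, the prefix list, and the in-memory `audit_log` are assumptions for illustration, and `fake_execute` stands in for a real subprocess call so that nothing is actually run.

```python
import time
from typing import Callable, Dict, List, Optional

# Commands treated as destructive in this sketch; a real list would be policy-driven.
DESTRUCTIVE_PREFIXES = ("kubectl delete", "terraform apply", "terraform destroy")

audit_log: List[Dict[str, object]] = []


def guarded_run(
    command: str,
    agent: str,
    execute: Callable[[str], str],
    approved_by: Optional[str] = None,
) -> str:
    """Log every call; refuse destructive commands that lack an approver."""
    destructive = command.startswith(DESTRUCTIVE_PREFIXES)
    allowed = (not destructive) or approved_by is not None
    audit_log.append({
        "ts": time.time(),
        "agent": agent,
        "command": command,
        "destructive": destructive,
        "approved_by": approved_by,
        "allowed": allowed,
    })
    if not allowed:
        return "blocked: approval required"
    return execute(command)


def fake_execute(command: str) -> str:
    # Stand-in for a real subprocess call; nothing is actually executed.
    return f"ran: {command}"


print(guarded_run("kubectl get pods", "triage-bot", fake_execute))
print(guarded_run("kubectl delete pod api-0", "triage-bot", fake_execute))
print(guarded_run("terraform apply", "deploy-bot", fake_execute, approved_by="alice"))
```

Note that the audit record is written before the allow/deny decision takes effect, so blocked attempts leave a trace too. Prefix matching is intentionally crude here; a real deployment would parse commands properly or restrict agents to a fixed set of tools.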

3. Keep Humans in the Loop, Always

Finally, never forget: agents are helpers, not deciders. Think of them as co-pilots: they might suggest a great route, but you still have your hands on the controls. In high-impact scenarios, like rotating credentials, reverting infrastructure, or applying security patches, agent actions should trigger reviews, not execute immediately.

Use confirmation prompts. Add policy-based approval workflows. Even something as simple as “Are you sure you want to roll back production?” can prevent catastrophe. In platforms like Kubiya, you can configure these confirmations as part of the workflow schema. In CrewAI, this might mean routing agent suggestions to a human validator agent or escalation channel.
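A policy-based approval workflow can be reduced to a small queue: low-impact actions run immediately, while high-impact ones wait for a human decision. The sketch below illustrates that flow in plain Python. `ApprovalQueue`, the action names, and the `HIGH_IMPACT` set are hypothetical and do not reflect the workflow schema of Kubiya or any CrewAI feature.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Actions that must be reviewed by a human in this sketch.
HIGH_IMPACT = {"rollback_production", "rotate_credentials", "apply_security_patch"}


@dataclass
class ApprovalQueue:
    pending: Dict[int, str] = field(default_factory=dict)
    executed: List[str] = field(default_factory=list)
    _next_id: int = 1

    def propose(self, action: str) -> str:
        """Run low-impact actions now; hold high-impact ones for a human."""
        if action not in HIGH_IMPACT:
            self.executed.append(action)
            return "executed"
        ticket = self._next_id
        self._next_id += 1
        self.pending[ticket] = action
        return f"pending review (ticket {ticket})"

    def review(self, ticket: int, approve: bool) -> str:
        """Human decision: approved actions execute, rejected ones are dropped."""
        action = self.pending.pop(ticket)
        if approve:
            self.executed.append(action)
            return f"approved: {action}"
        return f"rejected: {action}"


queue = ApprovalQueue()
print(queue.propose("fetch_logs"))           # executed
print(queue.propose("rollback_production"))  # pending review (ticket 1)
print(queue.review(1, approve=True))         # approved: rollback_production
```

The agent only ever calls `propose`; the `review` path is reserved for a person, whether that is a Slack button, a ticket, or an escalation channel.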

Think of fallback paths like fire escapes in a building: you hope you never need them, but you’d never move into an office that didn’t have one. Your agents shouldn’t be any different.

Conclusion

Kubiya and CrewAI both offer compelling visions of multi-agent systems: one for structured, secure DevOps, and the other for programmable, flexible coordination.

Kubiya is ready for your team today. CrewAI lets you build what might come next.

Choose the one that fits your maturity, your appetite for control, and the kind of automation your team actually needs, not the one that just checks the most boxes.

FAQs

1. Can I integrate CrewAI with Slack or Teams?

Yes, but not out-of-the-box. You’ll need to build or adapt plugins using Python or a bridge service.

2. Does Kubiya support GitHub Actions, Terraform, or Helm?

Yes. Kubiya offers native adapters for these tools and more, including Datadog and Jira.

3. Which platform is better for rapid onboarding?

Kubiya. Its SaaS model and prebuilt DevOps flows reduce setup time to minutes.

4. Which one gives me full control over agent architecture?

CrewAI gives you full control over agent architecture. It’s built for developers who want to define agent roles, memory, tools, and workflows from the ground up.

About the author

Amit Eyal Govrin

While overseeing strategic DevOps partnerships at AWS, Amit repeatedly encountered industry-leading DevOps companies struggling with the same pain point: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.