Kubiya vs LangGraph: Best LLM Orchestration for Developers

Amit Eyal Govrin

As LLM-powered agents gain adoption in engineering workflows, new layers of tooling are emerging to make them usable in production. Two such tools are Kubiya and LangGraph. They both aim to help teams automate complex, multi-step tasks through language models, but they take very different approaches.

This guide compares them side-by-side with practical use cases, code-driven examples, and architecture-level insights to help engineering teams choose what fits their stack.

What Problem Are These Tools Solving?

Engineering teams often get stuck on repetitive tasks: managing deployments, fetching logs, provisioning environments, or running diagnostics. LLMs are good at translating high-level intent into actions, but executing those actions reliably requires orchestration. That means handling state, memory, fallbacks, and permissions.

Kubiya and LangGraph both enable LLMs to act, not just chat. But they approach the problem from two ends of the spectrum. One is a prebuilt DevOps assistant. The other is a framework to build custom agents from scratch.

What is Kubiya?

Kubiya is a conversational DevOps assistant designed for self-service workflows. It integrates with systems like GitHub, AWS, Jenkins, Terraform, and more to expose operational tasks through natural language. Engineers interact with Kubiya via Slack, Microsoft Teams, or web chat. It sits between developers and the platform, reducing the need for tickets or manual coordination.

Key Capabilities

- Intent Recognition: Detects user intents like "restart staging" or "rotate secrets" and maps them to predefined workflows.

- Integrations: Supports a wide range of infra tools out of the box.

- Permissions and Approvals: Integrates with RBAC policies, supports approval chains, and logs all interactions.

- No-code authoring: DevOps teams can define workflows using a declarative YAML-based syntax.

- Deployment: Available as SaaS or self-hosted with enterprise features.

Kubiya works best when teams want to scale operational support without writing a single line of orchestration logic.
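To make the idea of mapping intents to predefined workflows concrete, here is a minimal sketch in plain Python. It is not Kubiya's implementation: the `WORKFLOWS` catalog, the `resolve_intent` function, and the naive keyword matching are all hypothetical stand-ins for real intent recognition.

```python
from typing import Optional

# Hypothetical catalog: workflow name -> keywords that must all appear.
WORKFLOWS = {
    "restart_staging": {"restart", "staging"},
    "rotate_secrets": {"rotate", "secrets"},
}


def resolve_intent(message: str) -> Optional[str]:
    """Return the first workflow whose keywords all appear in the message."""
    words = set(message.lower().split())
    for name, keywords in WORKFLOWS.items():
        if keywords <= words:  # every keyword is present in the message
            return name
    return None  # no predefined workflow matches
```

The point of the sketch is the shape of the contract: free-form text goes in, and either a known workflow name or nothing comes out. Anything that does not match a predefined workflow is simply not executed.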

What is LangGraph?

LangGraph is a low-level framework for building agentic LLM applications. It lets you define agents as state machines using directed graphs, which may contain cycles so an agent can loop back to an earlier step. Each node in the graph represents a discrete step: a tool call, a prompt chain, a decision gate, or a memory update. These agents are built in Python, typically with LangChain components.

Core Design

- Graph-Based State Management: Define transitions between steps explicitly, with full control over flow.

- Memory and Looping Support: Maintain conversation history or task state across multiple turns.

- Custom Tools: Integrate with internal APIs, shell scripts, or cloud platforms using Python.

- Composable Agents: Combine multiple tools and prompts to build deeply nested workflows.

- Deployment: Runs as part of a custom LangChain stack inside backend services or APIs.

LangGraph is for teams who need precise orchestration logic, want to build from scratch, and have the engineering capacity to manage and deploy custom agent frameworks.
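The graph-as-state-machine idea, including looping, can be illustrated without any framework. The sketch below is plain Python and does not use LangGraph's API; `attempt`, `route`, `NODES`, and `run` are hypothetical names, and the "work" is just a counter.

```python
from typing import Callable, Dict

State = Dict[str, int]


def attempt(state: State) -> State:
    # One unit of work; here it only counts how many times it ran.
    return {**state, "attempts": state["attempts"] + 1}


def route(state: State) -> str:
    # Decision gate: loop back until three attempts have been made.
    return "attempt" if state["attempts"] < 3 else "done"


NODES: Dict[str, Callable[[State], State]] = {"attempt": attempt}


def run(state: State, entry: str = "attempt") -> State:
    """Walk the graph from the entry node until the router returns 'done'."""
    current = entry
    while current != "done":
        state = NODES[current](state)
        current = route(state)
    return state


final = run({"attempts": 0})
```

Each node takes the current state and returns an updated copy, and the router decides which node runs next. That separation between "do a step" and "choose the next step" is what gives you explicit control over flow.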

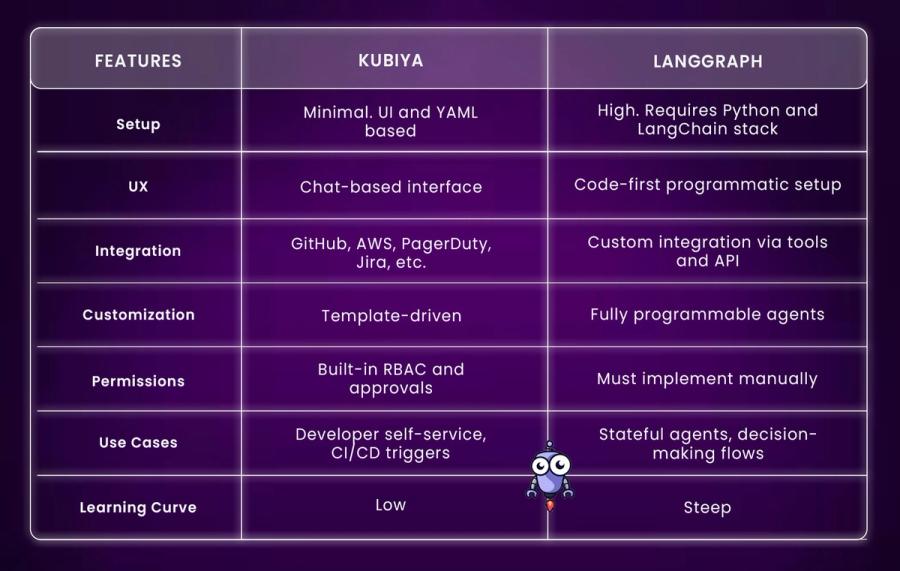

Kubiya vs LangGraph: Feature Comparison

When to Use Kubiya

Automating DevOps Tasks

Kubiya is great for operational tasks that repeat across teams. For example:

- Restarting pods

- Rotating secrets

- Rolling back deployments

- Running postmortems or change reports

Kubiya exposes these as predefined tasks via chat. You can restrict usage to specific roles and require approvals for risky actions.
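Conceptually, role restrictions and approval requirements amount to a gate in front of each task. The following is a rough sketch of that gate, not Kubiya's actual logic; `Task`, `AuditLog`, and `authorize` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Task:
    name: str
    roles: Set[str]                  # roles allowed to trigger the task
    requires_approval: bool = False  # risky tasks need a sign-off


@dataclass
class AuditLog:
    entries: List[str] = field(default_factory=list)


def authorize(task: Task, user_roles: Set[str], approved: bool, log: AuditLog) -> bool:
    """Allow the task only if a role matches and any required approval exists."""
    if not (task.roles & user_roles):
        decision = "denied: role"
    elif task.requires_approval and not approved:
        decision = "pending: approval"
    else:
        decision = "allowed"
    log.entries.append(f"{task.name} -> {decision}")  # every decision is recorded
    return decision == "allowed"
```

Note that the log entry is written for denied and pending requests too, not only for successful runs.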

Enabling Developer Self-Service

When developers need logs or want to re-trigger builds, Kubiya allows them to do this without logging into Jenkins or Kubernetes. This reduces wait time and unburdens platform teams.

Quick Implementation

Because Kubiya is pre-integrated with common DevOps tools, onboarding is fast. You describe tasks declaratively instead of writing orchestration logic.

When to Use LangGraph

Building Stateful, Custom LLM Agents

LangGraph is best used when you’re designing LLM-powered agents that need to:

- Remember previous context

- Branch based on conditions

- Call different tools based on outcomes

- Retry or recover from failure

For example, you might design an agent that diagnoses failed deployments, suggests fixes, and opens remediation PRs. Each of these steps becomes a node in a LangGraph.
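Two of the behaviors listed above, retrying after failure and branching on an outcome, can be sketched in a few lines of plain Python. This is not LangGraph's API (in LangGraph itself, routing like this is typically expressed with conditional edges); `run_with_retry`, `next_node`, and the `bad_config` diagnosis label are hypothetical.

```python
from typing import Callable


def run_with_retry(step: Callable[[], str], max_attempts: int = 3) -> str:
    """Call step until it succeeds or attempts run out; re-raise the last error."""
    last_error: Exception = RuntimeError("step was never attempted")
    for _ in range(max_attempts):
        try:
            return step()
        except RuntimeError as err:
            last_error = err  # remember the failure and try again
    raise last_error


def next_node(diagnosis: str) -> str:
    # Branch on the outcome of the diagnosis step.
    return "open_pr" if diagnosis == "bad_config" else "escalate"
```

A diagnosis node would be wrapped in `run_with_retry`, and its result passed to `next_node` to pick between opening a remediation PR and escalating to a human.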

Fine-Grained Orchestration Control

LangGraph gives you total control over how agents reason, plan, and act. You define the structure, memory, control flow, and error handling. This is important for agents that interact with critical systems or follow complex processes.

Embedding in Custom Applications

LangGraph is intended for backend services, APIs, or internal platforms. You can combine it with LangServe to expose your agents via endpoints or integrate into existing dashboards.

Example: Automating Pod Log Access

Using Kubiya

Here’s an example of exposing a Kubernetes log query via Kubiya:

    task:
      name: "Fetch pod logs"
      trigger: "get logs for service X"
      action: kubectl logs service-x -n prod
      roles: [devops, sre]
      requires_approval: true

This can be triggered from Slack with a message like:

"Get logs for service X"

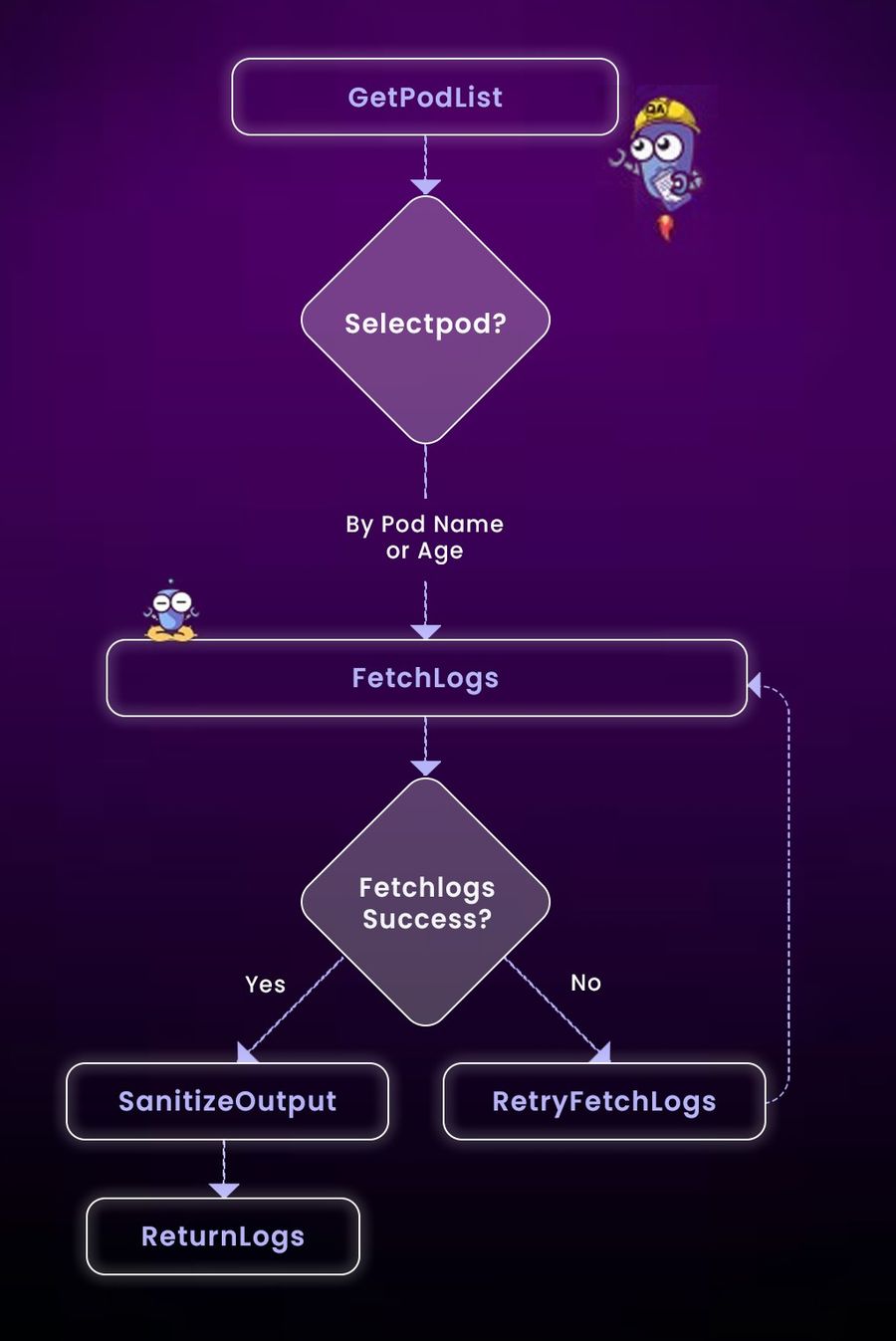

Using LangGraph

To do the same in LangGraph, you'd define a graph with three nodes:

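    from langgraph.graph import StateGraph, END

    # PodLogState (a TypedDict state schema) and the three node classes
    # are placeholders you implement yourself.
    graph = StateGraph(PodLogState)
    graph.add_node("get_pods", GetPodsTool())
    graph.add_node("select_pod", SelectPod())
    graph.add_node("fetch_logs", FetchLogsTool())
    graph.set_entry_point("get_pods")
    graph.add_edge("get_pods", "select_pod")
    graph.add_edge("select_pod", "fetch_logs")
    graph.add_edge("fetch_logs", END)
    app = graph.compile()

Each node is a Python callable that wraps shell commands or API calls. This offers flexibility but requires full implementation.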
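To give a sense of what "full implementation" means for a single node, here is a rough sketch of a log-fetching step written as a plain Python function. The `fetch_logs` and `kubectl` function names, the `Runner` type, and the `pod` and `namespace` state keys are assumptions for this example, not part of LangGraph.

```python
import subprocess
from typing import Callable, Dict, List

Runner = Callable[[List[str]], str]


def kubectl(args: List[str]) -> str:
    """Default runner: shells out to kubectl and returns its stdout."""
    result = subprocess.run(
        ["kubectl", *args], capture_output=True, text=True, check=True
    )
    return result.stdout


def fetch_logs(state: Dict[str, str], run: Runner = kubectl) -> Dict[str, str]:
    """Node: read the chosen pod from state and add its logs to the state."""
    args = ["logs", state["pod"], "-n", state["namespace"]]
    return {**state, "logs": run(args)}
```

Passing the runner as a parameter keeps the node testable: in unit tests you can substitute a fake that returns canned output instead of calling a real cluster.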

Why Kubiya Wins When You Just Want Things to Work

LangGraph gives you flexibility, but at the cost of time and complexity. Kubiya, on the other hand, is built for teams who want immediate utility without maintaining custom agent infrastructure.

Here’s where Kubiya pulls ahead:

1. Zero Orchestration Logic to Maintain

Kubiya handles workflows declaratively. You don’t need to design agent graphs or manage transitions. Define tasks in YAML, connect integrations, and you're live.

2. Built-In DevOps Knowledge

Kubiya understands DevOps patterns out of the box. Whether it’s fetching logs, rolling back deployments, or rotating secrets, you don’t need to teach it what those operations mean. LangGraph requires you to encode all logic manually.

3. Security and Approvals Are Not Afterthoughts

RBAC, scoped access, multi-step approvals, and audit logs: Kubiya ships these as first-class features. With LangGraph, you’d need to build and maintain them as part of your own agent architecture.

4. Chat-Native UX from Day One

Kubiya is designed for Slack and Teams. It handles back-and-forth conversations naturally and prompts users only when needed. LangGraph outputs need to be embedded into chat layers manually.

5. Time to Value is Measured in Hours, Not Sprints

You can set up Kubiya, define your first task, and ship it to your team in a day. LangGraph requires you to build tooling, design graphs, implement custom tools, and validate flows end-to-end before anything goes live.

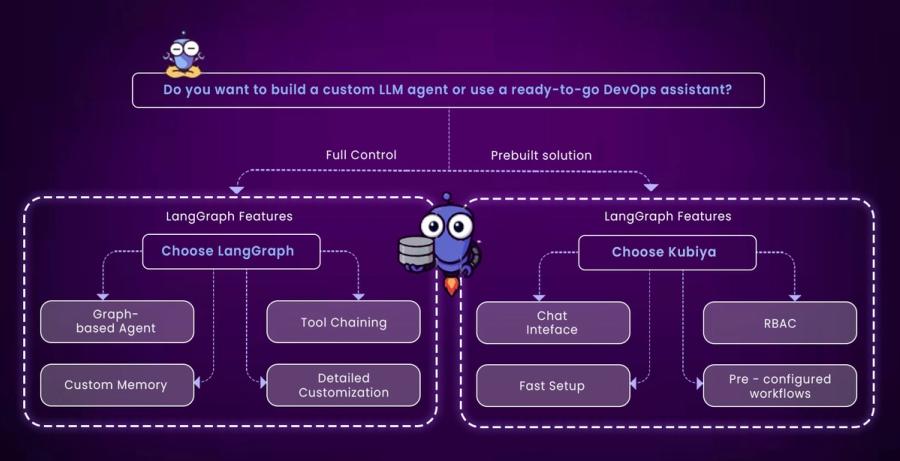

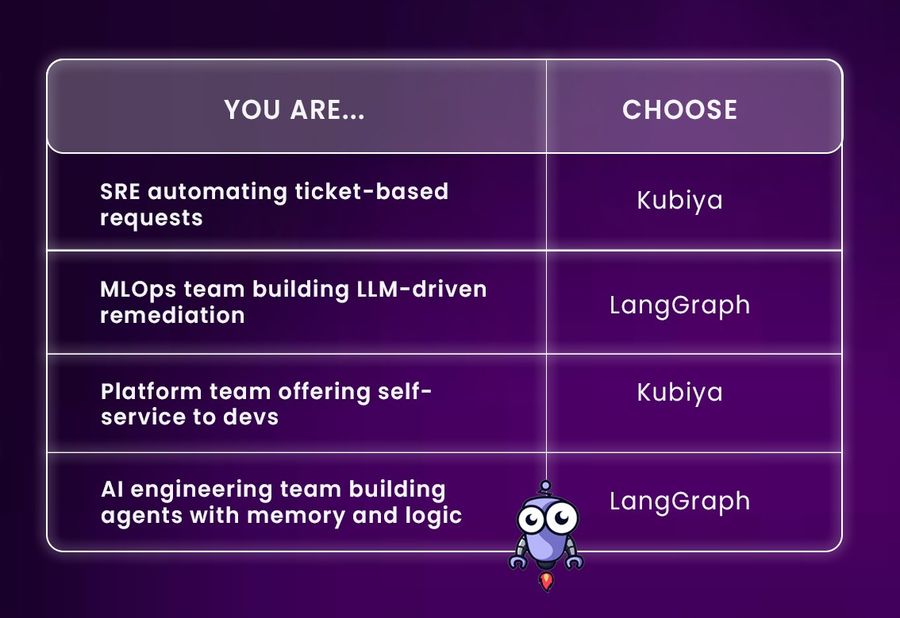

Decision Framework: Which One Should You Pick?

If you want prebuilt workflows with minimal coding, Kubiya gets you there faster. If you need full control and are already using LangChain, LangGraph offers power at the cost of effort.

Conclusion

Kubiya and LangGraph address the same need from different angles. Kubiya is for teams who want DevOps automation via chat interfaces without building a system from scratch. LangGraph is a low-level framework that gives you everything you need to build custom, stateful LLM agents with complex workflows.

Pick Kubiya if you want to expose existing infrastructure tasks in a conversational format. Choose LangGraph if you want to build agent behavior from the ground up using code.

FAQs

Q1. Can Kubiya work with our internal tools?

Yes, it supports custom integrations via REST APIs and scripts.

Q2. Is LangGraph limited to LangChain tools?

No. LangGraph comes from the LangChain team and works well with LangChain components, but nodes are ordinary Python callables. You can use any tool that LangChain supports or wrap your own.

Q3. Does Kubiya support approvals?

Yes. You can configure who can approve what, and Kubiya tracks it all with logs.

Q4. What are other alternatives in this space?

For orchestration tools, look at Guardrails, CrewAI, and AutoGen. For DevOps chatbots, consider Humanitec, Cortex, and Port.

About the author

Amit Eyal Govrin

While overseeing strategic DevOps partnerships at AWS, Amit repeatedly encountered industry-leading DevOps companies struggling with the same pain point: the self-service developer platforms they had built were only as effective as their end-user experience. In other words, self-service is not a given.