Model Context Protocol (MCP): Architecture, Components & Workflow

Amit Eyal Govrin

TL;DR

- The MCP architecture follows a modular client–server design that cleanly separates the Host Application, MCP Client, and MCP Server, ensuring predictable and scalable integrations.

- It enables standardized tool discovery and execution, allowing AI agents to interact with external systems through schemas instead of custom integrations.

- MCP supports stateful, context-rich communication, giving LLMs access to files, logs, schemas, and environment context across long workflows.

- The architecture provides flexible transport options, including local stdin/stdout and remote HTTP with streaming, making it suitable for both lightweight local setups and distributed enterprise systems.

- Enterprises adopt MCP architecture for its security isolation, interoperability, and ease of extensibility, allowing teams to add new tools without modifying the core AI application.

The Model Context Protocol (MCP) is built around a clear architectural structure that simplifies how modern AI agents interact with tools and data at scale. Instead of relying on custom, ad-hoc integrations, MCP separates responsibilities across three layers: the Host Application handles user input, the MCP Client manages available capabilities, and MCP Servers expose tools and resources through a standardized interface. This modular approach allows developers to add or update tools without reworking their entire system. By unifying how models request context and invoke external actions, MCP helps teams build AI systems that stay reliable and maintainable as they grow in complexity. Agents can discover tools, access context, and perform multi-step tasks without extra integration code, making it easier for organizations to implement secure, long-term AI workflows.

In this article, we’ll break down how MCP achieves this by explaining its architecture, core primitives, communication flow, and practical examples of how agents interact with tools in real-world development environments.

What is MCP and Why It Was Introduced

The Model Context Protocol (MCP) is an open standard designed to solve one of the biggest problems in the AI ecosystem: the lack of a unified, secure, and consistent method as AI systems become more capable, they need to interact with files, logs, APIs, databases, and multi-step workflows. Before MCP, every team had to connect these pieces manually by writing their own API wrappers, authentication logic, error handling, and data-parsing glue. This made integrations slow to build and expensive to maintain. MCP fixes this by giving AI agents one consistent way to discover and use tools, no matter how those tools are implemented internally.In practice, teams use MCP to let internal AI assistants inspect system logs, run database queries, read documentation, or perform routine operational tasks like restarting a service, checking deployment status, or fetching build logs — without rebuilding custom connectors each time. Support teams benefit in similar ways: they can fetch customer records or create and update tickets through standard MCP tools instead of managing separate scripts. These kinds of everyday workflows show how MCP helps smaller engineering groups and startups build dependable AI-driven systems without the overhead of maintaining one-off integrations.

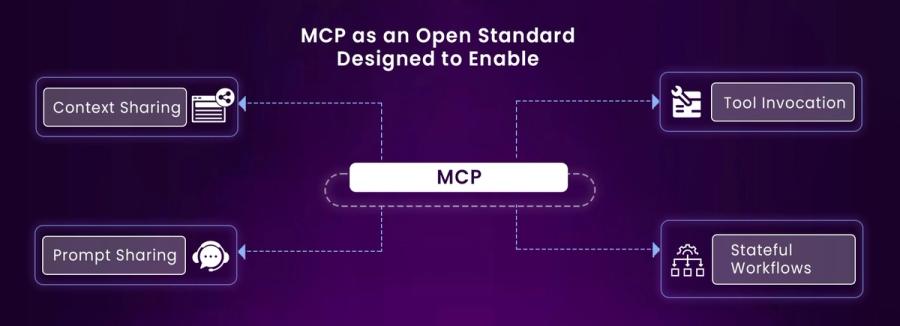

MCP as an Open Standard Designed to Enable

Context Sharing (files, logs, schemas, data)

MCP lets host applications pass structured context such as files, logs, datasets, configurations, and schemas directly to the agent instead of forcing developers to manually stitch this information into prompts. In practical terms, the agent can ask for the latest log file, a specific database record, or a configuration value whenever it needs it, and the host returns that data in a clean, structured format. This removes the usual guesswork and avoids situations where the model is working with stale, incomplete, or copy-pasted context. The agent always operates on up-to-date information coming straight from the source system.

Tool Invocation (APIs, databases, workflows)

A big advantage of MCP is that it gives AI agents a consistent way to call external tools such as APIs, databases, or file-system utilities. Before MCP, developers had to write a separate integration for each service meaning custom API wrappers, custom authentication handling, custom response parsing, and custom error logic for every single endpoint or database they wanted the agent to access. With MCP, those actions are exposed once through an MCP server, and any MCP-compatible agent can use them immediately.This makes common tasks checking system status, calling an internal API, reading a file, querying a database, or triggering an automation workflow , much easier to automate. For teams that already rely on DevOps scripts or internal dashboards, it also means the AI can run the same operations reliably without maintaining additional connectors or extra code.

.Server.py

from fastapi import FastAPI

from fastapi.responses import JSONResponse

app = FastAPI()

@app.get("/get_user")

async def get_user():

return JSONResponse({"user": "chandrakant", "role": "admin"})

This FastAPI snippet exposes a /get_user endpoint that returns structured JSON data. In an MCP setup, this endpoint becomes a tool the agent can call to retrieve user information on demand.

Client.py

import requests

res = requests.get("http://localhost:8000/get_user")

print("Tool Response:", res.json())

Prompt Sharing (reusable structured instructions)

MCP also standardizes how prompts are stored and shared. Instead of scattering prompts across files or embedding them inside code, teams can define prompts as reusable resources that AI agents can request whenever they need them. This keeps instructions consistent across different environments and prevents issues where one prompt changes in one place but not another. For example, a team might store a “Bug Summary Prompt,” a “Customer Email Template,” or a “Security Checklist Prompt” in a central location—MCP makes it easy for any agent to load and reuse them.

Stateful Interactions Across Long Workflows

Many AI tasks don’t finish in a single step. Real workflows i.e like debugging code, processing documents, running an analysis, or updating records ,require the agent to remember information from earlier steps. MCP supports this by letting tools and hosts pass context between steps, such as previous outputs, intermediate results, or session data. This keeps long workflows stable and prevents the agent from losing track halfway through. For example, in a multi-step onboarding flow, the agent can hold onto earlier API responses or user inputs and continue from the exact point it left off instead of restarting the process.

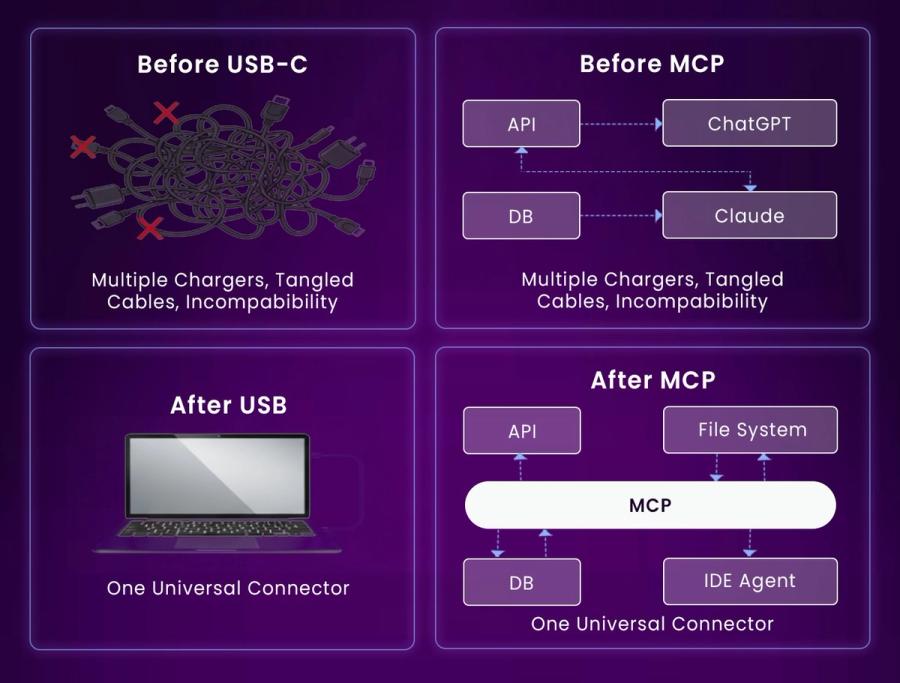

Why MCP Is Compared to USB-C for AI Integrations

MCP is often described as the USB-C of AI because it brings the same type of standardization to AI integrations that USB-C brought to hardware. Before USB-C, devices used different cables, different shapes, different voltages, and different data protocols. Connecting anything required the right cable and often an adapter. Once USB-C appeared, everything became simpler — one port, one connector, one universal standard.MCP works the same way for AI systems. Instead of every app needing its own custom connector for each tool, MCP provides one protocol that any tool can implement and any AI model can understand. Developers don't need to reinvent integration logic or maintain multiple versions they just expose a tool through MCP, and it “just works” with any MCP-compatible agent.

The Fragmentation Problem Before MCP

Before MCP, developers had to deal with a messy and inconsistent integration landscape. Every AI model, every tool, and every environment needed its own connection logic. This slowed down development and made systems harder to maintain.

Each AI App Needed Custom Connectors for Each Tool

If a team was using tools like a SQL query tool, a file-system reader, a Git helper, a search API, and a logging service, and they had 4 different AI apps, they ended up building 20 separate connections because each app needed its own code, its own authentication setup, and its own way of handling errors for every tool.

No Standard for Stateful Tool Usage

Every tool had its own way of handling long-running tasks or remembering previous steps. Some used:

- session tokens

- temporary files

- custom state IDs

None of them worked the same way, and LLMs often lost track of context mid-workflow. Developers had to rebuild state handling in each integration, which made long workflows fragile.

No Unified Way to Send Context to LLMs

Before MCP, developers had to manually pass things like file contents, API responses, logs, schemas, and system state to the model, and because every tool returned data in its own format one using XML, another CSV, another nested JSON they constantly had to write glue code just to make the model understand and work with the information.

Inconsistent Security & Authentication Patterns

Each tool used different auth schemes:

- OAuth

- API keys

- session cookies

- internal tokens

- custom headers

There was no shared standard, so every integration needed separate security handling. For enterprises, this created risk, audit complexity, and a lot of duplicated work.

MCP Introduced as a Fix for These Pain Points

MCP solves these issues by offering a single, predictable protocol for:

- discovering tools

- invoking them

- exchanging context

- handling state

- validating permissions

- structuring responses

With MCP, developers expose tools once through an MCP server, and any AI agent can use them. This reduces engineering overhead, improves reliability, and makes it far easier to build scalable multi-agent systems.

Why Modern Platform are adopting MCP

Modern AI platforms i.e meaning today’s widely used AI systems such as ChatGPT, Claude, Gemini, VS Code AI extensions, enterprise agent frameworks, workflow automation tools, and internal LLM platforms used by companies — are quickly moving toward MCP because custom integrations and one-off connectors no longer scale. These platforms need a shared protocol instead of separate scripts to connect models with tools, data sources, and system resources. MCP provides this by offering a stable and predictable way for agents to work: a single, consistent method for discovering tools, invoking them with structured inputs, and maintaining context across multi-step workflows.

Fragmentation in AI Integrations

Before MCP, each platform like VS code ,Claude etc, created its own way of connecting AI models to external tools. One system relied on custom API wrappers, another watched files for changes, and another depended on local scripts. These approaches all worked differently, making it nearly impossible to reuse integrations across products or teams. MCP eliminates this inconsistency by introducing a single, standardized interface that tools can implement once and AI agents can use anywhere.

Hard-to-Maintain Custom Connectors

Custom integrations often became a maintenance burden as APIs evolved, authentication methods changed, and schemas were updated. Teams ended up spending large amounts of time fixing broken connectors or rewriting them entirely when internal endpoints were deprecated. By providing stable schemas and a JSON-RPC–based communication model, MCP reduces this overhead and ensures tool interfaces remain reliable even as systems grow and change.

Limited Interoperability Across AI Tools

A major limitation before MCP was that a tool integration written for one system rarely worked anywhere else. For example, if a team built a small Python script to query their internal SQL database for an internal chatbot, that same script couldn’t be reused in their VS Code coding assistant or their customer-support bot. Each platform expected different request formats, different authentication methods, and different response structures, so teams ended up rewriting the same capability multiple times.MCP fixes this by standardizing how a tool defines its inputs, outputs, and capabilities. Once a tool is exposed through MCP for example, a database query tool or a log-fetching tool any MCP-compatible agent can use it immediately, whether it’s running inside a desktop assistant, an IDE extension, or a custom automation bot. This means teams can build a tool once and reuse it across all their AI applications instead of creating separate integrations for each environment.

Difficulty Maintaining Context Across Steps

AI agents often need to carry out workflows that consist of several stages: for example, they might validate input, fetch relevant data, apply a tool to process that data, then write results back into a system, and finally notify a user. Each of those stages needs context from the previous steps files, logs, session variables, or tool outputs. Before MCP, developers had to manually pass this intermediate state between each stage, which made workflows brittle and hard to scale.

No Unified Semantics for Tool Calling

Before MCP, every tool behaved differently depending on who built it and which AI system was trying to use it. One tool might return JSON, another might send back plain text, and another might respond with XML. Some tools expected query parameters, others needed a POST body, and each one had its own style of error messages. Because none of this was standardized, agents had to be manually adapted to each tool’s quirks, and there was no reliable way for an agent to “discover” what a tool could do.

MCP fixes this by enforcing a single, shared contract for tool calls. Every tool communicates through JSON-RPC, defines its inputs and outputs with clear schemas, supports capability negotiation, and reports errors in a predictable structure. With these rules in place, a tool behaves the same way no matter which agent is calling it, making tool usage consistent, discoverable, and far easier to maintain.

No Dynamic Discovery of Capabilities

AI models previously had no built-in way to understand what tools were available or what arguments they required. Developers had to hardcode tool lists, parameters, and workflows into the application logic. MCP resolves this with dynamic capability discovery, allowing agents to request a list of tools, schemas, arguments, resources, and prompts from the MCP server at runtime. This reduces manual setup and enables more autonomous agents

code.py

import requests

tools = requests.get("http://localhost:8000/tools").json()

print("Available Tools:", tools)

output.py

Available Tools: ['search_db', 'get_logs', 'run_analysis']

Demand for Multi-Agent Workflows

Companies are moving from simple assistants to collaborative multi-agent systems that must share context, call each other’s tools, and maintain coordinated workflow state. Without a shared protocol, this becomes unreliable and inconsistent. MCP creates the common foundation these agents need, ensuring all tools, capabilities, and context behave the same way across the entire ecosystem.

A Shift Toward Standards as AI Scales

As AI becomes deeply embedded in enterprise systems, organizations need predictable behavior and long-term maintainability rather than one-off integrations. Similar to how HTTP standardized web communication, MCP represents a shift toward standardized agent–tool interaction. It brings predictable behavior, easier debugging, lower maintenance costs, strong security alignment, and reliable interoperability across teams. These advantages make MCP a natural choice as agentic AI continues to scale.

Architecture Overview: Host, Client, and Server

The architecture of MCP is intentionally simple: everything revolves around the Host, the Client, and the Server. These three pieces work together to let an LLM access tools, request context, and run workflows without the developer needing to wire everything manually. Understanding this separation makes it easier to build reliable integrations and debug issues, especially when multiple tools or agents are involved.

MCP Host

The MCP Host is the main AI application i.e, Claude Desktop, a VS Code extension, or an agent runtime inside a platform like Adopt AI. It is responsible for managing the model’s context window, deciding when to call tools, sending user messages to the model, and routing tool responses back to the LLM.In simpler terms, the Host is the "conversation controller." It decides what the model sees, what tools it can access, and how tool outputs should flow back into the model. Developers usually don't write Host logic themselves unless they’re building a custom agent platform ,but understanding how Hosts behave helps when designing tools that need to return structured results.

MCP Client

The Client is created by the Host and sits between the Host and each MCP Server. You can think of it as the connection manager. There is typically one MCP Client per MCP Server, and its job is much smaller but extremely important: managing the session, negotiating capabilities, and routing messages back and forth.The Client abstracts transport details, meaning the Host doesn’t need to care whether a Server runs via STDIO, HTTP, WebSockets, or anything else. This abstraction is a major reason MCP is flexible like you can run a tool on your laptop or on an enterprise cloud, and the Host still interacts with it the same way.

example.py

def call_tool_via_client(server_url, payload):

response = requests.post(f"{server_url}/invoke", json=payload)

return response.json()

result = call_tool_via_client("http://localhost:9000", {"action": "ping"})

print(result)

MCP Server

The Server is where the real work happens. It exposes tools, resources, and prompts for the AI agent to use. A server might provide actions like “get user logs,” “query a database,” “summarize a document,” or “run a workflow.”Servers can run locally (via STDIO) or remotely (via HTTP/SSE). Local servers are great for development environments like VS Code, while remote servers are used in production setups—deployed on cloud services, internal dev tools, or enterprise systems.Because MCP servers are language-agnostic, developers can build them in Python, Node, Go, or any language they prefer. For example, a simple Python-based MCP-like tool might look like-

Example.py

from fastapi import FastAPI

app = FastAPI()

@app.post("/tools/ping")

def ping_tool():

return {"message": "pong"}Putting It All Together (Why This Architecture Works)

Once you understand the Host–Client–Server relationship, MCP feels very natural.

- The Host manages the conversation and model context.

- The Client manages the connection to a tool provider.

- The Server exposes the tool itself.

This separation keeps each component clean and easy to maintain. Developers can update tools without modifying the Host, swap servers without rewriting clients, and add new capabilities simply by registering a new server. It’s the same reason modern frontend libraries moved away from frameworks tightly tied to testing tools like Enzyme for cleaning separation leads to more flexibility and less breakage.

MCP Data Layer vs Transport Layer

One of the cleanest parts of MCP’s design is how it separates what is communicated from how it travels. This is very similar to how HTTP separates its request format (method, headers, body) from the underlying network. MCP follows the same principle: the Data Layer defines the meaning and structure of messages, while the Transport Layer defines how those messages move between the Host, Client, and Server.This separation keeps the protocol flexible and future-proof i.e you can switch transports without changing how your tools behave.

MCP Data Layer

The Data Layer defines the content and semantics of communication. It describes what messages mean, how tools are invoked, and how servers negotiate capabilities. Developers interact with the data layer concepts far more often than transport, because this is where all the actual functionality resides.

The data layer includes:

- JSON-RPC 2.0 message structure MCP uses well-defined request/response formats (method, params, id) so tools behave consistently.

- Session lifecycle Connections follow a predictable sequence: initialize → capability negotiation → ready.

- Core primitives Servers expose tools, resources, and prompts, and clients know how to request them.

- Capabilities Server and client describe what they support (streaming, prompts, resources, etc.) so they can adjust behavior dynamically.

- Notifications Servers can send events (logs, updates, outputs) without requiring a request.

MCP Transport Layer

The Transport Layer defines how messages are physically sent. It doesn’t change the meaning of the messages — it only decides the pathway.

MCP supports two main transports:

- STDIO Used for local tools. Extremely fast because messages stay inside the machine. Ideal for IDE extensions, local scripts, or developer tools.

- HTTP + SSE Used for remote tools that require authentication and network communication. Cloud providers, enterprise backends, and remote MCP servers typically use this.

MCP Primitives: The Core Building Blocks

MCP is built around a small set of primitives that describe everything an AI agent can do, read, or request. These primitives are intentionally simple but powerful i.e they give developers a predictable structure for exposing capabilities without worrying about the transport details or UI framework. If you understand these primitives, you understand the heart of MCP.

Tools

Tools are executable actions exposed by an MCP server. They behave like small, well-defined functions that an AI agent can call: making an API request, reading a file, running a database query, or triggering a workflow. What makes MCP tools reliable is that each one comes with a clear input schema and output schema, so the agent always knows what parameters it should send and what structure it can expect in return.

Resources

Resources represent context objects that an agent can read or fetch. Unlike tools, resources are not actions—they are data. This includes files, logs, records, database schemas, configuration documents, or even structured metadata the model may need during a workflow.

Developers often expose resources to:

- let the model read a file without embedding it in the prompt,

- let the model inspect logs before debugging,

- let an agent pull a database schema so it knows what queries are valid.

Prompts

Prompts are reusable instructions or templates stored on the server. Instead of embedding a long prompt in every request, agents can fetch a named prompt like "bug_summary_prompt" or "security_review_template" and fill in the variables. This keeps instructions consistent across workflows and easier to update centrally—very useful for enterprise environments.

Client-Side Primitives

Some primitives don’t live on the server—they are executed by the client or host. These exist because not everything should be a tool call.

Sampling

Sampling means asking the LLM to complete a prompt. For example, the host might generate a structured question, send it to the LLM, and get a completion. This isn’t a tool it’s pure model inference.

Elicitation

Elicitation is when the host asks the user for input based on what the agent requested. Example, If a tool needs a missing parameter, the client can ask the user: “Which project ID should I query?”

Logging

Logging lets the host output debug or status messages during workflows.

Developers often use this when chaining multiple tools together so they can track what the model is doing.

example.py

def log(message):

print(f"[MCP CLIENT] {message}")

log("Starting workflow…")MCP Architecture in Action: A Practical Example

One of the best ways to understand MCP is to walk through a simple workflow from start to finish. If you’ve ever compared Enzyme’s wrapper.find() with React Testing Library’s screen.getByText(), you know how a practical example reveals the difference in architecture. MCP benefits from the same kind of demonstration seeing how a Host, Client, and Server cooperate makes the protocol feel much more intuitive.

Below is a small, realistic sequence that shows how an agent interacts with an MCP server: initializing, discovering tools, calling one of them, receiving structured output, and even handling real-time updates. This mirrors how MCP runs inside Claude Desktop, VS Code agents, and enterprise automation platforms.

Initialize the Connection

When an MCP Client connects to a Server, they start with a handshake.

The client tells the server what it supports (streaming, prompts, resources), and the server

List Available Tools

Once the session is ready, the Host asks ,this is similar to running ,

example.py

screen.debug()

in RTL to see what elements exist.

Conceptual MCP-style request

{

"jsonrpc": "2.0",

"id": 1,

"method": "tools/list"

}Now the agent knows exactly what actions it can perform by seeing the output of the above code.

Call a Tool with Arguments

Once a tool is discovered, the agent invokes it by name.

Here’s a simple example using a fake sum_numbers tool.

Call a Tool with Arguments

Once the agent knows which tools are available, the next step is actually calling one. In MCP, tools behave like small functions exposed by the server. The agent simply calls the tool by name and passes the arguments the tool requires. This is similar to how, in React Testing Library, you interact with the app using real user-accessible actions instead of poking internals like Enzyme did.

Client sending a request to the tool

This represents what the MCP Host would send internally

{

"jsonrpc": "2.0",

"id": 2,

"method": "tools/call",

"params": {

"name": "sum_numbers",

"arguments": { "a": 5, "b": 7 }

}

}Server-side example

A real MCP server would use JSON-RPC .

example.py

from fastapi import FastAPI

from pydantic import BaseModel

app = FastAPI()

class SumInput(BaseModel):

a: int

b: int

@app.post("/tools/sum_numbers")

def sum_numbers(payload: SumInput):

return {"result": payload.a + payload.b}

This mirrors how MCP tools work:

- structured inputs

- predictable outputs

- clearly defined behavior

In a real-world example, this tool could easily be something like calculate_invoice_total, check_service_health, or fetch_user_balance—common actions engineering teams often automate.

Receive Structured Content

One of MCP’s biggest advantages is that the response is always clean, structured JSON. This means the LLM or client can reliably parse the result without guessing.

Response.json

{

"jsonrpc": "2.0",

"id": 2,

"result": { "result": 12 }

}0 scraping text, no brittle parsing logic. For developers used to Enzyme vs RTL comparisons — this is the difference between scraping DOM nodes vs. getting the exact element, typed and predictable.

In a real-world workflow, this could be:

- CPU usage from a monitoring service

- formatted logs from a DevOps tool

- a filtered list of database records

All structured and ready to use.

Handle Notifications

If the MCP server adds or removes a tool while running, it notifies the client. This is useful in real environments where capabilities change — for example, a CI/CD tool might register new deployment actions after a service update.

The client updates its list automatically, so the agent always works with the latest functionality.It’s similar to how frameworks handle UI updates without requiring the developer to manually re-query everything.

Migration Strategy: Moving from Custom Integrations to MCP

Step 1: Set up an MCP Server

The first step in adopting MCP is deciding how your server will communicate. MCP supports two transports, and each fits a different use case.

If you're building something that runs on a user’s machine—like a VS Code extension, local developer tool, or CLI assistant STDIO is usually the best option. It’s fast, secure, and doesn’t require opening network ports. Most local agent tools use STDIO because it keeps everything self-contained.For anything running on an internal network, in the cloud, or shared across multiple services, HTTP is more practical. It works well for remote services, enterprise dashboards, CI/CD systems, or backend APIs that want to expose MCP tools to multiple agents. HTTP also lets you layer authentication, rate limits, and monitoring more easily.MCP servers can be written using official SDKs in JavaScript, Python, Rust, and more. The SDKs handle the protocol details so you can focus on exposing meaningful tools, resources, or prompts instead of managing JSON-RPC plumbing.

Step 2: Configure MCP Clients

Once the server is running, the next step is setting up an MCP client to this is the part of the host application that actually communicates with the server. The client connects to the server, tells it which features it supports (like tools, resources, prompts, or streaming), and receives the server’s capabilities in return. This negotiation makes sure both sides are aligned before any tool calls happen, so the agent doesn’t try to use something the server can’t provide.

In this snippet, the client connects to the MCP server, the capability negotiation happens automatically, and once the connection is established the client can see which tools and resources the server exposes. This is the basic setup every host application goes through before the agent starts using any tools.

Step 3: Shift Tool Integrations into Standardized Primitives

The next step is to move your existing integrations into MCP’s structured primitives, and this is usually where developers see the biggest gain. Most backend systems already expose functionality through REST, GraphQL, RPC, or CLI commands. Under MCP, these operations become tools like small, well-defined actions with clear inputs and outputs. Instead of maintaining custom wrappers or scattered scripts, you expose these actions once and any MCP-compatible agent can use them consistently.

example.py

@app.post("/tools/get_weather")

def get_weather(payload):

city = payload["city"]

return {"temperature": 27, "condition": "Clear"}For DevOps workflows like the ones Kubiya.ai automates the things such as logs, config files, deployment results, and environment details can be exposed as MCP resources so the agent can fetch them directly when needed.

example.py

@app.get("/resources/deploy_log")

def deploy_log():

return open("/var/logs/deploy.log").read()Similarly, a Kubiya-type operation like restarting a service can be exposed as a simple MCP tool:

example.py

@app.post("/tools/restart_service")

def restart_service(payload):

return {"status": f"{payload['service']} restarted"}These examples show how common tasks like calling an API, reading a log file, or restarting a service ,can be wrapped as MCP tools or resources. Instead of rebuilding your systems, you just expose what already exists in a consistent format that any MCP agent can use.

Step 4: Handle Stateful Context

Many real workflows take more than one step: validating input, running checks, applying changes, then updating a system. MCP supports this by letting servers maintain session-level state so the agent can work across multiple steps without losing context.

For example:

- an agent might validate input in one call

- run a database query in the second

- generate a report in the third

Because the session keeps state, the agent doesn’t need to resend everything each time. This is valuable for long-running tasks like log analysis, deployment workflows, or data processing jobs. It makes agent interactions feel more like working with an API client than stateless text prompts.

Step 5: Add Security + Permissions

Once tools and resources are exposed, security becomes essential. MCP plays well with existing security models, so you can apply the same patterns your infrastructure already uses.For cloud-based or internal services, OAuth is common, allowing agents to authenticate with scoped permissions. For teams exposing many tools, namespacing helps isolate capabilities so one agent can’t accidentally access another team’s functionality. Local tools often use sandboxing, ensuring the agent only interacts with approved files or commands.Rate limits can also be applied at the MCP layer, preventing runaway agents from overloading backend systems.In practice, MCP lets teams scale safely: tools are exposed in a controlled way, agents follow permission rules, and each environment enforces its own security model.

Use Cases & Examples

Below are real, practical examples showing how teams use MCP to power modern AI workflows. These scenarios are modeled the same way a React guide walks through “Migrating a Form Component” or converting a “Redux-connected Component”—simple, real, and grounded in what developers actually build.

Example 1: AI Code Editor

An AI-powered code editor benefits heavily from MCP because it can plug into multiple development-related servers without custom glue code. A filesystem MCP server lets the agent read and write files just like an IDE. A Git MCP server allows the agent to commit, diff, or inspect branches without shell scripts. And an LSP MCP server gives the agent access to code intelligence,definitions, diagnostics, symbol search and go directly through a structured interface. Together, these servers make it possible for an AI to refactor code, fix errors, or navigate large codebases using the same primitives the editor already relies on.

Example 2: Customer Support Assistant

Customer support teams often need quick access to user context, order data, and ticket history. With MCP, these data sources become resources that the assistant can fetch on demand,no custom CRM integration required. Actions such as creating a new ticket, escalating an issue, or updating a status become tools, giving the agent a reliable way to perform work inside the company’s support system. Instead of stitching together scripts or API wrappers, everything is standardized and available through MCP.

A support agent asks the AI, “Find the user’s last three orders and create a refund ticket if the latest one failed.” Through MCP:

- Order history is fetched as a resource

- Refund ticket creation is executed as a tool

- Issue logs are pulled for context

The agent completes the entire workflow without custom integrations or manual lookups.

Example 3: Data Analysis Agent

A data analysis agent can use MCP to combine structured tools with rich data resources, such as CSV files, database tables, log snapshots, cached dataframes, or preprocessed feature sets. A SQL MCP tool lets the agent run parameterized queries safely without embedding raw SQL in prompts. A graph-generation tool can turn query results into charts using a consistent input schema. Meanwhile, cached dataframes exposed as MCP resources allow the agent to pull subsets of data or summary statistics without hitting the database again. This mirrors how analysts already work with SQL, plotting libraries, and dataset files, but MCP wraps these pieces in a unified protocol the agent can use cleanly and reliably.

Example: A data analyst asks, “Show me monthly revenue, calculate the growth rate, and generate a bar chart.” Using MCP, the AI:

- Calls the SQL tool to fetch revenue by month

- Loads the dataframe resource to compute growth

- Uses the graph tool to render a visualization

Conclusion

MCP gives AI agents a consistent way to call tools and carry context through multi-step tasks, which is important in engineering work where actions like checking logs, reviewing deployments, or running diagnostics need to behave the same way every time. This aligns well with how Kubiya.ai operates: Kubiya already runs DevOps tasks as deterministic, containerized workflows. Exposing those workflows through MCP would simply make them easier for different agents to discover and use without adding custom integration logic. In practice, MCP becomes the common protocol, and Kubiya supplies the operational actions that agents can rely on safely and predictably.

Frequently Asked Questions ?

What is MCP used for?

MCP is used to let AI agents access tools, data, and system resources through a single, standardized protocol.

Is MCP tied to Claude?

No , Claude implements MCP, but the protocol itself is open and can be used by any model or platform.

Can I run MCP locally?

Yes, MCP servers can run locally using STDIO, making them ideal for dev tools, editors, and local automation.

How does MCP ensure security?

MCP inherits your system’s existing security model using OAuth, permissions, namespacing, and sandboxing depending on the transport.

About the author

Amit Eyal Govrin

Amit oversaw strategic DevOps partnerships at AWS as he repeatedly encountered industry leading DevOps companies struggling with similar pain-points: the Self-Service developer platforms they have created are only as effective as their end user experience. In other words, self-service is not a given.