What Are Multi-Agent Systems in AI? Concepts, Use Cases, and Best Practices for 2025

Amit Eyal Govrin

TL;DR

- Definition: Multi-agent systems (MAS) comprise multiple autonomous AI agents that collaborate to solve tasks that are difficult for a single model. Each agent can use tools (APIs, databases) and memory to achieve its sub-goals.

- Advantages: Compared to a monolithic model, an MAS can specialize agents for subtasks, scale by adding/removing agents, and often offer better interpretability and robustness.

- Applications: Multi-agent AI systems enhance enterprise workflows by assigning specialised agents to tasks such as cloud management (Google Cloud, Azure), supply chain optimisation (IBM), contract analysis (JPMorgan COIN), manufacturing (Siemens), and customer support (Talkdesk).

- Best Practices: Key guidelines include matching the system architecture to the problem, defining clear agent roles, building flexible communication protocols, balancing autonomy vs. central control, and emphasizing safety, monitoring and incremental testing.

- Outlook: In 2025 and beyond, multi-agent AI systems are expected to give enterprises scalable, intelligent, and fault-tolerant solutions, driving automation in support centres, smart infrastructure, and dynamic supply chains across evolving digital ecosystems.

In an enterprise setting, a single-agent system may seem sufficient at first. It operates independently, solves a specific set of problems the enterprise faces, follows structured rules and logic, and requires relatively few computing resources compared to a multi-agent system. However, as complexity grows and more tools are needed to handle multiple interdependent tasks, a do-it-all agent can no longer support the entire workflow effectively.

This is when enterprises start turning to multi-agent AI systems. Unlike a single agent, a multi-agent system divides a complex workflow into smaller, manageable units, with each agent focusing on a specific task or responsibility. In this article, you will explore the core concepts of multi-agent AI systems, their use cases, and best practices for building one in 2025.

What is a Multi-Agent AI System?

A multi-agent AI system does not rely on a single agent to accomplish every task. When an enterprise faces multiple complex tasks, operates in a rapidly evolving environment, or needs to integrate with many tools, it benefits from a system in which multiple AI agents work together, collaborate, and adapt to changing conditions. In such a system, multiple agents operate simultaneously to support large-scale, complex workflows. It is also more fault-tolerant: if one agent lags or fails, the other agents keep working, so one agent's problem does not stall the whole system. (A central orchestrator, if one is used, can still be a single point of failure.)

Example: A prominent example of a multi-agent AI framework is Microsoft's Azure AI Foundry Agent Service, which became generally available (GA) in May 2025. It allows developers to build specialized AI agents and orchestrate them into robust, long-running workflows.

Consider a customer support scenario:

- An “Authentication” agent verifies credentials.

- A “Triage” agent assesses the issue.

- A “Billing” agent retrieves invoice data.

These agents can execute concurrently or sequentially. Crucially, if one agent, such as the “Billing” agent, slows down, the central orchestrator lets the others continue and manages retries and fallback logic so that the entire system does not stall.
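A minimal sketch of that orchestration pattern using Python's `asyncio`. The stub agents, the 0.1-second timeout, the single retry, and the fallback message are all hypothetical; this illustrates the idea, not how Azure AI Foundry Agent Service implements it. For simplicity all three agents run concurrently, although in practice triage might wait for authentication.

```python
import asyncio
from typing import Awaitable, Callable

# An agent is modelled as an async function: request dict in, result dict out.
AgentFn = Callable[[dict], Awaitable[dict]]


async def run_with_retries(name: str, agent: AgentFn, request: dict,
                           timeout_s: float, retries: int, fallback: dict) -> dict:
    """Run one agent with a per-attempt timeout; return a fallback if every attempt fails."""
    for attempt in range(retries + 1):
        try:
            return await asyncio.wait_for(agent(request), timeout=timeout_s)
        except (asyncio.TimeoutError, RuntimeError):  # RuntimeError stands in for agent errors
            if attempt < retries:
                await asyncio.sleep(0.01 * 2 ** attempt)  # exponential backoff before retrying
    return {"agent": name, "status": "degraded", **fallback}


async def orchestrate(request: dict, agents: dict[str, AgentFn]) -> dict[str, dict]:
    """Start every agent concurrently so one slow agent cannot block the others."""
    tasks = {
        name: asyncio.create_task(run_with_retries(
            name, fn, request, timeout_s=0.1, retries=1,
            fallback={"message": f"{name} unavailable; queued for follow-up"}))
        for name, fn in agents.items()
    }
    return {name: await task for name, task in tasks.items()}


# Hypothetical stub agents for the customer-support example.
async def authenticate(request: dict) -> dict:
    return {"agent": "auth", "status": "ok", "verified": request.get("user") == "alice"}


async def triage(request: dict) -> dict:
    return {"agent": "triage", "status": "ok", "category": "billing"}


async def billing(request: dict) -> dict:
    await asyncio.sleep(1.0)  # simulate a slow invoice API
    return {"agent": "billing", "status": "ok", "invoice_total": 42.0}


if __name__ == "__main__":
    agents = {"auth": authenticate, "triage": triage, "billing": billing}
    for name, result in asyncio.run(orchestrate({"user": "alice"}, agents)).items():
        print(name, result["status"])
```

Running the script prints `ok` for the authentication and triage agents and `degraded` for billing, whose simulated one-second delay exceeds the timeout on both attempts; the other two results are not held back by it.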

Another example is Vaiage, a multi-agent AI system for personalised travel planning developed by Jiexi Ge, Binwen Liu, and Jiamin Wang (May 2025). It combines LLM reasoning with external data sources to create adaptive itineraries. Its key agents include:

- Chat Agent – collects user preferences

- Information Agent – queries APIs (Maps, Weather, Rentals)

- Recommendation Agent – filters attractions

- Route Agent – plans routes

- Strategy Agent – optimises time

- Communication Agent – delivers the plan

Agents collaborate using TravelGraph, a shared graph-based memory for real-time updates.
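The internals of TravelGraph are not covered here, so the class below is a hypothetical stand-in rather than its actual design: a small in-memory graph that agents write to, with a version counter and subscriber callbacks so other agents can react to updates. The agent names mirror the roles listed above; node and edge attributes are invented for illustration.

```python
from collections import defaultdict
from typing import Any, Callable


class SharedGraphMemory:
    """Hypothetical shared graph memory (not the actual TravelGraph design)."""

    def __init__(self) -> None:
        self.nodes: dict[str, dict[str, Any]] = {}
        self.edges: dict[str, dict[str, dict[str, Any]]] = defaultdict(dict)
        self.version = 0  # bumped on every write so agents can detect stale reads
        self._subscribers: list[Callable[[str, str], None]] = []

    def subscribe(self, callback: Callable[[str, str], None]) -> None:
        """Register callback(kind, node_id), called after every write."""
        self._subscribers.append(callback)

    def upsert_node(self, node_id: str, writer: str, **attrs: Any) -> None:
        node = self.nodes.setdefault(node_id, {})
        node.update(attrs, _last_writer=writer)  # record which agent wrote last
        self._notify("node", node_id)

    def add_edge(self, src: str, dst: str, writer: str, **attrs: Any) -> None:
        self.edges[src][dst] = {**attrs, "_last_writer": writer}
        self._notify("edge", src)

    def neighbors(self, node_id: str) -> list[str]:
        return list(self.edges.get(node_id, {}))

    def _notify(self, kind: str, node_id: str) -> None:
        self.version += 1
        for callback in self._subscribers:
            callback(kind, node_id)


memory = SharedGraphMemory()
notifications: list[tuple[str, str]] = []
memory.subscribe(lambda kind, node_id: notifications.append((kind, node_id)))
memory.upsert_node("user", writer="chat_agent", budget_eur=1500, interests=["museums"])
memory.upsert_node("louvre", writer="recommendation_agent", kind="attraction")
memory.add_edge("user", "louvre", writer="route_agent", travel_min=25)
memory.upsert_node("louvre", writer="information_agent", forecast="rain")
print(memory.neighbors("user"), memory.version)  # ['louvre'] 4
```

Because every write goes through one store and fires notifications, a route-planning agent can re-plan as soon as the information agent records new weather, without the two agents messaging each other directly.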

Foundational Concepts in Multi-Agent Systems

Building and understanding an MAS requires several core concepts:

Agents and Autonomy

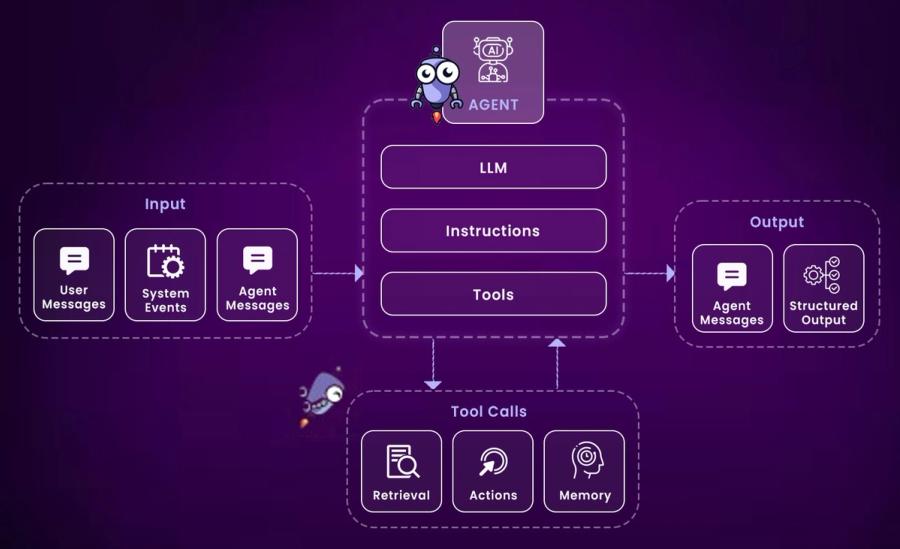

In multi-agent AI systems, each agent operates autonomously, making independent decisions based on its knowledge, tools, and goals. Typically, an agent includes a large language model (LLM) or a task-specific reasoning engine, and can:

- Perceive inputs (user commands, data streams)

- Store and recall memory

- Call external tools or APIs

- Execute actions aligned with both local and shared objectives
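The capabilities listed above map onto a simple perceive, recall, decide, act loop. In this sketch the `decide` function is a hypothetical rule-based stand-in for an LLM call, and the two tools are stubs; a real agent would call a model API and real services.

```python
from dataclasses import dataclass, field
from typing import Callable

# decide(observation, memory, goal) -> (tool_name, tool_argument)
DecideFn = Callable[[str, list[str], str], tuple[str, str]]


@dataclass
class Agent:
    name: str
    goal: str
    tools: dict[str, Callable[[str], str]]
    decide: DecideFn  # stand-in for an LLM or task-specific reasoning engine
    memory: list[str] = field(default_factory=list)

    def step(self, observation: str) -> str:
        self.memory.append(f"observed: {observation}")               # perceive and store
        tool, arg = self.decide(observation, self.memory, self.goal)  # reason about the next action
        result = self.tools[tool](arg)                                # call an external tool or API
        self.memory.append(f"{tool}({arg}) -> {result}")              # remember the outcome
        return result


def rule_based_decide(observation: str, memory: list[str], goal: str) -> tuple[str, str]:
    """Hypothetical rule-based policy: look up invoices, otherwise acknowledge."""
    if "invoice" in observation.lower():
        return "lookup_invoice", observation.split()[-1]
    return "reply", f"Noted. Working on: {goal}"


billing_agent = Agent(
    name="billing",
    goal="resolve billing questions",
    tools={
        "lookup_invoice": lambda invoice_id: f"invoice {invoice_id}: paid",  # stub API
        "reply": lambda text: text,
    },
    decide=rule_based_decide,
)
print(billing_agent.step("Where is invoice INV-7"))  # invoice INV-7: paid
```

Keeping `decide` as a pluggable function means the same loop works whether the reasoning engine is an LLM, a planner, or hand-written rules.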

Environment

The environment refers to the shared space in which agents act and respond. This may include physical systems (like robotics) or virtual infrastructures (e.g. databases, message queues, cloud APIs).

Example: In Autonomous CloudOps, agents interact through a cloud-native control plane. Monitoring agents sense latency spikes, scaling agents provision new instances, and cost-control agents adjust usage. Each action alters the environment state in real time.
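In the sketch below, agents never message each other directly; each reads and writes a shared state object, and the next agent reacts to the changed state. The state fields, the 200 ms latency target, the budget, and the toy load model are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class CloudState:
    """Shared environment state that every agent reads and writes (hypothetical fields)."""
    latency_ms: float
    instances: int
    hourly_cost_per_instance: float
    budget_per_hour: float
    latency_alert: bool = False


def monitoring_agent(env: CloudState, slo_ms: float = 200.0) -> None:
    env.latency_alert = env.latency_ms > slo_ms  # sense, then publish a signal into the environment


def scaling_agent(env: CloudState) -> None:
    if env.latency_alert:  # reacts to the environment, not to another agent's message
        env.instances += 1


def cost_control_agent(env: CloudState) -> None:
    max_instances = int(env.budget_per_hour // env.hourly_cost_per_instance)
    env.instances = min(env.instances, max_instances)  # enforce the budget cap


def simulate_load(env: CloudState, demand: float) -> None:
    env.latency_ms = 50.0 * demand / env.instances  # toy model: latency falls as instances rise


def run(env: CloudState, demand: float, ticks: int) -> None:
    for _ in range(ticks):
        monitoring_agent(env)
        scaling_agent(env)
        cost_control_agent(env)
        simulate_load(env, demand)


env = CloudState(latency_ms=400.0, instances=2, hourly_cost_per_instance=1.0, budget_per_hour=4.0)
run(env, demand=20.0, ticks=5)
print(env.instances, env.latency_ms)  # 4 250.0
```

With these starting values the scaling agent keeps adding instances while latency exceeds the target, but the cost-control agent caps the fleet at four, leaving latency at 250 ms: a small example of agents with competing objectives settling into a compromise neither chose on its own.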

Interactions and Communication

Agents interact by passing messages or signals. Communication can be direct (agent-to-agent messages) or indirect (writing to/reading from a shared environment or blackboard). Designing effective communication protocols is a key challenge: agents need a way to understand each other (using agreed-upon languages or schemas) and to coordinate tasks. For instance, they might use JSON messages over HTTP, publish/subscribe queues, or custom APIs.
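A minimal sketch of both ideas: a JSON message envelope every agent agrees on, and an in-process publish/subscribe bus. The field names, the topic string, and the `MessageBus` class are hypothetical; the `performative` field borrows FIPA-ACL's idea of labelling each message's intent (for example `request` or `inform`). In production the bus would be a real broker and the JSON would travel over the network.

```python
import json
import uuid
from collections import defaultdict
from dataclasses import asdict, dataclass, field
from typing import Callable


@dataclass
class AgentMessage:
    """Agreed-upon envelope every agent understands (hypothetical schema)."""
    sender: str
    topic: str
    performative: str  # intent label in the spirit of FIPA-ACL, e.g. "request" or "inform"
    content: dict
    msg_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(raw: str) -> "AgentMessage":
        return AgentMessage(**json.loads(raw))


class MessageBus:
    """In-process publish/subscribe bus standing in for a real message broker."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[AgentMessage], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[AgentMessage], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, message: AgentMessage) -> int:
        """Deliver to every subscriber of the topic; return how many received it."""
        raw = message.to_json()  # serialize as if the message crossed a network boundary
        handlers = self._subscribers.get(message.topic, [])
        for handler in handlers:
            handler(AgentMessage.from_json(raw))
        return len(handlers)


bus = MessageBus()
inbox: list[AgentMessage] = []
bus.subscribe("billing.requests", inbox.append)  # the billing agent listens on this topic
bus.publish(AgentMessage(sender="triage", topic="billing.requests",
                         performative="request", content={"invoice_id": "INV-7"}))
print(inbox[0].sender, inbox[0].content)  # triage {'invoice_id': 'INV-7'}
```

Publishers only need to know a topic name, not which agents are listening, which makes it easier to add or replace agents later.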

For example, Amazon's fulfillment centers use a multi-agent system in which hundreds of Kiva robots collaborate to streamline inventory handling. The agents include the Kiva robots (which retrieve shelves), a task allocator, and a traffic controller. Robots report real-time inventory and location data and communicate via a central system, yet act autonomously to adapt to delays and congestion or to reprioritize tasks, improving order fulfillment and overall warehouse efficiency.
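Amazon's actual allocation logic is not described here, so the following is a hypothetical greedy allocator, not Amazon's algorithm: tasks are taken in priority order and each goes to the nearest idle robot by Manhattan distance on a grid.

```python
from dataclasses import dataclass

Position = tuple[int, int]


@dataclass
class Robot:
    robot_id: str
    position: Position
    busy: bool = False


def manhattan(a: Position, b: Position) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def allocate(tasks: list[tuple[str, Position]], robots: list[Robot]) -> dict[str, str]:
    """Assign tasks, in priority order, to the nearest idle robot; stop when none are idle."""
    assignments: dict[str, str] = {}
    for task_id, shelf in tasks:
        idle = [r for r in robots if not r.busy]
        if not idle:
            break  # remaining tasks wait for the next allocation round
        nearest = min(idle, key=lambda r: manhattan(r.position, shelf))
        nearest.busy = True
        assignments[task_id] = nearest.robot_id
    return assignments


robots = [Robot("r1", (0, 0)), Robot("r2", (5, 5)), Robot("r3", (9, 0))]
tasks = [("t1", (4, 4)), ("t2", (1, 1)), ("t3", (8, 1)), ("t4", (2, 2))]
print(allocate(tasks, robots))  # {'t1': 'r2', 't2': 'r1', 't3': 'r3'}
```

Greedy assignment is fast but not globally optimal; an optimal matching (for example the Hungarian algorithm) is a common alternative when task batches are small enough.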

Organization

Multi-agent systems can adopt:

- Centralised architectures, with a single orchestrator agent (simpler but less fault-tolerant)

- Decentralised structures, where agents act peer-to-peer, enabling scalability and resilience

- Hybrid setups, combining a central planner with local autonomous agents

Example: In Uber’s dispatch system, a hybrid MAS handles ride allocation. A central planner matches drivers and passengers, while individual agents (driver and rider apps) make local decisions like rerouting or rescheduling based on real-time traffic.

Planning and Learning

Agents in multi-agent AI systems often use planning or learning algorithms to decide actions. A common paradigm is multi-agent reinforcement learning (MARL). In MARL, each agent learns from its experience and may share learned policies or rewards with others.

For example, Kolat et al. (2023) proposed a cooperative MARL approach for adaptive traffic signal control across a network of six intersections. Each intersection is managed by an autonomous agent using deep Q-learning. Agents share a cooperative reward signal to reduce overall congestion. In simulations, their method reduced fuel consumption by 11% and average travel time by 13% over traditional fixed-cycle control.
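The sketch below is a deliberately tiny, stateless simplification, not Kolat et al.'s deep Q-learning setup: two intersection agents run independent tabular Q-learning, but both are updated with the same team reward, which pays out only when both join a coordinated "green wave". The action meanings, the reward value of 10, and the hyperparameters are assumptions.

```python
import random


def train_cooperative_q(episodes: int = 2000, alpha: float = 0.1,
                        epsilon: float = 0.2, seed: int = 0) -> list[list[float]]:
    """Two independent, stateless Q-learners updated with one shared team reward.

    Hypothetical toy: action 1 = "join a coordinated green wave", action 0 = "keep fixed cycle".
    Congestion only drops (reward 10) when both neighbouring intersections join.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # q[agent][action]

    def choose(agent: int) -> int:
        if rng.random() < epsilon:
            return rng.randrange(2)  # explore
        return max(range(2), key=lambda a: q[agent][a])  # exploit; ties go to action 0

    for _ in range(episodes):
        actions = [choose(0), choose(1)]
        team_reward = 10.0 if actions == [1, 1] else 0.0  # the same reward for both agents
        for agent, action in enumerate(actions):
            q[agent][action] += alpha * (team_reward - q[agent][action])
    return q


q_tables = train_cooperative_q()
print([max(range(2), key=lambda a: qa[a]) for qa in q_tables])  # greedy action per agent
```

Once the two agents happen to coordinate during exploration, the shared reward pulls both greedy policies toward action 1, while the value of action 0 stays at zero because it never earns a reward.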

Global Objectives

Multi-agent cooperation is typically guided by a global reward or objective function. Either the system assigns rewards to individual agents based on overall performance, or agents negotiate sub-goals. This encourages behaviors like cooperation and resource sharing. In safety-critical MAS, designers often build in an overseer or supervisory agent (sometimes a human) to ensure global constraints are met.
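A minimal sketch of a supervisory agent that enforces one global constraint, a shared budget, over actions proposed by other agents. The class, the first-come approval order, and the escalation comment are assumptions; a real overseer might instead optimise across proposals or route rejections to a human.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedAction:
    agent: str
    description: str
    cost: float


class Overseer:
    """Supervisory agent that enforces a global budget across all agents (hypothetical)."""

    def __init__(self, global_budget: float) -> None:
        self.remaining = global_budget
        self.rejected: list[ProposedAction] = []

    def review(self, proposals: list[ProposedAction]) -> list[ProposedAction]:
        """Approve proposals in arrival order while they fit the remaining budget."""
        approved = []
        for action in proposals:
            if action.cost <= self.remaining:
                self.remaining -= action.cost
                approved.append(action)
            else:
                self.rejected.append(action)  # candidate for escalation to a human
        return approved


overseer = Overseer(global_budget=100.0)
approved = overseer.review([
    ProposedAction("procurement", "order spare parts", 60.0),
    ProposedAction("logistics", "expedite shipment", 30.0),
    ProposedAction("marketing", "launch promotion", 20.0),
])
print([a.agent for a in approved], overseer.remaining)  # ['procurement', 'logistics'] 10.0
```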

Real-World Applications of Multi-Agent AI

Multi-Agent AI Systems (MAS) are transforming enterprise operations by enabling decentralized, collaborative problem-solving. These systems use multiple AI agents, each specializing in a task, to boost adaptability, efficiency, and resilience.

Here are five real-world enterprise examples:

Autonomous Cloud Operations (e.g., Google Cloud Autopilot, Azure Automanage): Monitoring agents detect anomalies like latency spikes. Scaling agents adjust resources, while cost-control agents manage budgets, all working in sync to keep performance high.

Supply Chain Optimization (e.g., IBM Sterling Supply Chain Solutions): Agents represent suppliers, logistics, and manufacturers. They negotiate, adjust production, and re-route shipments in real-time, reducing delays and improving efficiency.

Financial Trading & Fraud Detection (e.g., FinTech platforms): Trading agents analyze markets and execute trades, while fraud detection agents monitor for suspicious activity, protecting assets and maintaining compliance. A related example is JPMorgan’s COIN (Contract Intelligence) system, in which AI agents parse legal documents to extract key data. This workflow reportedly reduced a task that took 360,000 hours annually to seconds.

Smart Manufacturing (e.g., Siemens Digital Industries): Robotic agents handle tasks like welding or inspection. Planning agents adjust production based on real-time inputs, enabling flexible workflows.

AI-Powered Customer Service (e.g., Talkdesk, Observe.AI): One agent detects user intent, another retrieves data, and a third completes actions like refunds, creating seamless, largely automated support that needs little human input.

Multi-Agent AI: Best Practices

Designing and deploying a robust multi-agent AI system requires careful planning. Here are key best practices, drawn from industry guidance:

Define Clear Agent Roles and Boundaries

Start by clearly defining the role, objective, and observation/action space of each agent. Avoid ambiguity in agent responsibilities to prevent unintended overlaps or conflicts that can destabilize training or execution.

Use Modular, Composable Architectures

Design agents and their interfaces in a modular fashion. Decoupling communication, perception, decision-making, and actuation layers improves maintainability, enables easier debugging, and supports iterative upgrades without disrupting the entire system.

Establish Robust Communication Protocols

Clear and reliable communication is essential in multi-agent systems. Since agents often run in parallel or across different systems, they need fast and structured ways to share information. Use standard formats and protocols such as JSON over HTTP, gRPC, or FIPA-ACL to keep messages consistent and easy to understand.

Example: In InsurifyAI, a supervisor agent hands out tasks through a shared workspace called a blackboard. Specialised agents, such as one that checks policy details and another that tracks claim status, read the task, do the work, and post updates back as structured messages. This setup helps agents work together smoothly and recover quickly from errors.
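InsurifyAI's internals are not described here, so the following is a generic, hypothetical blackboard: a supervisor posts tasks tagged with a skill, specialist agents claim matching open tasks, and failures are written back to the board so the supervisor can reopen them instead of the whole workflow crashing. Task names, payloads, and handlers are invented.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Task:
    task_id: str
    skill: str  # which kind of specialist should handle it
    payload: dict
    status: str = "open"  # open -> claimed -> done | failed
    owner: Optional[str] = None
    result: Optional[dict] = None


class Blackboard:
    """Shared workspace: the supervisor posts tasks, specialists claim and update them."""

    def __init__(self) -> None:
        self.tasks: dict[str, Task] = {}

    def post(self, task: Task) -> None:
        self.tasks[task.task_id] = task

    def claim(self, agent: str, skill: str) -> Optional[Task]:
        for task in self.tasks.values():
            if task.status == "open" and task.skill == skill:
                task.status, task.owner = "claimed", agent
                return task
        return None

    def complete(self, task_id: str, result: dict, ok: bool = True) -> None:
        task = self.tasks[task_id]
        task.status, task.result = ("done" if ok else "failed"), result

    def reopen_failed(self) -> int:
        """Supervisor recovery step: make failed tasks claimable again."""
        failed = [t for t in self.tasks.values() if t.status == "failed"]
        for task in failed:
            task.status, task.owner, task.result = "open", None, None
        return len(failed)


def run_specialist(board: Blackboard, name: str, skill: str,
                   handler: Callable[[dict], dict]) -> int:
    """Process every open task matching `skill`; report failures on the board."""
    handled = 0
    while (task := board.claim(name, skill)) is not None:
        try:
            board.complete(task.task_id, handler(task.payload))
        except Exception as exc:  # a failing task is recorded, not fatal to the agent
            board.complete(task.task_id, {"error": str(exc)}, ok=False)
        handled += 1
    return handled


def check_claim(payload: dict) -> dict:
    if payload.get("claim_id") is None:
        raise ValueError("missing claim id")
    return {"claim_status": "in review"}


board = Blackboard()
board.post(Task("t1", "policy", {"policy_id": "P-1"}))
board.post(Task("t2", "claims", {"claim_id": "C-9"}))
board.post(Task("t3", "claims", {}))
run_specialist(board, "policy_agent", "policy", lambda p: {"coverage": "active"})
run_specialist(board, "claims_agent", "claims", check_claim)
print({t.task_id: t.status for t in board.tasks.values()})  # {'t1': 'done', 't2': 'done', 't3': 'failed'}
```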

Promote Decentralized Decision-Making Where Possible

Centralized control can become a bottleneck or single point of failure in large-scale deployments. Where feasible, distribute decision-making to individual agents using decentralized protocols or consensus algorithms. This enhances resilience and scalability.
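One of the simplest decentralized protocols is average consensus: each agent repeatedly nudges its own estimate toward its neighbours' estimates, and on a connected network every agent converges to the global mean without a coordinator. The sketch assumes synchronous rounds and honest agents; tolerating crashed or malicious agents calls for protocols such as Raft (crash faults) or Byzantine fault-tolerant consensus instead.

```python
def average_consensus(values: list[float], neighbors: dict[int, list[int]],
                      epsilon: float, rounds: int) -> list[float]:
    """Synchronous average consensus using only neighbour-to-neighbour exchanges.

    Each round: x_i <- x_i + epsilon * sum over neighbours j of (x_j - x_i).
    On a connected, undirected graph with 0 < epsilon < 1 / max_degree, every
    agent converges to the mean of the initial values.
    """
    x = list(values)
    for _ in range(rounds):
        # The comprehension reads the previous round's x, so all agents update simultaneously.
        x = [xi + epsilon * sum(x[j] - xi for j in neighbors[i]) for i, xi in enumerate(x)]
    return x


ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}  # each agent sees two neighbours
print(average_consensus([10.0, 20.0, 30.0, 40.0], ring, epsilon=0.25, rounds=50))
```

Four agents in a ring, starting from local estimates of 10, 20, 30, and 40, all settle on 25 even though none of them ever sees the full set of values.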

Leverage Simulation for Iterative Testing

Before deploying to production, validate multi-agent interactions in high-fidelity simulation environments. Simulation provides an opportunity to test coordination protocols, emergent behaviors, and safety constraints at scale. Continuously refine policies based on simulation results before real-world deployment.

Incorporate Human Oversight and Control Hooks

Especially in critical applications (e.g., industrial automation, finance, healthcare), include interfaces for human supervisors to override, pause, or reconfigure agents at runtime. Human-in-the-loop mechanisms are vital for mitigating risk and ensuring compliance.
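A minimal sketch of such hooks: a pause switch, an approval callback consulted for actions above a risk threshold, and an audit log of every decision. The risk scores, threshold, and `approve` callback are hypothetical; in practice approval might go through a ticketing or chat system.

```python
from typing import Callable


class HumanControlHooks:
    """Runtime controls for a human supervisor (hypothetical interface)."""

    def __init__(self, approve: Callable[[str, str], bool], risk_threshold: float) -> None:
        self.approve = approve  # asks a human; returns True to allow the action
        self.risk_threshold = risk_threshold
        self.paused = False
        self.audit_log: list[tuple[str, str, str]] = []

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def gate(self, agent: str, action: str, risk: float) -> bool:
        """Agents call this before acting; returns True if the action may proceed."""
        if self.paused:
            decision = "blocked: paused"
        elif risk >= self.risk_threshold:
            decision = "approved" if self.approve(agent, action) else "rejected"
        else:
            decision = "auto-approved"
        self.audit_log.append((agent, action, decision))  # every decision is auditable
        return decision in ("approved", "auto-approved")


# A reviewer stub that declines every high-risk request.
hooks = HumanControlHooks(approve=lambda agent, action: False, risk_threshold=0.7)
print(hooks.gate("trading", "rebalance portfolio", risk=0.2))      # True
print(hooks.gate("trading", "liquidate all positions", risk=0.9))  # False
hooks.pause()
print(hooks.gate("trading", "rebalance portfolio", risk=0.2))      # False
```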

Design Incentives and Reward Structures Carefully

In reinforcement learning-based multi-agent systems, poorly designed reward functions can lead to unintended or exploitative behaviors. Use domain knowledge and progressive curriculum learning to shape rewards in alignment with high-level system goals.
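The text does not prescribe a specific shaping method. One well-established technique is potential-based reward shaping (Ng, Harada, and Russell, 1999), where the bonus is `gamma * phi(s') - phi(s)` for a potential function `phi` that encodes domain knowledge; in the single-agent setting this bonus telescopes and does not change which policy is optimal. The queue-length potential below is a hypothetical traffic example.

```python
def potential(queue_lengths: tuple[int, ...]) -> float:
    """Hypothetical domain-knowledge potential: shorter total queues are better."""
    return -float(sum(queue_lengths))


def shaped_reward(reward: float, state: tuple[int, ...], next_state: tuple[int, ...],
                  gamma: float = 0.99, terminal: bool = False) -> float:
    """Potential-based shaping: r + gamma * phi(s') - phi(s).

    The potential of a terminal state is taken as 0, so over an episode the
    shaping terms telescope and do not change which policy is optimal.
    """
    next_phi = 0.0 if terminal else potential(next_state)
    return reward + gamma * next_phi - potential(state)


# Queues at two approaches shrink from (5, 3) to (4, 2): the agent earns a bonus of 2.
print(shaped_reward(0.0, (5, 3), (4, 2), gamma=1.0))  # 2.0
```

Because the bonus depends only on the difference in potential, an agent cannot farm it by cycling between states, which closes one common route to the exploitative behaviour described above.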

Monitor Emergent Behavior Continuously

Multi-agent interactions often produce unexpected emergent behaviors. Continuous monitoring, logging, and analysis of agent interactions help detect anomalies early and adapt policies accordingly.
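A minimal sketch of a monitor over the message log that flags two common symptoms: one agent dominating traffic, and two agents stuck in a back-and-forth loop. The window size and thresholds are arbitrary assumptions to be tuned per system.

```python
from collections import Counter, deque


class InteractionMonitor:
    """Watches recent agent-to-agent messages for two emergent-behaviour symptoms:
    one agent flooding the system, and two agents stuck in a back-and-forth loop.
    Window size and thresholds are assumptions to tune per system.
    """

    def __init__(self, window: int = 50, max_per_agent: int = 20,
                 max_pair_exchanges: int = 10) -> None:
        self.recent: deque = deque(maxlen=window)  # (sender, receiver) pairs
        self.max_per_agent = max_per_agent
        self.max_pair_exchanges = max_pair_exchanges

    def record(self, sender: str, receiver: str) -> list[str]:
        """Log one message and return any alerts it triggers."""
        self.recent.append((sender, receiver))
        alerts = []
        sent = Counter(s for s, _ in self.recent)
        if sent[sender] > self.max_per_agent:
            alerts.append(f"rate: {sender} sent {sent[sender]} of the last {len(self.recent)} messages")
        pair = frozenset((sender, receiver))
        exchanges = sum(1 for s, r in self.recent if frozenset((s, r)) == pair)
        if exchanges > self.max_pair_exchanges:
            alerts.append(f"loop: {sorted(pair)} exchanged {exchanges} messages")
        return alerts


# Simulate a planner and a critic endlessly handing work back and forth.
monitor = InteractionMonitor()
for i in range(12):
    sender, receiver = ("planner", "critic") if i % 2 == 0 else ("critic", "planner")
    for alert in monitor.record(sender, receiver):
        print(alert)
```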

Plan for Scalability and Interoperability

Use interoperable standards and protocols when building a multi-agent AI framework. As system complexity grows, ensure that adding new agents or capabilities doesn’t degrade overall performance or coordination fidelity.

Conclusion

Multi-agent systems represent a powerful paradigm shift in AI. By orchestrating many specialized agents, MAS can address problems that single agents cannot handle alone. In 2025 and beyond, as AI continues to evolve, MAS are poised to tackle ever more ambitious tasks, from managing smart cities and energy networks to automating entire business processes.

Implementing an MAS demands attention to architecture, communication, and safety. But when done well, the payoff is high: systems that are more scalable, flexible, and resilient. Developers and AI engineers can leverage MAS principles to build collaborative intelligence into applications, ultimately creating solutions where the whole truly becomes greater than the sum of its parts.

FAQs

What are multi-agent AI systems?

Multi-agent AI systems (MAS) are systems that consist of multiple autonomous agents that interact, collaborate, or compete within a shared environment. Each agent has its own perception, reasoning, and action capabilities. MAS enables decentralised decision-making, allowing agents to collectively solve complex problems that are difficult or impossible for a single agent to handle efficiently.

What is an example of a multi-agent system?

A prime example of a multi-agent system is Amazon’s warehouse automation system. It uses hundreds of Kiva robots as agents that retrieve items from shelves, while a task allocation agent manages robot assignments and a traffic management agent prevents collisions. These agents communicate and coordinate in real-time to optimise warehouse operations.

What are the 5 types of agent in AI?

According to IBM, the five main types of AI agents are:

Simple Reflex Agents – React directly to percepts using condition-action rules (e.g., if-then logic).

Model-Based Reflex Agents – Use internal models of the world to handle partially observable environments.

Goal-Based Agents – Make decisions based on desired outcomes or goals, using search or planning techniques.

Utility-Based Agents – Choose actions based on a utility function that quantifies preference among different outcomes.

Learning Agents – Adapt and improve performance over time by learning from experience and feedback.

About the author

Amit Eyal Govrin

Amit oversaw strategic DevOps partnerships at AWS, where he repeatedly saw industry-leading DevOps companies struggling with the same pain point: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.