Top 10 AI Orchestration Tools in 2025

Amit Eyal Govrin

TL;DR

- AI orchestration ties together different AI models into smooth workflows that work in real time and scale easily.

- This brings big wins like making operations faster, boosting AI teamwork, centralizing monitoring, adapting with data, and keeping everything compliant.

- To build this, you start by setting clear goals, organizing your data, designing the system, picking the right platform, automating workflows, then monitoring and improving continuously.

- The leading AI orchestration platforms in 2025 cover DevOps-focused tools like Kubiya AI, no-code data platforms like Domo, and Python-friendly orchestrators like Airflow and Prefect.

- Succeeding with AI orchestration means tackling tech complexity, building skills, enforcing security and governance, and embracing modular, iterative development.

As AI continues to transform industries, managing complex AI workflows has become a major challenge. AI orchestration tools play a vital role by automating, coordinating, and connecting diverse AI models, agents, and systems into smooth, efficient processes. Acting like conductors, these tools integrate machine learning, robotic automation, and large language models into unified workflows that enable real-time decisions and governance.

In 2025, AI orchestration solutions range from open-source pipeline schedulers to advanced multi-agent platforms with enterprise-grade security. Selecting the right tool can significantly enhance your organization’s ability to deploy AI at scale and unlock its full business value.

This article explores the top 10 AI orchestration tools shaping the future of AI automation this year, providing detailed comparisons to help you find the platform best suited to your technical requirements and operational goals.

What is AI Orchestration?

AI orchestration is the process of connecting and coordinating multiple AI models, agents, and automated systems into a single, intelligent workflow. Instead of running in isolation, these AI components work together in real time to deliver efficient, scalable, and adaptive automation. This unified approach helps businesses speed up decision-making, improve collaboration between AI services, maintain visibility across operations, and ensure compliance with policies. As AI applications become more complex, orchestration tools are essential to unlock the full potential of automation.

Real-Time AI Orchestration in Ride-Sharing: The Case of SwiftRide

In ride-sharing platforms like SwiftRide, AI models handle different tasks such as predicting rider demand, dynamically pricing rides, and matching drivers. When these models run independently, inefficiencies arise due to lack of coordination.

AI orchestration connects these disparate systems in real time, enabling demand forecasts to update ride matching instantly. Pricing models adjust fares based on current conditions, while the platform continuously optimizes driver-rider pairings. This synchronization delivers a smoother customer experience, better resource utilization, and higher overall platform efficiency.
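The coordination described above can be sketched in a few lines of Python. This is only an illustration: the three functions stand in for the demand, pricing, and matching models, and their names, formulas, and numbers are assumptions made for the example, not part of any real ride-sharing platform.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Current state of one city zone (all fields are illustrative)."""
    open_requests: int
    idle_drivers: int


def forecast_demand(snapshot: Snapshot) -> float:
    # Stand-in for a demand model: ratio of requests to available drivers.
    return snapshot.open_requests / max(snapshot.idle_drivers, 1)


def price_multiplier(demand_ratio: float) -> float:
    # Stand-in for a pricing model: surge follows demand, clamped to 1.0x-2.0x.
    return min(2.0, max(1.0, demand_ratio))


def match_count(snapshot: Snapshot) -> int:
    # Stand-in for a matching model: pair as many riders as there are drivers.
    return min(snapshot.open_requests, snapshot.idle_drivers)


def orchestrate(snapshot: Snapshot) -> dict:
    """Run the models in one pass so pricing sees the fresh demand forecast."""
    demand = forecast_demand(snapshot)
    return {
        "demand_ratio": demand,
        "price_multiplier": price_multiplier(demand),
        "matches": match_count(snapshot),
    }


result = orchestrate(Snapshot(open_requests=30, idle_drivers=20))
```

The point of the `orchestrate` function is that no model reads stale data: the pricing step always receives the forecast computed from the same snapshot that matching uses.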

AI Orchestration vs. AI Workflow Automation

- AI orchestration manages the entire AI ecosystem, ensuring seamless collaboration and scalability.

- AI workflow automation focuses on automating specific tasks within these orchestrated systems.

Orchestration is the foundation that enables businesses to extract value from automated AI workflows.

Understanding AI Orchestration Components

At a high level, AI orchestration connects AI models, data sources, and automated workflows to work cohesively.

Key components include:

- Integration: Seamless data and model interoperability via APIs

- Automation: Scheduling, resource management, and error handling

- Governance: Ensures security, compliance, and auditing

For technical readers, orchestration platforms often offer rich SDKs, config files, and extensible plugins to customize deployment and monitoring.

How AI Orchestration Works

AI orchestration brings together modular components (APIs, AI modules, cloud infrastructure, and orchestration platforms) to automate complex workflows efficiently. APIs enable real-time communication and data exchange between isolated AI services (such as language models, vision modules, or custom microservices). These components are deployed on scalable cloud servers to handle high-volume workloads.

The orchestration platform acts as the control layer that sequences tasks, manages dependencies, and optimizes resource allocation. Developers define workflows declaratively or programmatically, specifying which AI modules to invoke, how data flows between them, and under what conditions agents should parallelize or branch. Automatic scaling, error handling, and monitoring are built in, letting teams focus on application logic rather than deployment complexity.
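To make "sequences tasks and manages dependencies" concrete, here is a minimal sketch that uses Python's standard-library `graphlib` to run tasks in dependency order. The task names and handlers are hypothetical placeholders for real AI modules; a production platform would add scaling, retries, and monitoring on top.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
workflow = {
    "extract_text": set(),
    "classify": {"extract_text"},
    "summarize": {"extract_text"},
    "report": {"classify", "summarize"},
}


def run_workflow(graph, handlers):
    """Execute tasks in dependency order and collect their results."""
    results = {}
    for task in TopologicalSorter(graph).static_order():
        # Hand each task the outputs of the tasks it depends on.
        inputs = {dep: results[dep] for dep in graph[task]}
        results[task] = handlers[task](inputs)
    return results


# Placeholder handlers standing in for calls to real models or services.
handlers = {
    "extract_text": lambda inputs: "invoice 42 overdue",
    "classify": lambda inputs: "billing" if "invoice" in inputs["extract_text"] else "other",
    "summarize": lambda inputs: inputs["extract_text"].upper(),
    "report": lambda inputs: f"{inputs['classify']}: {inputs['summarize']}",
}

results = run_workflow(workflow, handlers)
```

Because `classify` and `summarize` depend only on `extract_text`, an orchestrator is free to run them in parallel; this sketch runs them one after the other for simplicity.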

Benefits of AI Orchestration

- Operational Efficiency: AI orchestration automates the connection and data flow between different tools, so tasks (like chatbots, document processing, and risk assessment) run in a coordinated sequence. This streamlines processes, cuts manual steps, and optimizes resources, leading to faster results and lower costs.

- Improved AI Performance: By linking together multiple specialized models, orchestration tackles complex problems as one smart system. It enables centralized monitoring, adaptive workflows, and continuous learning, so your AI solutions become more accurate and reliable over time.

Example: AI Orchestration in Healthcare Diagnostics

A hospital uses several AI tools: one analyzes medical images, another evaluates patient risk factors, and a third recommends treatments. APIs connect these tools to share data seamlessly, while cloud servers provide scalable computing for heavy workloads. The AI orchestration platform manages this entire workflow by triggering each AI task in sequence, monitoring progress, and handling errors. As a result, imaging results flow automatically to risk assessments, then to treatment recommendations, delivered quickly to doctors. This reduces manual effort, speeds up diagnosis, and improves patient care quality.
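The "trigger each task in sequence and handle errors" behavior can be sketched as follows. Everything here is a toy assumption for illustration: the three step functions, their thresholds, and the field names are invented, and real clinical systems would look nothing like these one-line rules.

```python
import time


def run_with_retry(step, payload, attempts=3, delay_seconds=0.0):
    """Call step(payload), retrying on exceptions up to `attempts` times."""
    last_error = None
    for _ in range(attempts):
        try:
            return step(payload)
        except Exception as error:  # a real system would catch narrower errors
            last_error = error
            time.sleep(delay_seconds)
    raise RuntimeError(f"{step.__name__} failed after {attempts} attempts") from last_error


def analyze_image(case):
    # Placeholder for an imaging model.
    return {**case, "finding": "nodule" if case["scan_score"] > 0.7 else "clear"}


def assess_risk(case):
    # Placeholder for a risk model.
    high = case["finding"] == "nodule" and case["age"] > 60
    return {**case, "risk": "high" if high else "low"}


def recommend(case):
    # Placeholder for a treatment-recommendation model.
    action = "refer to specialist" if case["risk"] == "high" else "routine follow-up"
    return {**case, "recommendation": action}


def diagnose(case):
    """Trigger each step in sequence, passing results forward."""
    for step in (analyze_image, assess_risk, recommend):
        case = run_with_retry(step, case)
    return case


outcome = diagnose({"scan_score": 0.9, "age": 67})
```

Wrapping every step in the same retry helper keeps error handling in the orchestration layer instead of scattering it across the individual models.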

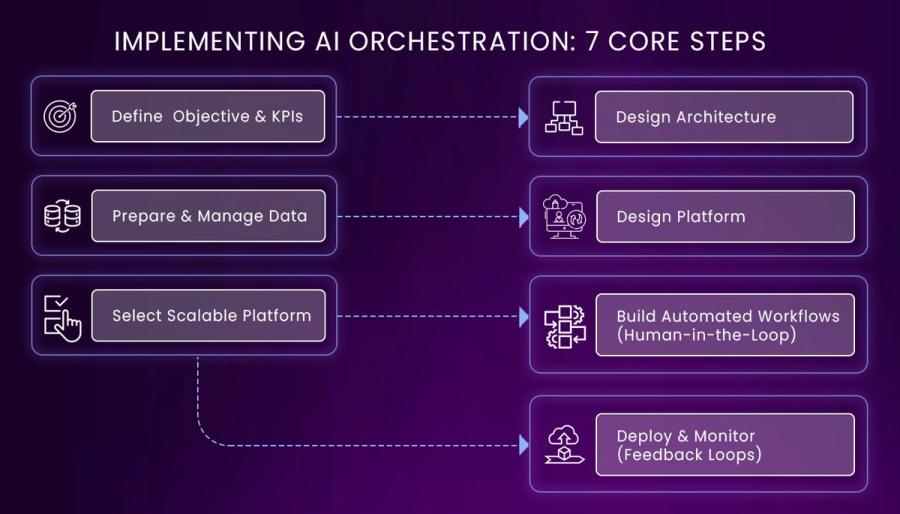

Implementing AI Orchestration: 7 Core Steps

Turning AI orchestration from an idea into action takes a clear, organized approach. The seven steps below guide you through every important phase, from defining goals and designing systems to building workflows and deploying solutions, so that your AI setup is scalable, reliable, and aligned with business objectives.

Step 1: Define Objectives & KPIs (set clear goals)

Step 2: Design Architecture (create systems that connect AI components)

Step 3: Prepare & Manage Data (organize and clean data for AI use)

Step 4: Design Platform (select and plan the underlying platform)

Step 5: Select Scalable Platform (choose tools that grow with your needs)

Step 6: Build Automated Workflows (add human-in-the-loop processes if required)

Step 7: Deploy & Monitor (send the workflows live and use feedback for improvement)

By following these seven steps, organizations can ensure their AI orchestration projects are reliable, scalable, and aligned with business goals. Each stage, from planning through deployment and monitoring, helps avoid silos, reduce integration headaches, and create workflows that adapt as business needs grow.

For example:

Suppose a hospital wants to orchestrate its AI systems for patient diagnostics. By first setting clear goals and KPIs, they ensure every model supports the right clinical outcomes. The architecture enables instant data flow between imaging AI, predictive analytics, and patient records. With a scalable platform, they build workflows where human doctors can review AI suggestions (human-in-the-loop). By integrating, testing, and then monitoring these workflows, the hospital continuously improves both speed and reliability of care—delivering fast, actionable insights while maintaining strict compliance.
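A human-in-the-loop step like the one in this example can be sketched as a simple gate: AI suggestions are prioritized for review, and nothing is released until a clinician signs off. The class, field names, and the 0.8 threshold are illustrative assumptions, not a reference design.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    """An AI-generated suggestion awaiting clinician review (illustrative)."""
    patient_id: str
    text: str
    confidence: float
    approved_by: Optional[str] = None


def review_priority(suggestion: Suggestion, threshold: float = 0.8) -> str:
    """Low-confidence suggestions move to the front of the review queue."""
    return "urgent" if suggestion.confidence < threshold else "standard"


def release(suggestion: Suggestion) -> str:
    """Release a suggestion only after a clinician has signed off."""
    if suggestion.approved_by is None:
        raise PermissionError("suggestion has not been reviewed")
    return f"{suggestion.text} (approved by {suggestion.approved_by})"


suggestion = Suggestion("p-1", "order follow-up CT", confidence=0.62)
priority = review_priority(suggestion)

# A clinician reviews and approves; only then can the workflow continue.
suggestion.approved_by = "dr_lee"
released = release(suggestion)
```

Recording the approver on the suggestion itself also gives the audit trail that compliance requirements usually call for.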

Having explored the core concepts, let’s now consider the leading AI orchestration platforms, each excelling in a different enterprise domain.

We start with Kubiya AI, a platform built for DevOps automation and multi-agent orchestration, followed by Domo’s no-code approach to AI pipelines and Python-centric tools like Airflow for data workflows.

Unleashing the Top AI Orchestration Platforms of 2025

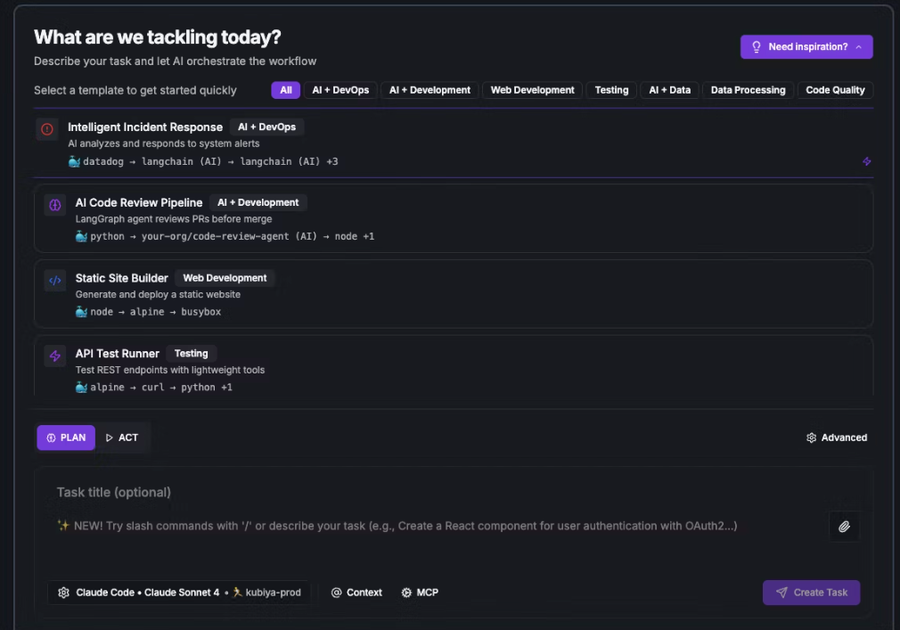

1. Kubiya AI

Kubiya is a modular multi-agent AI orchestration platform designed primarily for DevOps automation. It allows users to trigger complex workflows using natural language commands in Slack, Teams, or web consoles. Kubiya specializes in automating infrastructure provisioning (via Terraform), CI/CD pipelines, incident management, and approval workflows. Its strengths include contextual memory that retains organizational and workspace state, role-based access control for security, and tight integration with cloud and DevOps tools. Kubiya is valued in enterprises that require reliable, secure, and scalable AI automation.

Key Features of Kubiya.ai for AI Orchestration

- Context-Rich Agent Intelligence: Kubiya agents have live access to infrastructure, APIs, logs, and cloud platforms, enabling decisions based on the full system state, not just isolated prompts. This enhances reliability and accuracy in automation.

- Deterministic Execution: Kubiya ensures workflows produce the same output for identical inputs every time, which is critical for predictable, safe automation in sensitive infrastructure and DevOps environments.

- Zero Trust Security & Policy Enforcement: Kubiya offers enterprise-grade security with role-based access control (RBAC), single sign-on (SSO), audit trails, just-in-time (JIT) approvals, and an embedded policy engine that enforces compliance and operational guardrails.

- Modular Multi-Agent Framework with Extensive Integrations: Kubiya’s modular agents specialize in areas like Terraform, Kubernetes, GitHub, and CI/CD pipelines, coordinating complex workflows seamlessly. It integrates natively with major cloud providers, collaboration tools, and monitoring platforms, enabling broad automation coverage.

Enterprise Use Case: Accelerating Infrastructure Automation with Kubiya

Large enterprises often struggle with slow and error-prone cloud infrastructure provisioning due to manual workflows, long approval cycles, and compliance risks. Kubiya solves this by enabling developers to use simple natural language commands in Slack to request complex infrastructure setups. Kubiya’s multi-agent orchestration interprets intent, applies organizational policies, coordinates Terraform deployments, and manages approvals automatically.

Key Benefits:

- Faster provisioning: reduces setup times from days to hours

- Policy adherence: automatic enforcement of security and compliance rules

- Developer empowerment: self-service infrastructure provisioning without scripting

- Full auditability: detailed logs and real-time status updates in Slack

This use case shows how Kubiya’s context-aware AI agents and policy-driven workflows put the key features above into practice, making traditional DevOps operations more efficient, secure, and scalable.
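The "apply organizational policies, then manage approvals" step of this use case can be illustrated with a small policy check. This is a generic sketch in plain Python and does not use Kubiya's policy engine or API; the policy fields, region names, and limits are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical organizational policy, expressed as plain data.
POLICY = {
    "allowed_regions": {"us-east-1", "eu-west-1"},
    "max_instances_without_approval": 3,
}


@dataclass(frozen=True)
class ProvisionRequest:
    """A parsed infrastructure request (fields are illustrative)."""
    requester: str
    region: str
    instance_count: int


def evaluate(request: ProvisionRequest, policy: dict = POLICY) -> str:
    """Return 'deny', 'needs_approval', or 'allow' for a request."""
    if request.region not in policy["allowed_regions"]:
        return "deny"
    if request.instance_count > policy["max_instances_without_approval"]:
        return "needs_approval"
    return "allow"


small = evaluate(ProvisionRequest("dana", "us-east-1", 2))
large = evaluate(ProvisionRequest("dana", "us-east-1", 10))
blocked = evaluate(ProvisionRequest("dana", "ap-south-1", 1))
```

Keeping the policy as data rather than code is what makes it reviewable and auditable: changing a limit is a one-line diff that can go through the same approval process as any other change.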

Pros

- Goal-Driven Automation: Kubiya turns vague requests into safe, auditable actions, cutting down manual work and speeding up DevOps tasks.

- Contextual Memory: It remembers past commands and workspace context to avoid mistakes across teams and projects.

- Policy-as-Code: Built-in compliance and governance with role-based access, approvals, and detailed logs.

- Easy Integration: Works smoothly with Slack and CLI, letting developers control automation with chat or command line.

Cons

- Complex for Small Teams: Might be too advanced or unnecessary for smaller teams or simple tasks.

- Learning Curve: Takes some time to fully understand how its multi-agent system and policies work.

- Smaller Community: The user and developer community is still growing compared to open-source alternatives.

Getting Started with Kubiya AI Orchestration

Kubiya AI is a powerful platform for automating complex workflows across DevOps, cloud infrastructure, and business operations using multi-agent orchestration with real-time context awareness. Follow these steps to set up and begin using Kubiya.

Step 1: Install Kubiya CLI

Kubiya CLI is your primary interface to manage agents, workflows, and integrations.

bash

# Install Kubiya CLI on macOS/Linux/Windows
curl -fsSL https://raw.githubusercontent.com/kubiyabot/cli/main/install.sh | bash

# Verify installation
kubiya --version

# Set your API key for authentication (replace with your key)
export KUBIYA_API_KEY="your-api-key"

Step 2: Connect Your Tools and Infrastructure

Securely link your cloud accounts (AWS, Kubernetes, GitHub, Jira) through the Kubiya dashboard or CLI to enable context-aware automation.

bash

# List available Kubiya agents
kubiya agent list

# Describe a specific agent to explore capabilities
kubiya agent describe devops-assistant --output yaml

Step 3: Create or Use an Agent

You can create a custom agent with specific tools or leverage prebuilt templates suited for DevOps automation.

Example of creating an agent via API:

bash

curl -X POST "https://api.kubiya.ai/api/v1/agents" \
  -H "Authorization: UserKey $KUBIYA_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "name": "devops-expert",
    "description": "DevOps and infrastructure specialist",
    "tools": ["aws-ec2", "kubernetes"]
  }'
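For teams that prefer scripting the same call in Python, the sketch below builds an equivalent request with the standard library. The endpoint, the `UserKey` authorization scheme, and the payload fields are copied from the curl example above and have not been verified independently; the helper name `build_create_agent_request` is made up for this example. The request is constructed but deliberately not sent.

```python
import json
import os
import urllib.request


def build_create_agent_request(name, description, tools):
    """Build (but do not send) the POST request from the curl example."""
    body = json.dumps({"name": name, "description": description, "tools": tools})
    return urllib.request.Request(
        "https://api.kubiya.ai/api/v1/agents",
        data=body.encode("utf-8"),
        headers={
            # Reads the key exported earlier; empty string if it is not set.
            "Authorization": f"UserKey {os.environ.get('KUBIYA_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_agent_request(
    "devops-expert", "DevOps and infrastructure specialist", ["aws-ec2", "kubernetes"]
)
# To actually send it: urllib.request.urlopen(request)
```

Separating request construction from sending makes the call easy to inspect or unit-test before it touches a live account.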

Step 4: Define a Workflow Using Python SDK

Use the Kubiya workflow SDK to script multi-step automation tasks like Terraform infrastructure provisioning.

python

from kubiya_workflow_sdk import Workflow, Step

# Two-step workflow: preview the Terraform changes, then apply them.
workflow = Workflow(
    name="deploy_app",
    description="Deploy to staging using Terraform",
    steps=[
        Step(
            name="terraform_plan",
            image="hashicorp/terraform:1.0.0",
            command="terraform plan"
        ),
        Step(
            name="terraform_apply",
            image="hashicorp/terraform:1.0.0",
            command="terraform apply -auto-approve"
        ),
    ]
)

workflow.execute()

Step 5: Agent Configuration Example (YAML)

Agents can be customized through configuration files specifying their tools and environment.

yaml

name: devops-expert
description: Manages infrastructure workflows
tools:
  - aws-ec2
  - kubernetes
environment:
  KUBECONFIG: /app/.kube/config
  KUBECTL_VERSION: "1.27"

Step 6: Test and Deploy

- Test your workflows in Kubiya’s sandbox environment.

- Use built-in observability and runbooks to monitor, debug, and optimize workflows.

- Promote workflows to production with Kubernetes-native scalability for reliability.

For community support, Kubiya offers a dedicated service desk portal for submitting and tracking issues at https://kubiya.atlassian.net/servicedesk/customer/portal/4.

You can also explore and contribute to their open-source projects, including CLI and agent templates, in the Kubiya GitHub organization: https://github.com/kubiyabot.

For tutorials, guides, and updates, visit the official blog at https://www.kubiya.ai/blog. These resources provide excellent help and engagement opportunities for Kubiya users.

2. Domo

Domo is a business intelligence and no-code AI orchestration platform designed to empower business teams with predictive analytics, data pipeline automation, and actionable insights. It provides an intuitive visual interface to connect diverse data sources, automate AI model scoring, and trigger business workflows without programming expertise. Domo is ideal for enterprises seeking to democratize AI and automate data-driven decision-making across non-technical users.

Key Features of Domo for AI Orchestration

- No-Code AI Agents: Create custom AI agents that automate tasks, adapt dynamically, and grow with your business needs without requiring coding skills.

- Unified Data Integration and Preparation: Connect, centralize, and prepare data from any source with powerful ETL capabilities, ensuring clean, structured, and reliable datasets for AI.

- AI-Assisted Workflows: Automate workflows from data preparation to forecasting, enabling teams to save time and focus on strategic priorities.

- Secure and Governed Platform: Built-in security, compliance frameworks, and proactive alerting provide scalable governance, reduce risks, and maintain data quality.

- Conversational AI and Insight Sharing: Leverage AI chat integrated into content for natural conversations and personalized insights delivery directly to users and customers.

Enterprise Use Case: Empowering Data-Driven Decisions with Domo

A multinational corporation uses Domo to centralize and prepare massive datasets from various cloud data warehouses and on-premise sources. Business teams leverage Domo’s no-code AI agents to automate model scoring and trigger workflows, such as sales forecasting and inventory optimization, without involving IT or data science teams directly.

Domo’s visual dashboards deliver real-time insights and embed conversational AI that guides non-technical users through data exploration and decision-making. This seamless automation and democratization of AI reduces manual effort, improves decision quality, and accelerates time to actionable intelligence.

Key Benefits:

- Simplified data preparation and integration

- Empowerment of business users through no-code AI agents

- Real-time predictive analytics driving operational efficiency

- Enhanced governance and data compliance

Pros

- User-friendly no-code AI and workflow automation for business teams

- Robust data integration and preparation capabilities

- Secure platform with proactive governance and compliance

- Built-in conversational AI facilitating easy insights sharing

Cons

- Limited customization compared to developer-centric AI platforms

- Complex enterprise features may require onboarding and training

- Best suited for data-driven business users rather than pure DevOps or engineering teams

- May involve licensing costs based on data volume and usage

Getting Started with Domo AI Orchestration

Step 1: Sign Up and Access

- Register for a Domo account and access the web-based dashboard.

Step 2: Connect Data Sources

- Use the visual integration tools to link databases, cloud platforms, and SaaS applications.

Step 3: Build AI Agents and Workflows

- Use the no-code AI agent builder to create automated tasks and workflows tailored to your business objectives.

Step 4: Visualize and Share Insights

- Create interactive dashboards and embed AI-powered conversational interfaces to deliver actionable insights.

Step 5: Monitor and Govern

- Apply built-in security policies, audit logs, and compliance frameworks to ensure data governance.

Community Support and Resources

- Official Documentation and Tutorials: Available at Domo Learning Center

- Community Forums: Engage with users and experts on the Domo Community platform

- Customer Support: Includes onboarding assistance, training, and ticket-based help

3. Kubeflow

Kubeflow is an open-source machine learning platform designed to enable scalable and portable ML workflows on Kubernetes. It helps data scientists and ML engineers build, deploy, and manage production-ready machine learning models with a focus on Kubernetes-native tooling and infrastructure abstraction.

Key Features of Kubeflow

- Kubernetes-Native: Fully leverages Kubernetes for container orchestration, scaling, and resource management.

- Composable ML Pipelines: Allows definition of complex ML workflows as reusable, modular components.

- Multi-Framework Support: Integrates with TensorFlow, PyTorch, XGBoost, and custom ML frameworks.

- Centralized Model Management: Manages model training, deployment, monitoring, and lifecycle in one platform.

- UI and CLI Tools: Includes web UI for pipeline control and a CLI for automation and scripting.

- Scalability: Supports distributed training and serving at scale using Kubernetes resources.

- Extensibility: Supports custom operators, plugins, and integration with cloud services and storage.

Enterprise Use Case: Production ML Workflow with Kubeflow

A large company runs several ML projects with TensorFlow and other frameworks, using Kubeflow to orchestrate end-to-end workflows. Data scientists design pipelines to preprocess data, train models on distributed GPU pods, validate results, and deploy the best models to serving endpoints. Kubeflow manages resource allocation, versioning, and scaling transparently, allowing teams to focus on model development. The platform also monitors performance and enables automated retraining triggered by new data.

Key Benefits:

- Containerized, cloud-native ML workflows ensuring portability and scalability.

- Seamless integration with Kubernetes ecosystem and cloud platforms.

- Efficient handling of complex training workloads and multi-step pipelines.

- Centralized model lifecycle and experimentation management.

- Strong community and continuous innovation from contributors.

Pros

- Kubernetes-centric design aligns with modern cloud infrastructure.

- Strong support for distributed training and multi-framework pipelines.

- Rich UI and SDK support for pipeline creation and deployment.

- Flexible, extensible architecture for custom ML needs.

Cons

- Requires Kubernetes expertise for setup and maintenance.

- Steep learning curve for users new to Kubernetes or container orchestration.

- Some features may require ongoing customization for specific enterprise use cases.

Getting Started with Kubeflow

- Install Kubeflow: Deploy Kubeflow to a Kubernetes cluster using manifests or the Kubeflow command-line tool.

- Configure Kubernetes Cluster: Ensure cluster resources and permissions fit Kubeflow requirements.

- Create ML Pipelines: Use the Kubeflow Pipelines SDK or UI to define and submit pipeline workflows.

- Train and Deploy Models: Launch distributed training jobs and deploy trained models with ease.

- Monitor and Manage: Use the dashboard to track pipeline runs, model versions, and system health.

Resources and Community Support

- Official Documentation: https://www.kubeflow.org/docs/

- GitHub Repository: https://github.com/kubeflow/kubeflow

- Community Forums and Slack channels for active discussions.

- Tutorials and webinars for beginners and advanced users.

4. IBM watsonx Orchestrate

IBM watsonx Orchestrate is an enterprise AI workflow automation platform designed to integrate AI-driven automation seamlessly into business processes. It leverages natural language triggers and robust governance frameworks to automate workflows in areas such as customer support, finance operations, and human resources. watsonx Orchestrate supports hybrid cloud deployments and tightly integrates with IBM’s AI portfolio, enabling enterprises to combine scalable AI automation with strong compliance controls.

Key Features of watsonx Orchestrate

- Natural Language Workflow Triggers: Users interact with the platform using intuitive natural language commands to initiate complex workflows.

- Enterprise Governance & Compliance: Integrated governance and compliance guardrails ensure workflows adhere to organizational policies and regulatory requirements.

- Hybrid Deployment Flexibility: Supports cloud, on-premises, and hybrid deployment architectures to fit diverse enterprise environments.

- Deep IBM AI Ecosystem Integration: Seamlessly connects with IBM Watson services and AI models for advanced cognitive automation.

- Multi-Domain Workflow Automation: Automates cross-functional processes across customer care, finance, HR, and other departments.

Enterprise Use Case: Enhancing Service Operations with watsonx Orchestrate

A large financial institution adopted watsonx Orchestrate to automate customer support and back-office operations. Using natural language inputs, employees can trigger workflows like processing loan applications or handling service requests. The platform coordinates various backend systems while ensuring compliance through embedded governance policies. This automation leads to faster processing times, reduced manual errors, and improved customer satisfaction.

Key Benefits:

- Increased operational efficiency and speed

- Improved policy compliance and reduced risk

- Enhanced employee productivity with AI assistance

- Scalable automation across hybrid environments

This use case illustrates how watsonx Orchestrate enables enterprises to embed AI into day-to-day operations securely and effectively.

Pros

- User-friendly natural language interface that lowers automation barriers.

- Strong governance and compliance tailored for regulated industries.

- Flexible deployment options fitting diverse IT landscapes.

- Deep integration with IBM’s AI and analytics portfolio.

Cons

- Primarily suited for enterprises already invested in IBM technologies.

- May require upfront change management for workforce adoption.

- Complexity in configuring workflows for highly customized processes.

- Less flexibility outside the IBM AI ecosystem compared to open platforms.

Getting Started with watsonx Orchestrate

1. Sign Up and Access

- Request access via the IBM watsonx platform portal.

- Log in to the watsonx dashboard with your credentials.

2. Connect Business Systems

- Use the visual connectors or APIs provided by IBM to integrate your backend systems, cloud services, and data sources.

- Set up connectors for CRM and ERP systems, cloud platforms such as AWS and Azure, and other data sources.

3. Build AI-Powered Workflows

- Use the visual, no-code workflow designer or select prebuilt templates.

- Define triggers based on natural language commands or event-driven actions.

- Drag and drop actions, validations, and decision points to automate processes like customer onboarding, claims handling, or approval flows.

4. Monitor and Govern

- Track workflow execution with built-in dashboards.

- Use audit logs, compliance reports, and governance features to ensure security and regulatory compliance.

- Adjust workflows as needed based on analytics insights.

5. Scale and Optimize

- Expand automation to new use cases.

- Tune AI models, improve rules, and leverage continuous improvement tools.

- Use built-in AI model management for ongoing refinement.

Optional: Programmatic API Example (for automation or integration)

bash

curl -X POST "https://api.watsonx.ibm.com/orchestrate/v1/workflows/{workflow_id}/execute" \
  -H "Authorization: Bearer your_access_token" \
  -H "Content-Type: application/json" \
  -d '{
    "inputs": {
      "customer_id": "12345",
      "request_type": "loan_approval"
    }
  }'

Community Support and Resources

- IBM watsonx Support: Official IBM support portal and customer success teams.

- IBM Developer Community: Engage with experts and peers through forums and tutorials at IBM Developer.

- Documentation and Tutorials: Comprehensive resources available at IBM watsonx docs.

- Enterprise Onboarding: Dedicated onboarding and training programs for enterprise customers.

5. UiPath Agentic Automation

UiPath enhances traditional Robotic Process Automation (RPA) by integrating AI agent orchestration, enabling bots to make intelligent decisions and coordinate complex workflows across finance, supply chain, customer service, and more. Combining vision-based AI, natural language processing, and machine learning, UiPath supports both human-assisted and fully autonomous automation, boosting operational efficiency and driving end-to-end intelligent automation in enterprises.

Key Features of UiPath Agentic Automation

- AI-Powered Decision Making: Bots leverage AI models to make context-aware decisions during process execution.

- Vision AI & NLP: Enables understanding and processing of unstructured data and natural language inputs for smarter automation.

- Hybrid Automation: Supports attended automation for human-bot collaborations and fully unattended workflows.

- Orchestration & Scalability: Robust orchestration layer manages bot fleets and automates complex multi-step, cross-system workflows.

- Industry-Specific Solutions: Tailored automation accelerators for banking, healthcare, insurance, public sector, and manufacturing.

Enterprise Use Case: Streamlining Finance Operations with UiPath

A global financial services firm uses UiPath to automate repetitive tasks such as client onboarding, loan processing, and exception handling. AI-driven bots extract data from documents, validate compliance rules, and collaborate with human agents for approvals. This hybrid automation approach reduced manual efforts, accelerated operations, and saved millions in operational costs.

Key Benefits:

- Reduced processing times dramatically (e.g., from 27 days to 12 hours for claims processing)

- Improved accuracy and compliance with intelligent validation

- Enabled workforce to focus on complex, high-value tasks

- Scaled automation across multiple business units seamlessly

Pros

- Combines RPA reliability with advanced AI decision-making capabilities.

- Strong support for attended and unattended automation scenarios.

- Industry-tailored accelerators reduce implementation time.

- Mature platform with extensive enterprise adoption and community.

Cons

- Complexity in deploying and managing AI models alongside RPA workflows.

- Requires skilled resources for setting up advanced AI integrations.

- Pricing and licensing can be significant for large-scale deployments.

- May involve integration challenges with legacy systems.

Getting Started with UiPath Agentic Automation

1. Sign Up and Access

- Create a UiPath account at the UiPath Automation Cloud portal.

- Log in to access the Automation Cloud dashboard.

2. Install UiPath Studio

- Download and install UiPath Studio from the official page.

- Use the visual designer to build workflows integrating both AI and RPA components.

3. Connect AI Models with AI Fabric

- Deploy and manage machine learning models in workflows using UiPath AI Fabric through Studio or APIs.

Example API call to deploy a model:

python

import requests

# Endpoint and token placeholder as given in this guide
api_url = "https://cloud.uipath.com/ai-fabric/api/v2/models"

headers = {
    "Authorization": "Bearer YOUR_API_TOKEN",
    "Content-Type": "application/json"
}

payload = {
    "name": "predictive-model",
    "modelType": "classification",
    "modelPath": "path_to_model.pkl",
    "description": "Customer churn prediction"
}

# Submit the model definition and print the JSON response
response = requests.post(api_url, headers=headers, json=payload)
print(response.json())

4. Configure Bots and Orchestrator

- Use UiPath Orchestrator to schedule, manage, and monitor bot execution.

- You can create robots, schedule processes, and monitor jobs via UI or API/CLI.

Example CLI commands:

bash

# Authenticate CLI
uipathorch login --tenant YOUR_TENANT --username YOUR_USERNAME --password YOUR_PASSWORD

# Create a robot
uipathorch robot create --name "MyBot" --type "Attended"

# Start a process
uipathorch process start --name "OrderFulfillment"

5. Deploy and Monitor Workflows

- Deploy workflows to production and use Orchestrator dashboards for monitoring logs, performance, and job status.

- Example API call to start a job:

bash

curl -X POST "https://cloud.uipath.com/odata/Jobs/UiPath.Server.Configuration.OData.StartJobs" \
  -H "Authorization: Bearer YOUR_ACCESS_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"startInfo": {"ReleaseKey": "release_key_here", "Strategy": "All", "RobotIds": [123], "NoOfRobots": 0}}'

Community Support and Resources

- UiPath Forum: Active community for discussions and troubleshooting

- UiPath Academy: Free training resources and certifications

- Official Documentation: Comprehensive guides at https://docs.uipath.com/

- Marketplace: Pre-built components and workflow templates available at https://marketplace.uipath.com/

6. Microsoft AutoGen

AutoGen is a Microsoft research framework enabling collaborative multi-agent AI workflows. Multiple AI agents interact, delegate tasks, and collectively solve large problems, such as generating and testing code, producing documentation, or engaging in conversational tasks. AutoGen emphasizes conversational agent collaboration, making it ideal for advanced AI automation involving complex coordination and teamwork.

Key Features of Microsoft AutoGen

- Asynchronous Messaging: Facilitates event-driven and request/response communication among agents for robust collaboration.

- Modular and Extensible: Allows customization via pluggable components including custom agents, tools, models, and memory systems.

- Observability & Debugging: Built-in tools with OpenTelemetry support provide comprehensive tracking, tracing, and debugging capabilities.

- Scalable & Distributed: Supports complex distributed agent networks that can operate across organizational boundaries seamlessly.

- Community & Built-in Extensions: Offers advanced model clients, multi-agent teams, and tools with an extensible architecture encouraging open-source contributions.

- Cross-Language Support: Enables interoperability between agents built in Python and .NET, with plans for more languages.

- Strong Type Support: Enforces type checking at build time to ensure high code quality and robustness.

Enterprise Use Case: Collaborative AI Automation with AutoGen

A software development organization uses Microsoft AutoGen to automate code generation, review, and documentation collaboratively by multiple AI agents. One agent generates code snippets, another tests and validates the code, while a third creates detailed documentation and usage guides. The agents communicate asynchronously, dynamically share tasks, and provide feedback to improve output quality.

Key Benefits:

- Streamlined software development lifecycle with AI teamwork

- Reduced manual coding, testing, and documentation time

- Enhanced code quality through multi-agent validation and collaboration

- Scalable architecture supporting complex coordination workflows

This example demonstrates AutoGen’s strong value in accelerating software engineering and advanced AI automation involving multiple cooperative agents.

Pros

- Enables sophisticated multi-agent collaboration for complex AI workflows.

- Modular design supports extensive customization and community-driven enhancements.

- Advanced observability and debugging enhance development and maintenance.

- Open-source framework fostering research and innovation.

Cons

- Primarily research-focused with a steep learning curve for enterprise adoption.

- Requires developer expertise in multi-agent system concepts and asynchronous programming.

- Currently offers fewer production-ready integrations than commercial platforms.

- Cross-language support is evolving and may limit some interoperability use cases.

Getting Started with Microsoft AutoGen

1. Access the Framework

- Clone the open-source AutoGen repository from Microsoft’s official GitHub:

bash

git clone https://github.com/microsoft/autogen.git

cd autogen

2. Set Up Environment

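- Install the Python packages (and optionally the .NET SDK if needed). Recent AutoGen releases are published on PyPI, so the cloned repository is mainly useful for its samples:

bash

pip install -U "autogen-agentchat" "autogen-ext[openai]"

3. Explore Samples

- Review the sample workflows in the repository (under python/samples/ at the time of writing) to understand multi-agent collaboration.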

4. Build Custom Agents

- Create agents using the SDK by defining agents, tools, and memory components.

5. Run & Debug

- Use built-in tracing and logging tools for debugging multi-agent interactions.

6. Extend and Scale

- Customize workflows and deploy distributed agent networks as needed for your application.

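Simple Code Example: Creating and Running Two Cooperative Agents

The sketch below uses the AgentChat API from AutoGen 0.4 (the autogen-agentchat and autogen-ext[openai] packages). It assumes an OPENAI_API_KEY environment variable is set; the model name, agent names, and prompts are placeholders.

python

import asyncio

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.conditions import MaxMessageTermination
from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.ui import Console
from autogen_ext.models.openai import OpenAIChatCompletionClient

# Define a simple tool: a plain async function with type hints and a docstring
async def echo(text: str) -> str:
    """Return the input text prefixed with 'Echo:'."""
    return f"Echo: {text}"

async def main() -> None:
    # The client reads OPENAI_API_KEY from the environment
    model_client = OpenAIChatCompletionClient(model="gpt-4o")

    # Define two agents that share the same tool
    agent1 = AssistantAgent("agent_1", model_client=model_client, tools=[echo],
                            system_message="Greet the other agent using the echo tool.")
    agent2 = AssistantAgent("agent_2", model_client=model_client, tools=[echo],
                            system_message="Reply to the greeting using the echo tool.")

    # Agents take turns; the run stops after four messages
    team = RoundRobinGroupChat([agent1, agent2],
                               termination_condition=MaxMessageTermination(4))
    await Console(team.run_stream(task="Exchange a short greeting."))
    await model_client.close()

asyncio.run(main())

This example creates two simple agents that share an echo tool and take turns in a round-robin team, illustrating the basic building blocks of AutoGen’s multi-agent collaboration.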

Community Support and Resources

- GitHub Repository: Access source code, samples, and community discussions at Microsoft’s AutoGen GitHub page.

- Microsoft Research Publications: Follow research articles and updates discussing AutoGen advancements.

- Developer Forums: Engage in discussions on Microsoft AI developer forums and open-source communities.

- Documentation: Comprehensive guides and API references with example workflows to aid onboarding.

7. SuperAGI

SuperAGI is an open-source platform for autonomous multi-agent orchestration. It supports complex task planning, execution, and continuous learning by coordinating multiple AI agents. SuperAGI is suitable for research, experimentation, and enterprise applications where autonomous workflows need to adapt over time. It offers an extensible architecture that allows integration with external APIs and services.

Key Features of SuperAGI

- Autonomous Multi-Agent Coordination: Supports dynamic task delegation and collaboration among agents to solve complex problems.

- Continuous Learning and Adaptation: Incorporates reinforcement learning and feedback loops to evolve agent behaviors over time.

- Extensible Plug-in Architecture: Easily integrate third-party APIs, workflow tools, and custom modules to tailor workflows.

- Open-Source Platform: Enables flexibility, transparency, and community-driven innovation.

- Scalable and Modular Design: Allows scaling of agent networks and workloads for diverse applications.

Enterprise Use Case: Adaptive Workflow Automation with SuperAGI

An enterprise uses SuperAGI to automate and optimize customer engagement and sales workflows. Multiple AI agents coordinate to identify high-value leads, conduct multi-channel outreach, and provide personalized customer interactions. The system learns from past campaign results, dynamically adjusting agent strategies to maximize pipeline conversions and customer lifetime value.

Key Benefits:

- Increased lead conversion through intelligent multi-agent collaboration

- Reduced operational overhead with autonomous automation

- Continuous improvement via AI-driven learning and feedback

- Unified orchestration of complex, evolving workflows

This use case highlights SuperAGI’s strength in enabling resilient, adaptive AI-driven business workflows.

Pros

- Flexible and open-source for customization and research.

- Supports complex multi-agent systems with autonomous collaboration.

- Enables continuous learning and adaptive workflows.

- Strong modularity for integrating diverse tools and APIs.

Cons

- Requires technical expertise to deploy and customize effectively.

- Community is still maturing compared to some commercial platforms.

- Limited out-of-the-box integrations compared to established tools.

- May need significant setup and tuning for enterprise readiness.

Getting Started with SuperAGI

1. Install and Clone

- Clone the SuperAGI repository from GitHub:

bash

git clone https://github.com/TransformerOptimus/SuperAGI.git

cd SuperAGI

2. Set Up Environment

- Install dependencies and configure AI models and agent settings:

bash

pip install -r requirements.txt

# Additional setup as per documentation for model API keys and config files

3. Configure Agents

- Define agents and collaboration protocols via configuration files or Python scripts.

4. Run Workflows

- Start multi-agent orchestration workflows and monitor logs for progress and debugging.

5. Iterate and Learn

- Use feedback and learning modules to improve agent strategies and refine workflows over time.

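Simple Code Example: Defining a Custom SuperAGI Tool

SuperAGI agents are typically created and run from its web interface, so the most common code-level extension point is a custom tool. The sketch below follows the BaseTool pattern used by tools in the SuperAGI repository; the EchoInput and EchoTool names are illustrative, and details may differ between versions.

python

from typing import Type

from pydantic import BaseModel, Field
from superagi.tools.base_tool import BaseTool

# Input schema the agent fills in when it calls the tool
class EchoInput(BaseModel):
    message: str = Field(..., description="Text to echo back")

# Define a simple tool
class EchoTool(BaseTool):
    name: str = "Echo Tool"
    args_schema: Type[BaseModel] = EchoInput
    description: str = "Echoes the given message"

    def _execute(self, message: str = None):
        return f"Echo: {message}"

This example defines a basic echo tool that a SuperAGI agent can be equipped with, demonstrating the fundamental mechanism for extending agent behavior.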

Community Support and Resources

- GitHub Repository: Access source code, documentation, and community discussions at https://github.com/TransformerOptimus/SuperAGI

- Developer Forums: Engage with the open-source community for support and collaboration.

- Documentation: Detailed setup guides and API references available in the repository.

- Community Contributions: Open framework encourages extensions and plug-ins from users.

8. Anyscale (Ray ecosystem)

Anyscale leverages the open-source Ray project to provide a cloud-native platform for orchestrating distributed AI workloads. It excels in scaling machine learning training, distributed inference, and real-time AI applications. Anyscale offers tools for managing cluster resources, fault tolerance, and autoscaling. It's popular among AI engineering teams needing to operationalize large models and serve them with low latency across diverse environments.

Key Features of Anyscale

- Seamless Scaling: Automatically scale Ray clusters elastically across CPUs and GPUs in cloud or on-premise environments.

- Fault Tolerant Deployments: Built-in resilience with health monitoring, node draining, and zero-downtime upgrades.

- Workload Observability: Advanced profiling, logging, and dashboards for debugging and performance optimization.

- Integrated Development Environments: Cloud-based IDEs accessible via VSCode, Jupyter, and more for fast iteration.

- Cost Governance: Tools for budget monitoring, spot instance management, and optimized resource utilization with RayTurbo.

- Ecosystem Integration: Supports popular ML libraries (TensorFlow, PyTorch), workflow orchestration (Airflow), and data platforms (Databricks, Snowflake).

Enterprise Use Case: Scaling AI Model Training & Inference with Anyscale

Global companies use Anyscale to run distributed training of large language models (LLMs) and conduct batch and online inference at scale. For example, a video streaming service leverages Anyscale to fine-tune LLMs for content recommendation and metadata tagging, achieving faster model iteration and reduced infrastructure costs. The platform's autoscaling and fault-tolerance features enable production stability and low-latency serving to millions of users worldwide.

Key Benefits:

- Faster iteration and deployment of AI models

- Efficient resource utilization leading to cost savings

- Real-time model serving with high availability

- Unified platform simplifying infrastructure management

Pros

- Enterprise-ready platform simplifying complex Ray infrastructure.

- Robust autoscaling and fault-tolerant features.

- Integrated development and monitoring tools for faster workflows.

- Deep compatibility with AI/ML ecosystem and data platforms.

Cons

- Requires some expertise to optimize cluster configurations.

- Cloud dependency may limit self-hosting flexibility for some.

- Cost considerations for large-scale deployments.

- Platform complexity may need dedicated operational teams.

Getting Started with Anyscale

- Create an Account: Sign up on the Anyscale platform and log in to the cloud dashboard.

- Launch Ray Clusters: Use Anyscale UI or CLI to deploy Ray clusters on preferred cloud providers with CPU/GPU configurations.

bash

# Log in to Anyscale CLI
anyscale login

# Create a new cluster using a config file
anyscale cluster create my-ray-cluster --config example-config.yaml

# Start the cluster
anyscale cluster start my-ray-cluster

# Check cluster status
anyscale cluster status my-ray-cluster

CLI subcommands differ between Anyscale releases, so treat the commands above as illustrative and confirm them against the current CLI reference.

An example cluster config (example-config.yaml); the field names are illustrative:

text

cluster_name: my-ray-cluster
provider:
  type: aws
  region: us-west-2
head_node:
  InstanceType: m5.large
worker_nodes:
  InstanceType: m5.large
min_workers: 0
max_workers: 5
idle_timeout: 15

- Develop & Deploy: Use Anyscale Workspaces (e.g., VSCode, Jupyter) to develop AI workloads and deploy jobs or services.

python

import ray

# Connect to the already running Ray cluster
ray.init(address="auto")

@ray.remote
def hello_world():
    return "Hello from Ray on Anyscale!"

result = ray.get(hello_world.remote())
print(result)

- Monitor and Optimize: Access built-in dashboards for job monitoring, profiling, and autoscaling management.

- Scale Production Workloads: Utilize spot instances, cost governance, and RayTurbo optimizations for production efficiency.

Community Support and Resources

- Official Documentation: https://docs.anyscale.com/

- GitHub (Ray): https://github.com/ray-project/ray

- YouTube Tutorials: Official Ray and Anyscale training sessions

- Community Forums: Ray community Slack and discussion boards

- Customer Support: Professional services and dedicated support for enterprise customers

9. Botpress

Botpress is an open-source platform for building conversational AI agents with advanced orchestration capabilities. It supports complex multi-turn dialogs, integration with large language models (LLMs), and backend system workflows. Botpress is ideal for enterprises building chatbots, voice assistants, or customer service automation requiring deep customization and multi-agent orchestration embedded in conversational flows.

Key Features of Botpress

- Multi-Turn Dialog Management: Supports sophisticated conversational flows with context tracking and dynamic responses.

- LLM Integration: Seamlessly connects with large language models to enhance natural language understanding and generation.

- Backend Workflow Orchestration: Embed AI workflows that interact with APIs, databases, and external systems during conversations.

- Rich Integration Ecosystem: Connects with messaging platforms (WhatsApp, Telegram), automation tools (Zapier), customer service platforms (Zendesk), and more.

- User-Friendly Botpress Studio: Intuitive visual interface for designing, managing, and scaling complex bots.

- Open-Source & Extensible: Flexible platform supported by an active developer community for customization and enhancements.

Enterprise Use Case: Customer Service Automation with Botpress

A large enterprise uses Botpress to automate customer support through chatbots deployed across multiple channels like WhatsApp and web chat. Customers interact with intelligent bots capable of managing multi-turn conversations, providing order status, processing refunds, and authenticating users. Complex backend workflows are orchestrated in the conversation flow to update CRM systems and escalate to human agents when necessary, improving efficiency and customer satisfaction.

Key Benefits:

- Enhanced customer engagement with natural, multi-turn interactions

- Seamless integration with existing business systems for end-to-end automation

- Scalable deployment across multiple messaging platforms and channels

- Empowered developers with visual tools and open-source flexibility

Pros

- Powerful and flexible conversational AI with multi-turn dialog support.

- Extensive ecosystem of integrations for business-critical platforms.

- Open-source platform with a strong developer community.

- Easy to host, scale, and customize with Botpress Studio.

Cons

- Requires development skills to customize advanced workflows.

- Initial setup may be complex for teams new to conversational AI.

- May need dedicated resources for maintaining multi-agent orchestration at scale.

- Some integrations require additional configuration or third-party licenses.

Getting Started with Botpress

1. Install Botpress

- Download Botpress from the official website or run via Docker:

bash

docker run -d --name botpress -p 3000:3000 botpress/server

2. Launch Botpress Studio

- Access Botpress Studio by navigating to http://localhost:3000 in your browser.

- Use the intuitive visual interface to create and manage conversational flows.

3. Integrate LLMs and APIs

- To connect a large language model or external API, you can create a custom action in Botpress.

Example: Custom action to call an LLM API

javascript

const axios = require('axios')

// The endpoint, request fields, and response shape are placeholders; adapt them to your LLM provider
async function callLLM(state, event, params) {
  const response = await axios.post('https://api.llmprovider.com/generate', {
    prompt: event.payload.text,
    max_tokens: 100
  }, {
    headers: { 'Authorization': 'Bearer YOUR_API_KEY' }
  })

  // Store the generated text so later steps in the flow can use it
  state.utterance = response.data.generated_text
  return state
}

return callLLM(state, event, params)

4. Deploy Bots

- Publish your bot to messaging platforms like WhatsApp, Telegram, or embed on websites using built-in connectors and channels configuration.

5. Monitor and Optimize

- Use Botpress analytics and testing tools via the Studio dashboard to analyze user interactions, test flows, and optimize conversation quality.

Community Support and Resources

- Botpress GitHub: Access source code, issues, and contributions at https://github.com/botpress

- Discord Community: Engage with both builders and developers for support and best practices.

- Official Documentation: Detailed guides at https://botpress.com/docs

- Tutorials & Webinars: Interactive learning resources for beginners to advanced users.

10. Prefect

Prefect is a cloud-native AI and data workflow orchestrator focusing on ease of use, scalability, and real-time observability. Prefect allows users to define dynamic workflows in Python with robust retry, failure handling, and scheduling features. Its modern UI and APIs support enterprise compliance and seamless integration with cloud data stacks, making it ideal for managing complex AI pipelines and data engineering tasks.

Key Features of Prefect

- Python-Native Orchestration: Write workflows as Python functions without needing special DSLs or configuration languages.

- Dynamic Workflows: Create workflows that adjust dynamically to runtime data and conditions.

- Robust State Management: Track task and workflow states (success, failure, retries) with clear failure reasons and recovery options.

- Hybrid Execution & Scalability: Run workflows locally, in the cloud, or across multiple environments with seamless scaling.

- Human-in-the-Loop: Pause workflows for manual intervention, approvals, or input, then resume.

- Modern UI & Observability: Real-time monitoring, logging, and debugging with an intuitive interface and automatic DAG visualization.

- CI/CD Integration: Manage workflows as code with version control and automated deployment pipelines.

- Caching and Mapping: Cache intermediate results and parallelize tasks for efficiency.

Enterprise Use Case: Scalable AI & Data Pipeline Orchestration with Prefect

A leading fintech company switched from Apache Airflow to Prefect to orchestrate their machine learning and data pipelines. Prefect’s flexibility enabled the team to rapidly deploy fraud detection models across multiple clouds while reducing infrastructure costs by over 70%. With built-in retry logic and observability, the company maintains high confidence in production workflows and can recover gracefully from task failures.

Key Benefits:

- Faster and more reliable AI model deployment

- Scalable across cloud environments with cost optimization

- Improved debugging and operational visibility

- Seamless integration with existing data tools and CI/CD pipelines

Pros

- Pythonic and highly flexible workflow definitions.

- Dynamic and stateful execution for real-time data-driven pipelines.

- Strong observability and troubleshooting capabilities.

- Hybrid deployment supporting local and cloud environments.

Cons

- Newer compared to some legacy tools; ecosystem is maturing.

- Requires familiarity with Python coding.

- UI may require customization for complex enterprise use cases.

- Some enterprise features require Prefect Cloud subscription.

Getting Started with Prefect

- Install Prefect: Use pip to install on your local machine.

bash

pip install prefect

- Write Your First Flow: Define workflows using the @flow and @task decorators in Python.

- Deploy: Use Prefect Cloud or self-hosted Prefect Server to manage and schedule flows.

- Monitor: Access the Prefect UI or CLI for real-time logs, metrics, and state tracking.

- Scale: Configure workers and work pools (which replace agents in current Prefect releases) to run flows in distributed or hybrid environments with autoscaling.

Community Support and Resources

- Official Documentation: https://docs.prefect.io/

- GitHub Repository: https://github.com/PrefectHQ/prefect

- Community Slack: Active user community for support and discussion.

- Tutorials and Case Studies: Available on Prefect’s website and YouTube channel

These tools represent a mix of open-source and enterprise options that cater to varied needs from data pipeline scheduling to autonomous multi-agent orchestration. Choosing the right AI orchestration platform depends on your technical stack, workflow complexity, and governance requirements.

Developer-Focused Comparison of Top 10 AI Orchestration Tools in 2025

The table below compares the 10 tools on the criteria most relevant to developers: language and SDK support, deployment options, and primary use case.

| Tool | Primary Language / SDKs | Deployment Options | Primary Use Case |
|---|---|---|---|
| Kubiya AI | Node.js, REST APIs | SaaS, Hybrid | DevOps automation, multi-agent |
| Domo | JavaScript APIs | SaaS | No-code BI & AI pipeline automation |
| Kubeflow | Python, YAML, CLI | Kubernetes, Cloud | Scalable machine learning workflows and lifecycle management |
| IBM watsonx Orchestrate | REST APIs | Cloud, Hybrid | Enterprise AI workflow automation |
| UiPath | Visual workflows, Python | Cloud, On-prem | RPA + AI integrated automation |
| Microsoft AutoGen | Python SDK | Cloud, Local | Collaborative multi-agent workflows |
| SuperAGI | Python + config files | Cloud, Local | Autonomous multi-agent orchestration |
| Anyscale (Ray) | Python SDK | Cloud-native | Scalable distributed AI workloads |
| Botpress | Node.js | Cloud, On-prem | Conversational AI orchestration |
| Prefect | Python | Cloud, Hybrid | Cloud-native data & ML pipelines |

Challenges in Adopting AI Orchestration Tools

Adopting AI orchestration platforms unlocks significant automation potential but poses practical challenges, including:

Integration Complexity

For instance, integrating an LLM-based assistant with an RPA tool like UiPath requires that the APIs between the language model and the bot workflows exchange data in agreed formats and handle errors gracefully. A typical pattern is to translate LLM outputs into structured JSON commands that trigger specific RPA actions.
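A minimal sketch of that translation step, using only the Python standard library. The action names, the required parameters, and the `parse_llm_command` helper are hypothetical; a real integration would forward the validated command to the RPA platform's API.

```python
import json

# Hypothetical whitelist of RPA actions and the parameters each one requires.
ALLOWED_ACTIONS = {
    "start_process": {"process_name"},
    "create_ticket": {"title", "priority"},
}

def parse_llm_command(raw_output: str) -> dict:
    """Validate raw LLM text and return a structured command, or raise ValueError."""
    try:
        command = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"LLM output is not valid JSON: {exc}") from exc
    if not isinstance(command, dict):
        raise ValueError("Expected a JSON object")

    action = command.get("action")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"Unknown action: {action!r}")

    params = command.get("params", {})
    if not isinstance(params, dict):
        raise ValueError("'params' must be a JSON object")
    missing = ALLOWED_ACTIONS[action] - set(params)
    if missing:
        raise ValueError(f"Missing parameters: {sorted(missing)}")

    return {"action": action, "params": params}

command = parse_llm_command(
    '{"action": "start_process", "params": {"process_name": "OrderFulfillment"}}'
)
print(command)
```

Rejecting unknown actions and incomplete parameters before anything reaches the bot keeps malformed or unexpected LLM output from triggering an automation.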

Skill Requirements

Implementing orchestration workflows often requires writing Python DAGs in Apache Airflow or managing Kubernetes clusters for platforms like Kubiya. For example, an Airflow developer should be familiar with Python scripting, task dependencies, and operators to define workflows efficiently.

Security and Compliance

Many platforms support role-based access control (RBAC). For example, in Kubiya, RBAC restricts workflow triggers to authorized users, while encryption of data in transit and at rest ensures compliance. Sample RBAC policy snippet (conceptual):

text

roles:
  - name: devops-engineer
    permissions:
      - trigger_workflow: true
      - view_logs: true

Monitoring and Troubleshooting

Prefect provides a UI displaying real-time workflow status. Alerts can be configured to notify teams on failures, enabling rapid debugging. For example, an Airflow DAG can include alert emails on task failure:

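python

# Airflow 2.x import path
from airflow.operators.email import EmailOperator

# Define inside a DAG, downstream of the tasks to watch;
# trigger_rule makes it run only when an upstream task fails
alert = EmailOperator(
    task_id='send_failure_email',
    to='devops-team@example.com',
    subject='Airflow Task Failed',
    html_content='Please check the logs for task failure details.',
    trigger_rule='one_failed',
)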

Latency and Reliability

Retry policies ensure transient errors do not break workflows. For example, Airflow and Prefect support automatic retries with fixed or exponential backoff intervals, preserving workflow reliability.
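To make the backoff behavior concrete, here is a generic sketch of a retry decorator with exponential backoff in plain Python. It is not the Airflow or Prefect API (both expose retries as built-in task settings); the `retry` decorator, its parameters, and the `flaky_inference` function are hypothetical names for illustration.

```python
import time
from functools import wraps

def retry(max_attempts=3, base_delay=1.0, factor=2.0, sleep=time.sleep):
    """Retry the wrapped function, multiplying the wait by `factor` after each failure."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            delay = base_delay
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the last error
                    sleep(delay)
                    delay *= factor
        return wrapper
    return decorator

calls = {"count": 0}
waits = []  # records the requested waits instead of actually sleeping

@retry(max_attempts=4, base_delay=0.5, sleep=waits.append)
def flaky_inference():
    # Simulates a call that fails twice with a transient error, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"

result = flaky_inference()
print(result, waits)  # ok [0.5, 1.0]
```

Passing `sleep` as a parameter is a design choice that makes the wait schedule observable in tests; in production the default `time.sleep` applies.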

Human Oversight

Workflows often include manual approval checkpoints. In Airflow, this can be done via a sensor that waits for a human to mark a task complete before continuing:

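python

from airflow.sensors.external_task import ExternalTaskSensor

# Define inside a DAG; blocks until approval_task in approval_dag is marked successful
approval = ExternalTaskSensor(
    task_id='wait_for_approval',
    external_dag_id='approval_dag',
    external_task_id='approval_task',
    poke_interval=60,  # check once a minute
)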

Best practices for AI orchestration

If you’re considering AI orchestration in your organization, here are some best practices to guide you to success:

1. Start with a pilot program

Begin with a small test project that integrates a few AI systems or just focuses on one specific workflow. This allows you to manage the complexities of AI infrastructure and understand its nuances on a smaller scale before expanding to more complex workflows.

Kubiya AI enables rapid prototyping and deployment of AI workflows through its modular multi-agent platform and natural language commands, making it ideal for small-scale pilots that can expand seamlessly.

2. Focus on data quality and access

AI systems require access to clean, well-organized, and high-quality data to function effectively, especially when it comes to decision-making, interoperability, and real-time workflows. Data scientists can help you validate data quality, handle pre-processing, and optimize data pipelines.

3. Design modular AI workflows

Organizations can simplify the management of AI workflows and orchestration layers with a modular approach to system architecture. By designing AI workflows as a series of modular building blocks, individual components can be modified or replaced without disrupting the entire system.

Modular architecture is also reusable, helping to promote efficiency and consistency across future projects. Each component is decomposed into small, loosely coupled services that can be developed, deployed, or scaled independently, offering greater value and flexibility to an organization.
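As a small illustration of the modular approach, the sketch below treats each workflow stage as an independent function with the same signature, so one stage can be swapped without touching the others. The stage names, the record fields, and the keyword-based "classifier" are hypothetical placeholders for real models and services.

```python
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]

def clean(record: Dict) -> Dict:
    # Normalize the raw text field.
    return {**record, "text": record["text"].strip().lower()}

def keyword_classifier(record: Dict) -> Dict:
    # Placeholder model: flags records that mention "refund".
    label = "refund" if "refund" in record["text"] else "other"
    return {**record, "label": label}

def always_other(record: Dict) -> Dict:
    # Alternative classifier used to show that a block can be replaced.
    return {**record, "label": "other"}

def route(record: Dict) -> Dict:
    # Pick a destination queue based on the label set by the classifier.
    queue = "billing" if record["label"] == "refund" else "general"
    return {**record, "queue": queue}

def run_pipeline(steps: List[Step], record: Dict) -> Dict:
    # Apply each step in order, passing the record along.
    for step in steps:
        record = step(record)
    return record

ticket = {"text": "  I want a REFUND  "}
result = run_pipeline([clean, keyword_classifier, route], ticket)
swapped = run_pipeline([clean, always_other, route], ticket)
print(result["queue"], swapped["queue"])  # billing general
```

Because every step only depends on the shared record shape, replacing the classifier changes the routing outcome without any edits to `clean` or `route`.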

4. Invest in AI skills

AI orchestration requires expertise with machine learning, natural language processing, data science, software engineering, and complex integrations. Equip your team with the skills required to tackle AI orchestration and infrastructure effectively. This involves ongoing training and AI education to keep pace with evolving best practices and AI technologies.

5. Establish governance and security

Establish guidelines for data privacy, security, and responsible AI usage that are compliant with industry regulations. To mitigate risk, design AI workflows with privacy, data, and compliance in mind. Also implement robust security measures within data processes such as secure business APIs, data encryption, and regular security audits to protect against vulnerabilities.

With built-in role-based access control and secure integrations, Kubiya ensures enterprise-grade governance and compliance, protecting sensitive data while maintaining flexible, context-aware AI automation.

6. Monitor and optimize

Continuously monitor and optimize your orchestrated AI systems and workflows to ensure they deliver business value. Track the efficiency and performance of data processes in analytics, and gather user feedback to identify areas for improvement as part of a data-driven, iterative approach to success.

Conclusion

AI orchestration tools have become fundamental for businesses aiming to automate, coordinate, and manage increasingly complex AI workflows. In 2025, organizations face the challenge of integrating multiple AI models, agents, data sources, and IT systems into seamless processes that are efficient, reliable, and compliant.

The tools outlined here provide a mix of open-source and enterprise solutions tailored to different needs—from developer-centric data pipeline orchestration to natural language-driven multi-agent automation for DevOps and business users. Selecting the right platform depends on your technical environment, workflow complexity, and governance policies.

Embracing AI orchestration is no longer optional; it’s a strategic imperative to unlock the true potential of AI-driven innovation and productivity. Whether you choose platforms like Kubiya AI for secure enterprise automation or leverage the ecosystem strengths of Apache Airflow, IBM watsonx, or UiPath, investing in a robust orchestration layer will empower your AI initiatives to scale and succeed with confidence.

FAQs


Q1. What is an AI orchestration tool?

AI orchestration tools automate the management, coordination, and execution of AI workflows spanning multiple models, systems, and services, optimizing throughput, reliability, and governance.

Q2. How does AI orchestration differ from MLOps?

While MLOps focuses primarily on managing machine learning model development and deployment pipelines, AI orchestration covers broader coordination including rule engines, RPA, LLM-based agents, and end-to-end AI workflows.

Q3. Are these AI orchestration platforms only for large enterprises?

No. Many platforms like Apache Airflow, Prefect, and SuperAGI offer open-source versions accessible to startups and mid-sized companies. Larger enterprises typically invest in feature-rich commercial platforms offering governance, support, and hybrid deployment.

Q4. How should I choose the right AI orchestration tool?

Consider factors like integration with your existing stack, ease of use, support for agentic workflows, scalability, security features, and cost. Evaluate use case fit and conduct pilot projects to assess platform suitability.

About the author

Amit Eyal Govrin

Amit oversaw strategic DevOps partnerships at AWS, where he repeatedly encountered industry-leading DevOps companies struggling with similar pain points: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.