Top 5 Best AIOps Platforms in 2025

Amit Eyal Govrin

TL;DR

- AI Ops platforms are transforming DevOps with intelligent automation, scoped memory, and contextual workflows.

- Traditional automation lacks adaptability, while AI Ops tools dynamically respond to real-time changes and reduce manual overhead.

- Kubiya leads with Slack-native, secure DevOps automation and memory retention across workflows.

- The top AI Ops platforms are Kubiya, BigPanda, PagerDuty, CrewAI, and Harness AI, each solving a different layer of DevOps complexity.

- Choose the right AI Ops platform based on integration fit, RBAC, memory features, and developer experience.

AI Ops has quietly transformed from a buzzword into a practical layer within the DevOps ecosystem. According to Gartner, by 2026, 40% of DevOps teams will augment their toolchains with AI-driven operational insights, up from less than 10% in 2022. Once considered speculative or academic, AI-powered tooling is now addressing real friction points for DevOps and SRE teams: incident correlation, self-service provisioning, and change risk mitigation.

This article dives deep into the evolving landscape of AI Ops, breaks down the most relevant tools across categories, and examines how Kubiya redefines interaction with DevOps systems, not by replacing them, but by making them context-aware, memory-scoped, and finally, human-friendly.

What is AI Ops in the Context of DevOps Workflows?

AI Ops, in a DevOps setting, isn’t just about using machine learning to analyze logs or surface anomalies. It represents a shift in how automation is designed and consumed, where traditionally brittle, static scripts are now being replaced or enhanced by adaptive systems that learn from prior events, track contextual changes, and react accordingly.

In real-world DevOps workflows, AI Ops plays a supporting but high-leverage role. It reduces alert noise, spots change risks before production outages, streamlines postmortem analysis, and enables self-service workflows for developers, all while learning over time. This isn’t a dashboard you stare at; it’s a set of capabilities that plug into your pipelines, tools, and team chat systems, helping you get from problem to resolution faster and with less cognitive overhead.

It’s not about building a new stack. It’s about augmenting what you already have (Terraform modules, Kubernetes clusters, GitHub pipelines, Grafana dashboards) with intelligence that understands them and talks to them, preferably in plain English.

Key Benefits of AIOps for DevOps Teams

AI Ops isn’t about adding more layers of tooling; it’s about reducing complexity by making existing layers smarter. For DevOps teams managing constantly changing systems, AI Ops offers tactical advantages that go beyond automation.

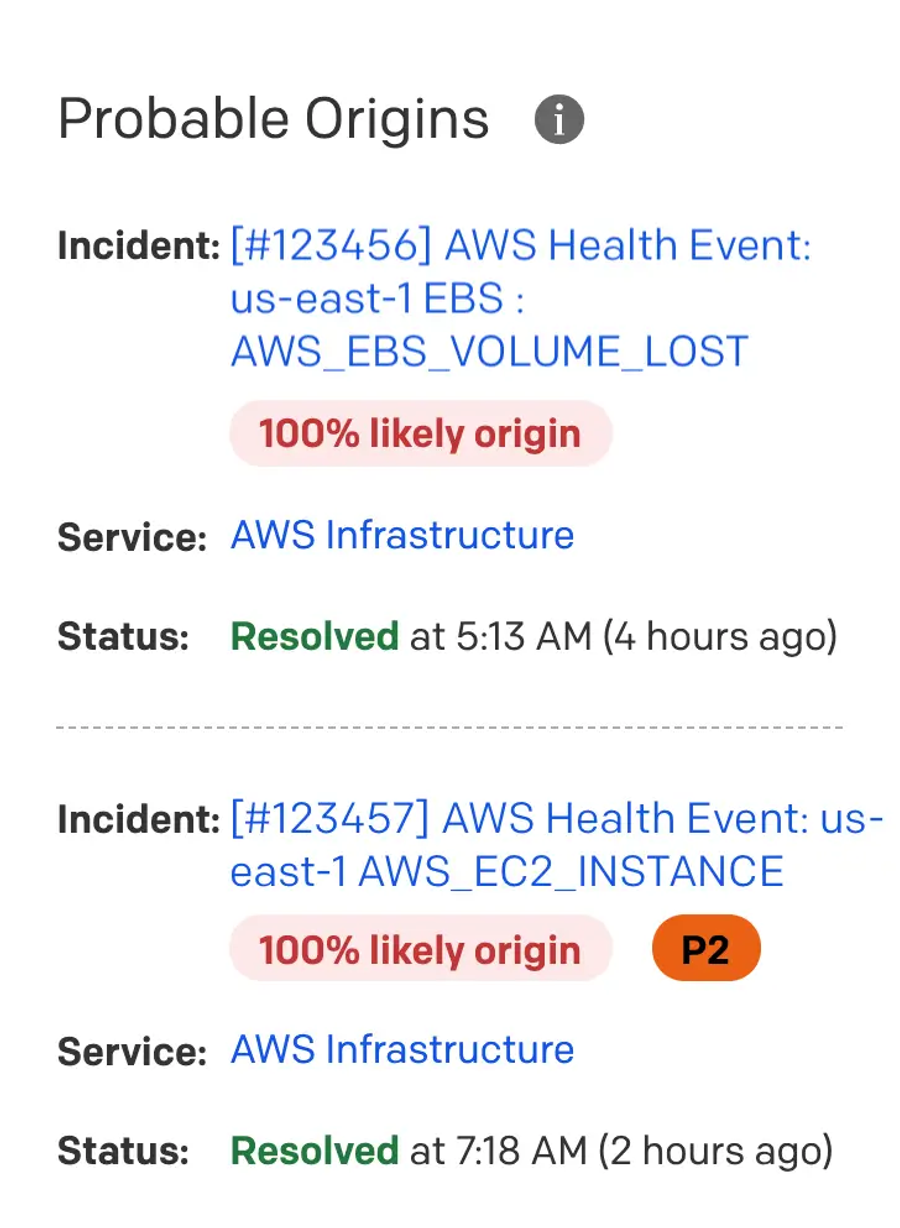

1. Reduced Alert Noise and Faster Incident Triage

AI Ops tools use anomaly detection, event correlation, and topology awareness to suppress noisy alerts and surface only critical incidents. This helps on-call engineers avoid alert fatigue and focus on the root cause instead of being overwhelmed by symptom-level signals.
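As a rough illustration of the event-correlation idea, the sketch below groups alerts that hit the same service within a short time window into a single incident. The `Alert` fields, the five-minute window, and the `correlate` function are assumptions made for this example; real platforms also use topology and learned patterns.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    service: str      # hypothetical field: the service that raised the alert
    timestamp: float  # seconds since some reference point
    message: str


@dataclass
class Incident:
    service: str
    alerts: list = field(default_factory=list)


def correlate(alerts, window_seconds=300):
    """Group alerts for one service that arrive within window_seconds of the previous alert."""
    incidents = []
    open_incident = {}  # service -> most recent Incident for that service
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = open_incident.get(alert.service)
        if current and alert.timestamp - current.alerts[-1].timestamp <= window_seconds:
            # Close enough in time: treat it as another symptom of the same incident.
            current.alerts.append(alert)
        else:
            current = Incident(service=alert.service, alerts=[alert])
            incidents.append(current)
            open_incident[alert.service] = current
    return incidents


alerts = [
    Alert("checkout", 0, "latency high"),
    Alert("checkout", 60, "5xx rate high"),
    Alert("search", 90, "pod restarted"),
    Alert("checkout", 1000, "latency high"),
]
incidents = correlate(alerts)
print(len(alerts), "alerts grouped into", len(incidents), "incidents")
```

Here the two early `checkout` alerts collapse into one incident, so the on-call engineer sees three items instead of four.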

2. Contextual Automation with Memory

Tools like Kubiya retain execution context, such as previously used Terraform modules, default cloud regions, or environment scopes, so engineers don’t need to repeat inputs or hunt down configurations. This shortens cycle times for tasks like provisioning, restarts, or scaling.

3. Developer Self-Service Without Platform Bottlenecks

AI agents embedded in platforms like Slack or Teams enable developers to request and execute common DevOps workflows, like spinning up environments or checking deployment status, without filing tickets or waiting on platform teams. This increases autonomy without compromising governance.

Evaluation Criteria for Choosing AI Ops Platforms

Not all AI Ops platforms serve the same purpose, and selecting the right one requires evaluating how it fits within your existing infrastructure, team maturity, and automation patterns.

1. Define the Operational Problem You’re Solving

Choose tools based on whether you're trying to reduce alert noise, improve deployment safety, automate workflows, or analyze incidents faster. Don’t just “adopt AI Ops”; apply it where manual overhead is highest.

2. Check Compatibility with Your Existing Tooling Stack

Evaluate how well the tool integrates with Terraform, Kubernetes, CI/CD pipelines (GitHub Actions, Jenkins, Argo), observability platforms (Datadog, Prometheus), and chat tools like Slack. Tools that plug in natively are easier to adopt and scale.

3. Enforce Security, RBAC, and Approval Controls

Any AI Ops platform that interacts with live infrastructure must support strict role-based access controls, approvals for destructive actions, and audit logging. Kubiya, for example, routes sensitive requests for approval and logs all actions within the conversation thread.

4. Evaluate Context Retention and Scoped Memory

Tools like Kubiya stand out by maintaining scoped memory, recalling previous inputs, project histories, and workflow preferences. This creates smarter interactions over time, unlike stateless tools that forget everything between sessions.

5. Assess Developer Experience and Adoption Friction

The best AI Ops tools are usable by both SREs and developers without needing custom scripts or new UIs. Look for platforms that enable interaction in tools your team already uses (e.g., Slack), not ones that add dashboards you’ll ignore.

Best AI Ops Platforms Every DevOps Engineer Should Evaluate in 2025

The AI Ops landscape is broad, but not all tools are built to solve the same set of problems. Some target incident intelligence, others focus on deployment risk, and a few are designed to act as intelligent assistants embedded directly into your workflow. Below are five standout tools worth evaluating, each addressing a distinct category of operational complexity.

1. Kubiya – Conversational Workflow Automation for DevOps

Overview

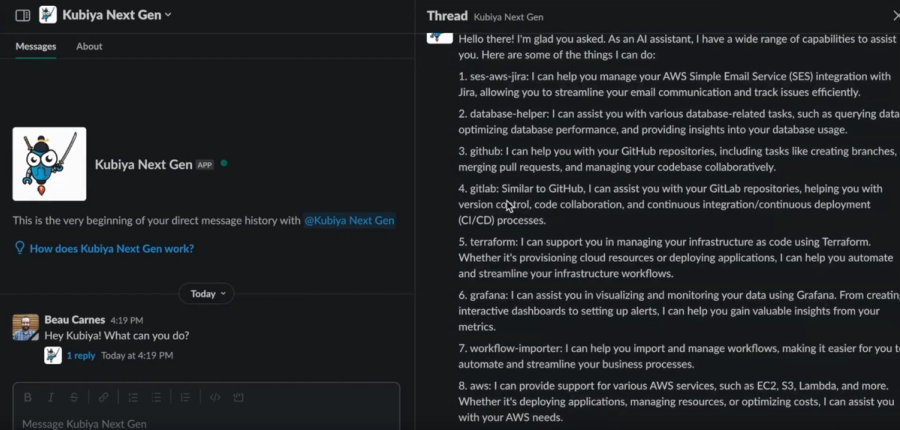

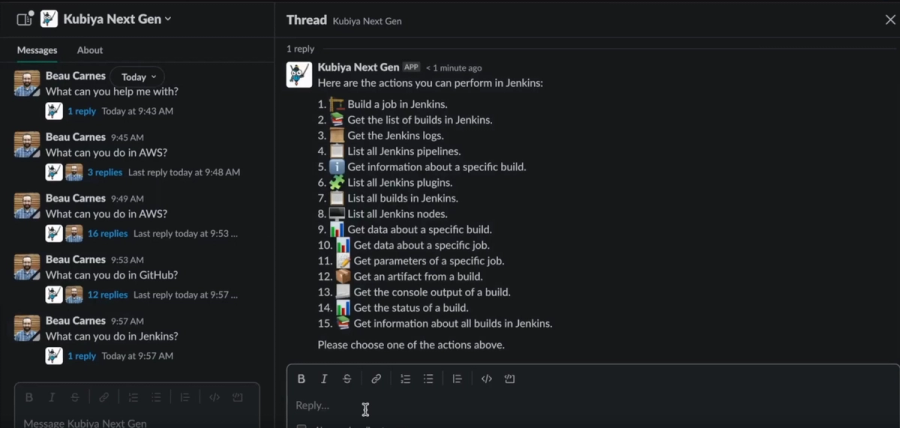

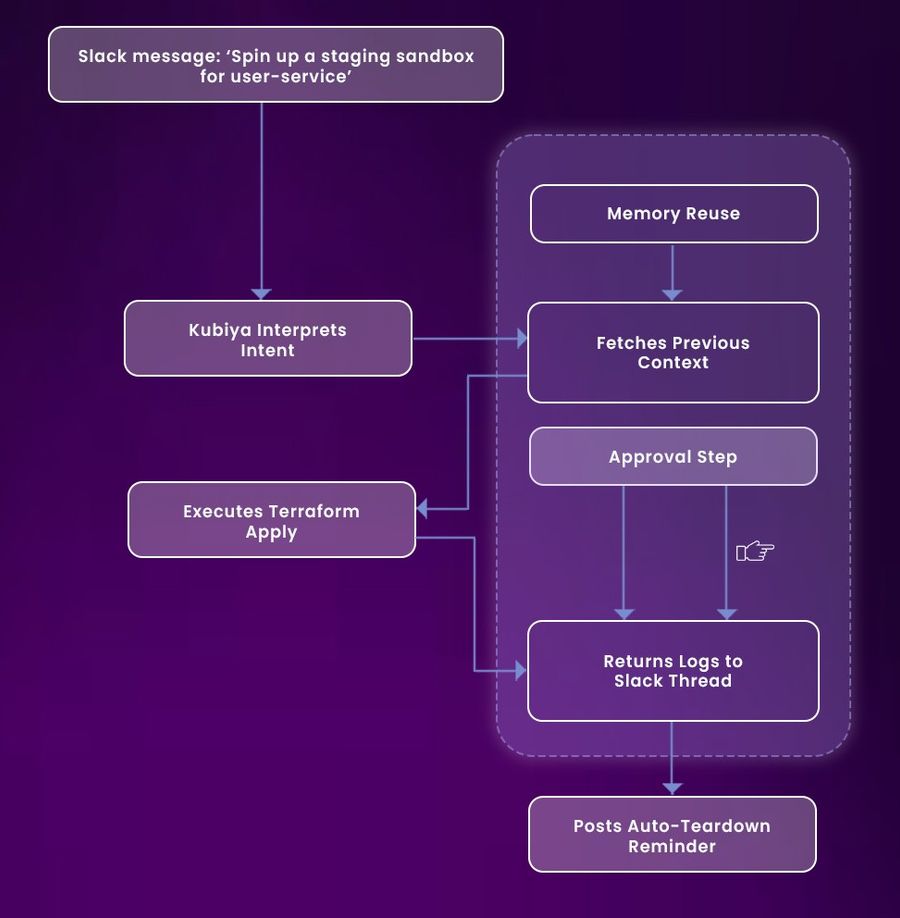

Kubiya shifts AI Ops from dashboards and runbooks into Slack itself. Designed for secure, context-aware DevOps automation, it allows engineers to interact with infrastructure using natural language. Kubiya goes beyond chatbots: it retains scoped memory across users, projects, and workspaces, allowing workflows to evolve over time and reuse past context intelligently.

Key Features

- Conversational interface for provisioning, metrics querying, deployment, and approvals

- Scoped memory for users, channels, and projects, so prompts like “create a sandbox like last week” just work

- Secure, role-aware execution with built-in RBAC and audit trails

- Integrates with Terraform, GitHub Actions, Jenkins, AWS, Datadog, and more

Best For

DevOps and platform teams who want secure, Slack-native automation for complex infra tasks, with human-in-the-loop controls and memory-driven workflows.

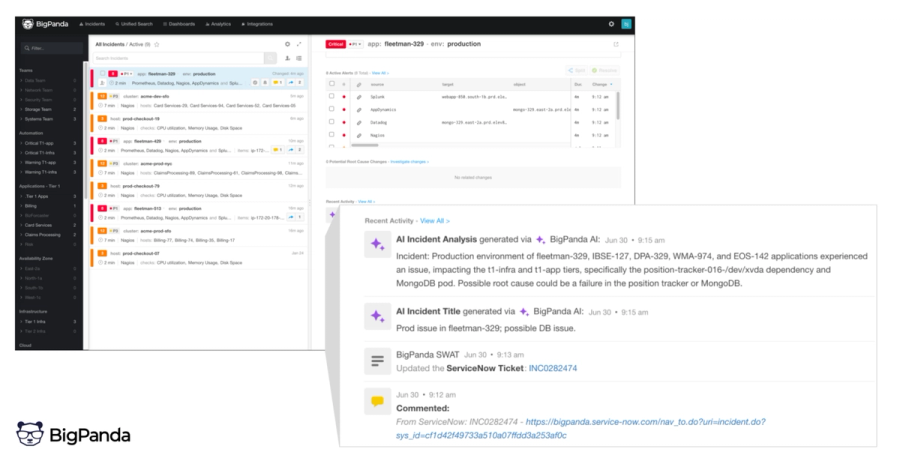

2. BigPanda – Event Correlation and Incident Intelligence

Overview

BigPanda tackles alert fatigue by turning high-volume, noisy telemetry into actionable incidents. It applies topology awareness and machine learning to correlate related alerts, creating unified incident timelines that help responders identify root causes faster.

Key Features

- Real-time correlation across monitoring, logging, and change data

- Infrastructure-aware alert grouping and incident mapping

- Signal-to-noise optimization using historical context and event patterns

- Integrations with Splunk, Datadog, AppDynamics, and more

Best For

Enterprises with distributed systems and fragmented observability stacks looking to reduce MTTR and simplify on-call incident response.

3. PagerDuty AIOps – Noise Suppression and Anomaly Filtering

Overview

PagerDuty AIOps extends traditional incident management by adding machine learning for alert filtering, deduplication, and intelligent triage. It suppresses low-signal noise while ensuring meaningful alerts trigger complete response workflows with SLA enforcement.

Key Features

- Alert deduplication and automatic suppression of known-noise patterns

- Event intelligence based on historical incident trends

- Auto-resolution of low-priority incidents

- Deep integration with PagerDuty’s incident lifecycle tools

Best For

Teams already invested in PagerDuty for on-call management that want to reduce alert noise without losing the alerts that matter.
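Alert deduplication is often built on a fingerprint: a key derived from the fields that make two alerts "the same". The sketch below is a generic illustration of that technique, not PagerDuty's implementation; the `source`, `service`, and `check` fields are assumed for the example.

```python
import hashlib
from collections import Counter


def fingerprint(alert):
    """Build a stable key from the fields that identify 'the same' alert (assumed fields)."""
    raw = f"{alert['source']}|{alert['service']}|{alert['check']}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def deduplicate(alerts):
    """Keep the first alert per fingerprint and record how many repeats were folded into it."""
    counts = Counter()
    unique = {}
    for alert in alerts:
        key = fingerprint(alert)
        counts[key] += 1
        unique.setdefault(key, alert)
    return [dict(alert, occurrences=counts[key]) for key, alert in unique.items()]


raw_alerts = [
    {"source": "prometheus", "service": "api", "check": "cpu_high"},
    {"source": "prometheus", "service": "api", "check": "cpu_high"},
    {"source": "datadog", "service": "db", "check": "disk_full"},
]
deduped = deduplicate(raw_alerts)
for item in deduped:
    print(item["service"], item["check"], item["occurrences"])
```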

4. CrewAI – Multi-Agent Orchestration Framework

Overview

CrewAI is a self-hosted agent orchestration framework that allows teams to build and run coordinated agent systems across infrastructure tasks. Unlike SaaS-based options, CrewAI gives full control over how agents are composed, how memory is shared, and how workflows are executed.

Key Features

- Modular, multi-agent architecture with collaboration logic

- Code-first setup using Python SDKs

- Support for long-running agents and persistent context

- Extensible with custom LLM integrations or domain-specific agents

Best For

Advanced teams that need custom AI agent logic, prefer open frameworks, and operate in compliance-heavy or air-gapped environments.

5. Harness AI Ops – Deployment Verification and Change Risk Management

Overview

Harness AI Ops embeds machine learning directly into the CI/CD process. It analyzes logs, metrics, and past deployments in real-time to assess whether a rollout is safe to proceed, offering automated rollback or gating when anomalies are detected.

Key Features

- Real-time deploy risk scoring using observability data

- Auto-verification of service health during rollout

- Integration with monitoring tools and Harness pipelines

- Automated rollback or approval workflows on anomaly detection

Best For

DevOps and release engineering teams practicing frequent deployments who need AI-powered safety nets to prevent regressions and maintain availability.
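To make deployment verification concrete, here is a minimal sketch of a rollout gate that compares a canary's error rate against the baseline. It is a simplified stand-in for ML-based risk scoring, not how Harness computes risk; the `verify_rollout` function, the 1.5x ratio, and the minimum sample count are all assumptions.

```python
from statistics import mean


def verify_rollout(baseline_error_rates, canary_error_rates, max_ratio=1.5, min_samples=5):
    """Return 'wait', 'proceed', or 'rollback' based on canary vs. baseline error rates."""
    if len(canary_error_rates) < min_samples:
        # Not enough data yet to judge the new version.
        return "wait"
    baseline = mean(baseline_error_rates)
    canary = mean(canary_error_rates)
    # Floor the threshold so a near-zero baseline does not trigger rollbacks on noise.
    allowed = max(baseline * max_ratio, 0.001)
    return "rollback" if canary > allowed else "proceed"


print(verify_rollout([0.01] * 5, [0.01] * 5))
print(verify_rollout([0.01] * 5, [0.05] * 5))
print(verify_rollout([0.01] * 5, [0.05]))
```

A pipeline would call a check like this at each rollout stage and halt or revert when it returns `rollback`.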

Top AIOps Platforms Comparison

| Tool | Core Focus | Interface Type | Context Memory | Hosting Model | Best Fit For |
|---|---|---|---|---|---|
| Kubiya | Conversational DevOps automation | Slack (ChatOps) | Scoped | SaaS | DevOps teams automating infra through chat |
| BigPanda | Incident correlation & alert intelligence | Web dashboard | ❌ | SaaS | Enterprises needing cross-tool alert correlation |
| PagerDuty AIOps | Alert noise suppression & triage | Web + API integrations | ❌ | SaaS | On-call teams using PagerDuty for response |
| CrewAI | Multi-agent orchestration framework | Code-first (Python SDK) | Agent-level | Self-hosted | Engineering teams building custom AI pipelines |
| Harness AI Ops | CI/CD deploy verification & risk analysis | Web dashboard | ❌ | SaaS | DevOps teams needing safe continuous delivery |

Kubiya vs Other AI Ops Tools: Where It Excels

Kubiya isn’t trying to compete with alerting systems or observability dashboards. Instead, it focuses on what most AI Ops tools ignore: how DevOps teams interact with their systems daily. Whether it’s creating a sandbox, querying logs, or triggering a rollback, Kubiya turns these actions into natural language interactions with RBAC, memory, and audit trails.

Unlike CrewAI, which requires extensive self-hosting, Kubiya offers a managed SaaS interface that’s fast to deploy and easy to adopt across teams. Compared to Harness, which excels in CI/CD verification, Kubiya works across a broader set of workflows: Terraform, K8s, GitHub, Datadog, and more.

Its conversational UX, memory scoping, and multi-tool integrations make it less of a replacement and more of an AI layer over your existing DevOps stack.

Real-World Use Cases and DevOps Workflows With Kubiya

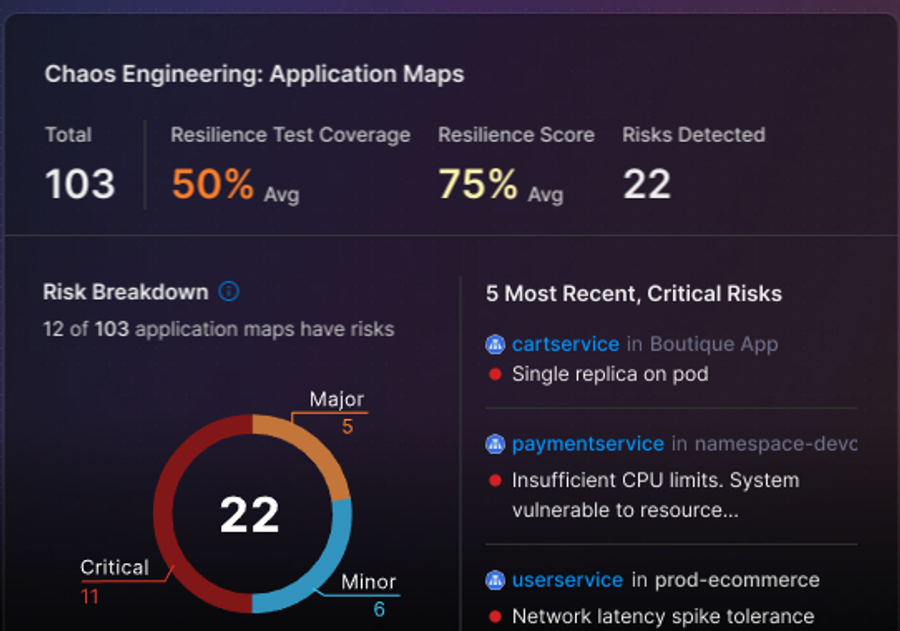

One of the most powerful demonstrations of AI Ops in practice is how Kubiya Composer automates critical vulnerability remediation, bridging security and DevOps without the friction of traditional ticket-based workflows.

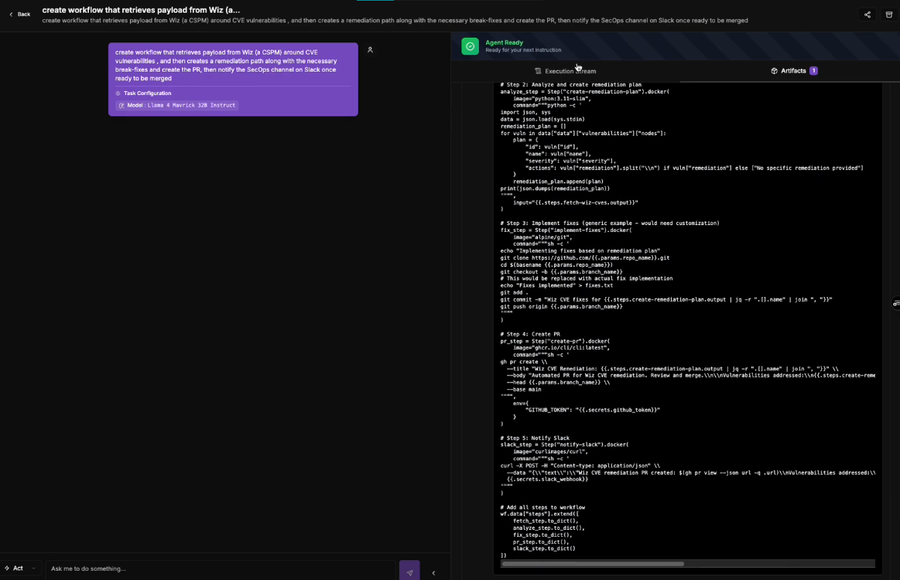

Let’s consider a real-world challenge: CVEs detected by a security scanner like Wiz are often pushed into Jira, where they can linger for days or weeks. Meanwhile, your production systems remain exposed. Manually triaging these issues across multiple tools (Wiz, Jira, GitHub, and Kubernetes) is slow, error-prone, and depends on too many human handoffs. This is where Kubiya Composer completely reshapes the workflow.

In the demo scenario, Wiz detects a critical CVE, for example an exposed Log4j vulnerability in a container image. As soon as it's identified, Kubiya ingests the alert, maps it to its contextual graph, and determines the impacted systems based on real runtime topology. Then, instead of waiting for a security engineer to manually triage the finding, Kubiya opens a secure Slack thread, notifying the relevant team with a proposed remediation plan.
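The "determine the impacted systems" step can be pictured as a lookup from a vulnerable image to the workloads that run it. The sketch below is a deliberately simple assumption of how such a mapping might work; the workload records, the `ENV_PRIORITY` ordering, and the registry names are invented for illustration and do not describe Kubiya's contextual graph.

```python
# Hypothetical ordering: production findings are surfaced first.
ENV_PRIORITY = {"production": 0, "staging": 1, "dev": 2}


def find_impacted_workloads(vulnerable_image, workloads):
    """Return workloads running the vulnerable image, most critical environment first."""
    impacted = [w for w in workloads if vulnerable_image in w["images"]]
    return sorted(impacted, key=lambda w: ENV_PRIORITY.get(w["environment"], 99))


workloads = [
    {"name": "billing-dev", "environment": "dev",
     "images": ["registry.example.com/billing:1.4.2"]},
    {"name": "search", "environment": "production",
     "images": ["registry.example.com/search:2.0.0"]},
    {"name": "billing", "environment": "production",
     "images": ["registry.example.com/billing:1.4.2", "registry.example.com/sidecar:0.9"]},
]

impacted = find_impacted_workloads("registry.example.com/billing:1.4.2", workloads)
print([w["name"] for w in impacted])
```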

From Slack, a developer or DevSecOps engineer can interact directly with Kubiya. They can approve or adjust the remediation steps, such as updating the affected image version or patching the container base image. Kubiya then orchestrates the remediation through GitHub (opening a PR), runs CI pipelines to verify the fix, applies Kubernetes rollout logic, and finally resolves the original Jira ticket, all while logging every step in an auditable thread.

What's most compelling here is how Kubiya maintains SOC2-grade audit trails and operates under zero-trust execution principles. No action is performed blindly: each step is tied to policy and can be gated by approval when needed. And because it’s memory-scoped, Kubiya knows which versions have previously caused regressions and which environments require additional review.

In short, what used to take days (detecting a CVE, writing a Jira ticket, tracking down the owner, building a patch, and pushing a fix) is now compressed into a few minutes of safe, AI-led orchestration. This isn’t just “chat with DevOps.” It’s real remediation, end-to-end, triggered by real vulnerabilities, with all the compliance and traceability controls built in.

This is the kind of workflow shift that defines modern AI Ops: not theoretical bots, but production-grade AI agents that plug into existing tools and accelerate secure resolution without sacrificing control.

Setting Up Kubiya in Your Stack

Unlike traditional automation tools that require scripting or GUI-based workflows, Kubiya is designed to plug directly into the place where your DevOps conversations already happen: Slack. It acts as a contextual assistant that not only understands infrastructure but remembers how you work.

1. Install Kubiya in Slack

The first step is connecting Kubiya to your Slack workspace. This creates a secure, scoped environment where users can interact with Kubiya using natural language. Once installed, Kubiya appears as a bot but behaves more like a DevOps teammate, responding to prompts, retrieving metrics, and executing real actions.

To install:

- Visit the Kubiya dashboard and initiate the Slack app integration

- Assign appropriate scopes and permissions (admin-level access required for workflow execution)

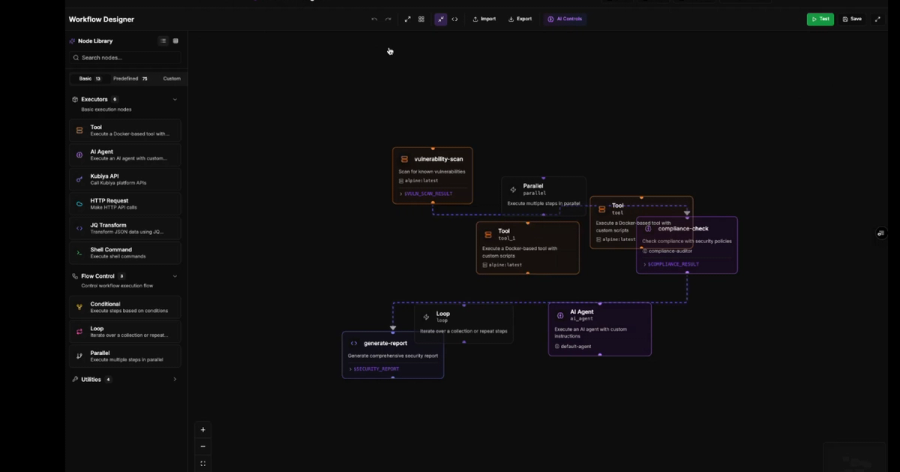

2. Configure Your Action Runners

Kubiya relies on Action Runners: predefined or custom YAML-based workflows that describe what Kubiya can do. These can wrap around:

- Terraform modules (e.g., provision an S3 bucket)

- GitHub workflows (e.g., trigger CI jobs)

- Bash or Python scripts (e.g., restart a service)

- Kubernetes manifests (e.g., scale a pod)

You define these workflows with input parameters, RBAC requirements, and descriptions. Once defined, they can be invoked conversationally like:

“Provision a dev sandbox with 2 replicas and latest app image”
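The article does not show the actual Action Runner schema, so the sketch below only models the idea: an action carries a description, typed inputs, and the roles allowed to run it, and a request is validated before execution. The `ActionDefinition` class and its field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ActionDefinition:
    name: str
    description: str
    required_inputs: dict  # input name -> expected Python type (hypothetical shape)
    allowed_roles: set = field(default_factory=set)

    def validate(self, inputs, role):
        """Return a list of problems; an empty list means the request may run."""
        problems = []
        if role not in self.allowed_roles:
            problems.append(f"role '{role}' may not run '{self.name}'")
        for key, expected_type in self.required_inputs.items():
            if key not in inputs:
                problems.append(f"missing input '{key}'")
            elif not isinstance(inputs[key], expected_type):
                problems.append(f"input '{key}' should be {expected_type.__name__}")
        return problems


provision_sandbox = ActionDefinition(
    name="provision-sandbox",
    description="Provision a dev sandbox for an application",
    required_inputs={"replicas": int, "image_tag": str},
    allowed_roles={"developer", "sre"},
)

ok = provision_sandbox.validate({"replicas": 2, "image_tag": "latest"}, role="developer")
bad = provision_sandbox.validate({"replicas": "2"}, role="guest")
print(ok)
print(bad)
```

In this model, the conversational layer's job is to turn a prompt into the `inputs` dictionary; the definition then decides whether the request is complete and permitted.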

3. Enable Scoped Memory

Kubiya supports persistent memory across:

- Users (remembers your default AWS region)

- Channels (remembers team-specific project context)

- Projects/workspaces (remembers recent actions, module usage, variables)

This allows for prompts like:

“Re-run the deployment from last Thursday” or “Create a sandbox just like the one we spun up for marketing”

You don’t need to pass config files or flags; Kubiya retrieves and reuses known context.
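One way to think about scoped memory is as a layered lookup: check the most specific scope first and fall back to broader ones. The sketch below assumes a user-then-channel-then-project precedence; that ordering and the `ScopedMemory` class are illustrative assumptions, not a description of Kubiya's internals.

```python
class ScopedMemory:
    """Resolve a remembered value by checking the most specific scope first."""

    SCOPE_ORDER = ("user", "channel", "project")  # assumed precedence

    def __init__(self):
        self._store = {scope: {} for scope in self.SCOPE_ORDER}

    def remember(self, scope, scope_id, key, value):
        self._store[scope].setdefault(scope_id, {})[key] = value

    def recall(self, key, user=None, channel=None, project=None, default=None):
        ids = {"user": user, "channel": channel, "project": project}
        for scope in self.SCOPE_ORDER:
            values = self._store[scope].get(ids[scope], {})
            if key in values:
                return values[key]
        return default


memory = ScopedMemory()
memory.remember("project", "web", "aws_region", "us-east-1")
memory.remember("user", "dana", "aws_region", "eu-west-1")

# Dana has a personal default, so it wins over the project setting.
print(memory.recall("aws_region", user="dana", project="web"))
# Lee has no personal default, so the project value is used.
print(memory.recall("aws_region", user="lee", project="web"))
```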

4. Set Up Secure RBAC and Approvals

Kubiya supports role-based access control, ensuring that destructive actions such as deleting a production pod require one of the following:

- Inline approval from a designated approver

- A preconfigured policy backed by GitHub or Slack groups

It logs every action, tracks approvals, and embeds status updates directly into the conversation for full traceability.
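The approval-and-audit pattern described above can be sketched in a few lines. This is a generic model under stated assumptions (the `ApprovalGate` class, the action names, and the rule that users cannot approve their own requests are all invented here), not Kubiya's policy engine.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovalGate:
    destructive_actions: set
    approvers: set
    audit_log: list = field(default_factory=list)

    def authorize(self, user, action, approved_by=None):
        """Allow safe actions; destructive ones need a designated approver other than the requester."""
        if action not in self.destructive_actions:
            decision = "allowed"
        elif approved_by in self.approvers and approved_by != user:
            decision = "allowed"
        else:
            decision = "pending_approval"
        # Every request is recorded, whether or not it was allowed.
        self.audit_log.append(
            {"user": user, "action": action, "approved_by": approved_by, "decision": decision}
        )
        return decision


gate = ApprovalGate(destructive_actions={"delete-production-pod"}, approvers={"sre-lead"})
print(gate.authorize("dana", "check-deployment-status"))
print(gate.authorize("dana", "delete-production-pod"))
print(gate.authorize("dana", "delete-production-pod", approved_by="sre-lead"))
print(len(gate.audit_log), "audit entries recorded")
```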

5. Connect to External Tools

You can link Kubiya with:

- Terraform Cloud/CLI

- GitHub (repos, actions, PR triggers)

- Jenkins or CircleCI

- Datadog and Prometheus for observability queries

- AWS, GCP, and Azure, with credentials scoped via Vault or cloud IAM roles

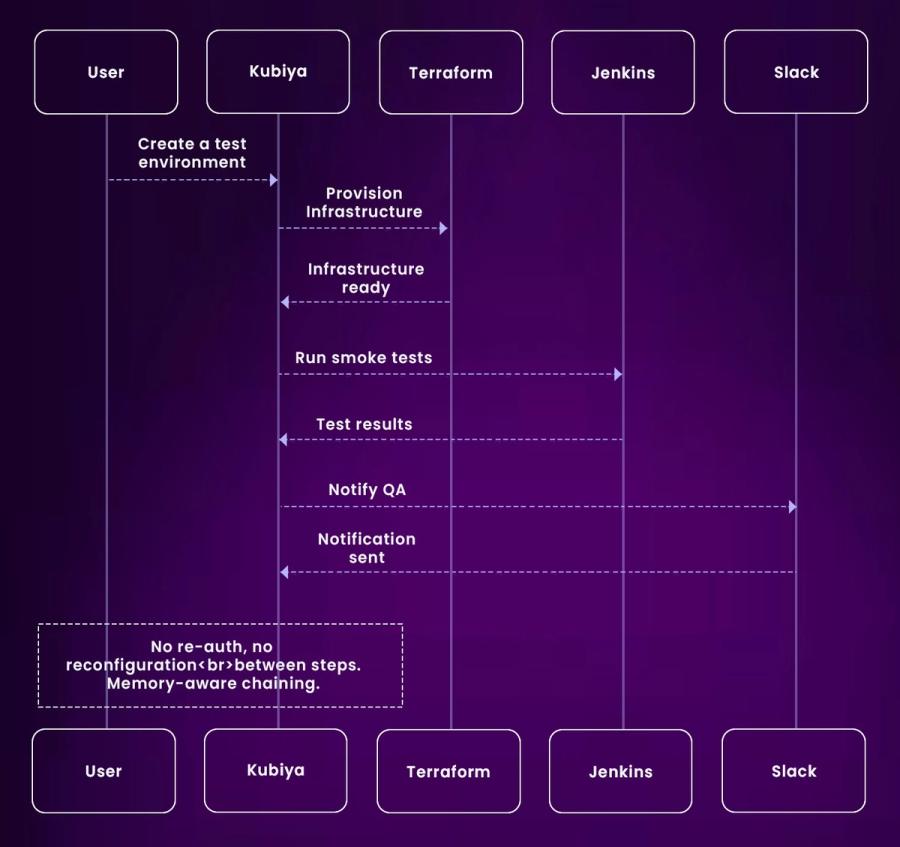

Once linked, Kubiya can execute compound workflows, like provisioning infrastructure, deploying a service, verifying logs, and notifying stakeholders, all from a single prompt.

Chaining commands is where Kubiya truly excels. A user might say, “create a test environment, run smoke tests, notify QA.” This three-step process gets mapped to three backend actions, each contextualized, approved (if needed), and reported back, all within Slack, without touching any CLI or portal.
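A chained prompt like this can be modeled as an ordered list of steps that share context and stop at the first failure. The sketch below uses stub functions in place of real backend actions; `run_chain` and the step functions are hypothetical and only illustrate the sequencing and reporting.

```python
def run_chain(steps, context):
    """Run steps in order, passing context along; stop at the first failure and report each result."""
    report = []
    for name, action in steps:
        try:
            context = action(context)
            report.append((name, "ok"))
        except Exception as error:
            report.append((name, f"failed: {error}"))
            break
    return context, report


# Stub actions standing in for real provisioning, testing, and notification backends.
def create_test_environment(ctx):
    return {**ctx, "environment": "test-env-1"}


def run_smoke_tests(ctx):
    if "environment" not in ctx:
        raise RuntimeError("no environment to test")
    return {**ctx, "smoke_tests": "passed"}


def notify_qa(ctx):
    return {**ctx, "notified": ["qa"]}


steps = [
    ("create test environment", create_test_environment),
    ("run smoke tests", run_smoke_tests),
    ("notify QA", notify_qa),
]
final_context, report = run_chain(steps, {})
for name, status in report:
    print(f"{name}: {status}")
```

Stopping on the first failure matters here: QA should not be notified about an environment whose smoke tests never ran.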

Conclusion

AI Ops isn’t about eliminating DevOps engineers. It’s about offloading their cognitive load, reducing repetitive toil, and making infrastructure interaction more fluid and intelligent. Kubiya does this not with a flashy dashboard or predictive graphs, but by turning your chat platform into a command center that is context-aware, memory-retentive, and role-secure.

By narrowing its focus to the everyday workflows DevOps teams actually use, and wrapping them in conversational interfaces, Kubiya makes AI Ops usable. It’s not a monolith you must learn. It’s a teammate you already know how to talk to.

Start small. Automate a single sandbox request. Add approval gates. Track who does what. Watch how much faster your team moves when the tools remember you.

FAQs

1. Is Kubiya a replacement for CI/CD tools like GitHub Actions or ArgoCD?

Not at all. Kubiya integrates with them and allows developers to trigger or observe pipelines contextually without needing access to the CI system.

2. How does Kubiya handle cloud credentials?

Kubiya integrates with secret managers and uses permission-scoped execution policies, ensuring that sensitive actions are bound to user roles and logged appropriately.

3. Is Kubiya open source or SaaS?

Kubiya is currently SaaS-first, but hybrid and enterprise deployment options are on the roadmap, especially for industries with regulatory concerns.

4. Can it be used outside Slack?

Slack is its most mature interface, but support for Microsoft Teams and Discord is actively being developed.

About the author

Amit Eyal Govrin

Amit oversaw strategic DevOps partnerships at AWS, where he repeatedly encountered industry-leading DevOps companies struggling with similar pain points: the self-service developer platforms they had created were only as effective as their end-user experience. In other words, self-service is not a given.